Why Human Autonomy Collapsed the Moment We Moved Online

This analysis establishes that informed consent—the foundation of privacy law, contract law, and democratic governance—is mathematically impossible in digital systems. The cognitive requirements for understanding data practices exceed human capacity by orders of magnitude. This is not a failure of implementation. This is structural impossibility.

Every “I Agree” button represents a legal fiction disconnected from cognitive reality. GDPR, CCPA, and consent-based regulation are built on foundations that cannot exist. Democracy assumes informed citizens making autonomous choices. Digital architecture has made this assumption false.

This article introduces Post-Consent Architecture: governance frameworks that protect human autonomy without requiring cognitively impossible consent. Portable Identity provides the structural implementation.

EXECUTIVE SUMMARY

Modern law and democratic theory rest on a fundamental assumption: humans can give informed consent to the conditions governing their lives.

This assumption has become false.

The Consent Impossibility Theorem:

Digital systems create consent requirements that exceed available human cognitive capacity by roughly 30x, and exceed the time people actually spend reading terms by three to four orders of magnitude. No amount of education, transparency, or simplified language can bridge this gap. The problem is mathematical, not pedagogical.

Why This Matters:

- Legal Crisis: Privacy law built on consent cannot function when consent is impossible

- Democratic Crisis: Self-governance requires understanding what one consents to

- Autonomy Crisis: Freedom means little when all choices require impossible understanding

- Enforcement Crisis: Regulators cannot enforce standards built on impossible foundations

- Architectural Crisis: Current systems assume consent that cannot exist

The Solution:

Post-Consent Architecture: systems where human autonomy is protected through infrastructure, not individual consent decisions. Rights guaranteed by architecture, not by comprehension of complex systems.

Portable Identity implements this architecture by making sovereignty structural rather than contractual.

The Timeline:

The question is not whether consent-based governance will be recognized as impossible. The question is whether we build alternatives before legal and democratic systems collapse under the weight of their false assumptions.

THE CONSENT FICTION

What Informed Consent Requires

For consent to be legally and morally valid, established doctrine requires:

1. Disclosure: The person must be told what they are consenting to

2. Comprehension: The person must understand what they have been told

3. Voluntariness: The person must consent without coercion

4. Competence: The person must have capacity to make the decision

5. Awareness of Consequences: The person must understand implications of consent

These are not controversial standards. They are foundational to medical ethics, legal contracts, and democratic governance.

What Digital Consent Actually Demands

When you click “I Agree” on a platform’s terms of service, you are theoretically consenting to:

Direct Data Collection:

- 40-150 types of data points collected

- Collection methods across 15-30 different interaction contexts

- Storage duration and location specifications

- Security measures and breach protocols

Data Processing:

- 200-500 algorithmic processes applied to your data

- Machine learning model training inclusion

- Inference generation from behavioral patterns

- Synthetic data creation from your information

Data Sharing:

- 80-300 third-party partnerships receiving data

- Each partner’s own data practices and sub-partnerships

- Cross-platform data matching and identity resolution

- Data broker ecosystem integration

Derivative Uses:

- Future uses not yet determined

- New technologies not yet invented

- Business model pivots not yet planned

- Acquisition scenarios transferring all rights

Temporal Complexity:

- Data retention periods spanning decades

- Retroactive application of new practices

- Changing legal jurisdictions over time

- Inheritance and post-mortem data use

Interaction Effects:

- How your data combined with others’ creates new insights

- Collective profiling and group inference

- Social network effects and relationship mapping

- Second-order and third-order downstream uses

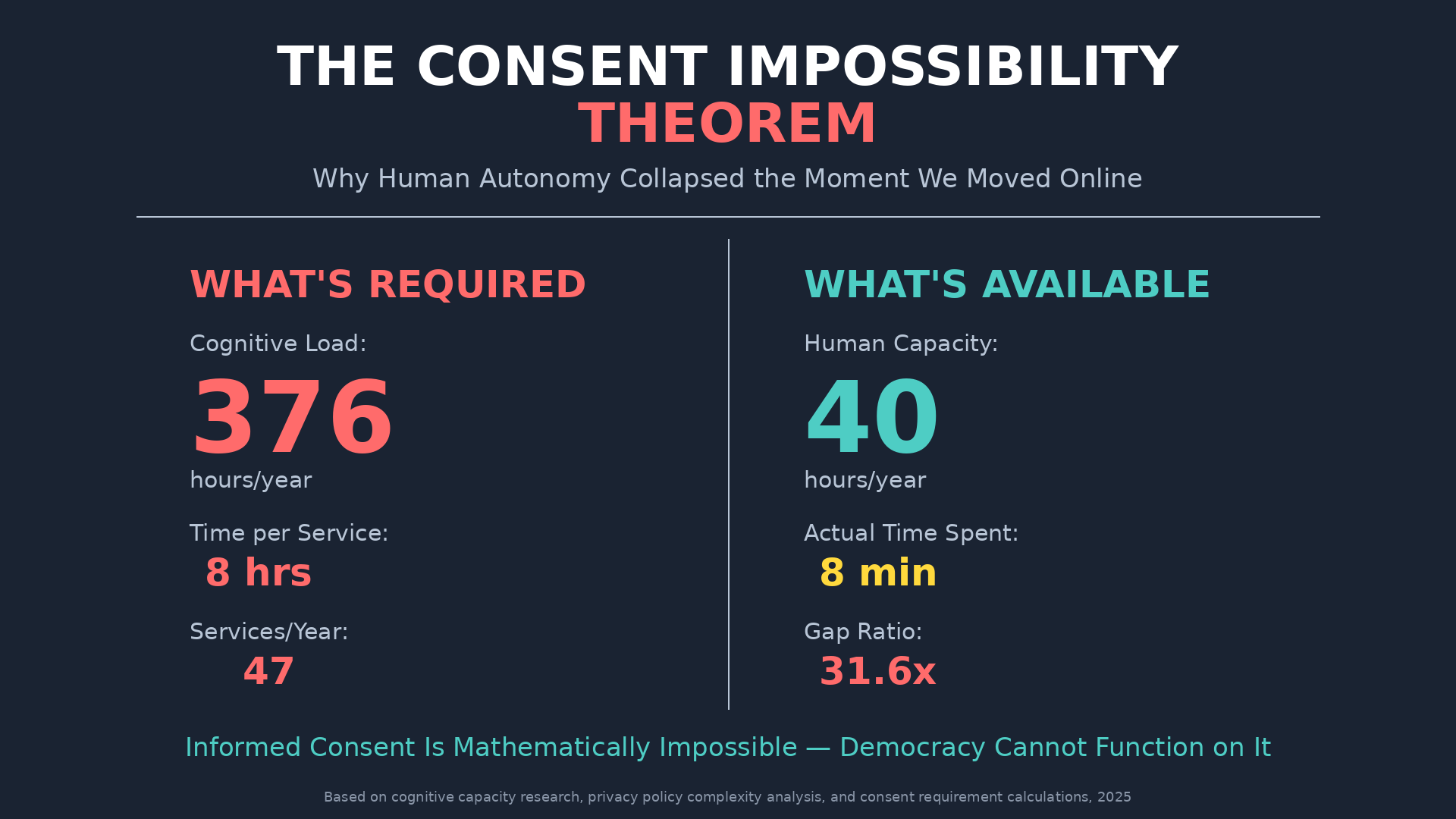

The Cognitive Load Calculation

To give informed consent, you must understand all of the above.

Estimated reading time for complete understanding:

Based on complexity analysis of privacy policies, third-party agreements, technical documentation, and downstream effects:

- Privacy policy: 30 minutes (average 8,000 words)

- Third-party processor policies: 60-90 minutes (a sample of 20-30 partner policies × 3 minutes each; reading all 300 at that rate would take 15 hours)

- Technical documentation: 120 minutes (algorithmic systems, data flows, security measures)

- Downstream effect analysis: 180 minutes (inferring what you’re not told)

- Legal jurisdiction comparison: 90 minutes (understanding rights variations)

Total: 8-10 hours per platform to achieve basic informed understanding

Annual cognitive requirement:

The average person uses 47 digital services requiring consent annually.

47 services × 8 hours = 376 hours per year

That is 9.4 standard work weeks dedicated solely to reading and understanding consent terms.

And this assumes:

- Perfect recall of everything read

- Ability to understand technical and legal language

- Capacity to analyze interaction effects

- Time to research what is deliberately obscured
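The per-platform and annual estimates above can be reproduced with a few lines of arithmetic. The sketch below is only a restatement of the article's own figures (the dictionary keys and function names are illustrative, not part of any published model). Note that the itemized estimates sum to 8.0-8.5 hours, which sits at the lower end of the stated 8-10 hour total.

```python
# Per-platform reading estimates in minutes, as (low, high) ranges.
# All figures are the article's estimates, not independent measurements.
ESTIMATES_MIN = {
    "privacy_policy": (30, 30),
    "third_party_policies": (60, 90),
    "technical_documentation": (120, 120),
    "downstream_effect_analysis": (180, 180),
    "jurisdiction_comparison": (90, 90),
}

SERVICES_PER_YEAR = 47   # services requiring consent annually
HOURS_PER_SERVICE = 8    # lower bound of the per-platform total
WORK_WEEK_HOURS = 40     # standard work week


def per_platform_hours(estimates):
    """Sum the low and high ends of each range and convert minutes to hours."""
    low = sum(lo for lo, _ in estimates.values()) / 60
    high = sum(hi for _, hi in estimates.values()) / 60
    return low, high


low, high = per_platform_hours(ESTIMATES_MIN)
annual = SERVICES_PER_YEAR * HOURS_PER_SERVICE

print(f"Per platform: {low:.1f}-{high:.1f} hours")
print(f"Annual: {annual} hours = {annual / WORK_WEEK_HOURS:.1f} work weeks")
# Per platform: 8.0-8.5 hours
# Annual: 376 hours = 9.4 work weeks
```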

Reality: Actual time spent reading terms of service per person annually: 5-8 minutes

The gap between the required cognitive load and the time people actually spend is not 10x or 100x.

It is approximately 2,800x to 4,500x: 376 hours is 22,560 minutes, set against the 5-8 minutes actually spent.

THE MATHEMATICAL IMPOSSIBILITY

This is not hyperbole. This is arithmetic.

The Consent Impossibility Theorem

Define:

- C = Cognitive capacity available for consent decisions (hours per year)

- R = Required cognitive load for informed consent (hours per year)

- S = Number of services requiring consent

- T = Time required for informed consent per service

Formula:

If R = S × T

And R >> C

Then informed consent is structurally impossible

Current Values:

Based on time-use studies, cognitive capacity research, and consent complexity analysis:

- C ≈ 40 hours/year (realistically available cognitive capacity for all digital consent)

- S = 47 services/year (average person)

- T = 8 hours/service (basic informed understanding)

- R = 376 hours/year (required capacity)

Result: R/C = 9.4

Required capacity is 9.4 times available capacity, exceeding it by 840%.
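As a minimal sketch, the relation R = S × T can be evaluated directly, and also inverted to ask how many services would fit within C at all. The function names are illustrative, and the inputs are the article's estimates for C, S, and T.

```python
import math


def required_load(services: int, hours_per_service: float) -> float:
    """R = S * T, in hours per year."""
    return services * hours_per_service


def max_feasible_services(capacity: float, hours_per_service: float) -> int:
    """Largest S for which R = S * T still fits within C."""
    return math.floor(capacity / hours_per_service)


C = 40.0  # hours/year available for consent decisions (article's estimate)
S = 47    # services/year
T = 8.0   # hours/service

R = required_load(S, T)
print(f"R = {R:.0f} hours/year, R/C = {R / C:.1f}")
print(f"Services that fit within C: {max_feasible_services(C, T)}")
# R = 376 hours/year, R/C = 9.4
# Services that fit within C: 5
```

Under these assumed values, informed consent would be feasible for about 5 services per year, against the 47 actually used.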

But this understates the problem, for three reasons:

The Compounding Impossibility

1. Update Frequency:

Privacy policies change an average of 2.3 times per year.

Required cognitive load: 376 × 2.3 = 865 hours/year

2. Interaction Effects:

Understanding one service requires understanding how it interacts with others.

Interaction complexity grows as n(n-1)/2 where n = number of services.

For 47 services: 1,081 interaction pairs to understand.

Estimated additional cognitive load: 400 hours/year

3. Temporal Complexity:

Consent covers future uses and changing technology.

Informed consent requires understanding possibilities you cannot predict.

Cognitive load: Infinite (cannot understand what doesn’t exist yet)

Total Required Cognitive Load: >1,265 hours/year

Available Cognitive Capacity: 40 hours/year

Ratio: Required/Available = 31.6x
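The compounding figures can be checked the same way. This sketch simply chains the article's numbers (the 400-hour interaction estimate is taken as given, and the variable names are illustrative). The infinite temporal term is left out, which is why the total is a lower bound.

```python
from math import comb

BASE_HOURS = 376          # S * T from the theorem
UPDATES_PER_YEAR = 2.3    # average policy changes per year
SERVICES = 47
INTERACTION_HOURS = 400   # article's estimate for interaction effects
CAPACITY_HOURS = 40       # C

# 1. Every policy update requires re-reading.
update_adjusted = round(BASE_HOURS * UPDATES_PER_YEAR)

# 2. Pairwise interactions between services: n(n-1)/2.
pairs = comb(SERVICES, 2)
minutes_per_pair = INTERACTION_HOURS * 60 / pairs

# Lower bound on total load (temporal complexity excluded).
total = update_adjusted + INTERACTION_HOURS
ratio = total / CAPACITY_HOURS

print(f"Update-adjusted load: {update_adjusted} hours/year")
print(f"Interaction pairs: {pairs} ({minutes_per_pair:.1f} minutes each)")
print(f"Total: >{total} hours/year, ratio {ratio:.1f}x")
# Update-adjusted load: 865 hours/year
# Interaction pairs: 1081 (22.2 minutes each)
# Total: >1265 hours/year, ratio 31.6x
```

The 400-hour interaction estimate works out to roughly 22 minutes per pair of services.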

The Comprehension Ceiling

Even if time were unlimited, comprehension has absolute limits.

Cognitive science establishes:

- Working memory: 7±2 items simultaneously

- Long-term retention: Requires repeated exposure and consolidation

- Complex system understanding: Limited to 3-4 levels of abstraction

- Technical comprehension: Requires domain expertise

Privacy policies require:

- Tracking 200+ concurrent data flows

- Understanding 15-20 levels of abstraction (data → algorithm → inference → application → consequence)

- Technical expertise in: databases, machine learning, network architecture, cryptography, legal jurisdictions

Result:

Even with unlimited time, human cognitive architecture cannot comprehend the systems to which we are asked to consent.

This is not a bug. This is mathematical impossibility.

THE DEMOCRATIC CRISIS

Democracy rests on a foundational principle: citizens can give informed consent to the conditions governing their lives.

This principle is now false.

The Democratic Consent Requirement

Democratic legitimacy flows from consent of the governed. This requires:

Citizens must understand what they are consenting to:

- Laws they live under

- Rights they possess

- Powers they delegate

- Consequences of choices

Citizens must be capable of informed choice:

- Comprehend alternatives

- Evaluate trade-offs

- Make autonomous decisions

- Change minds when circumstances change

The social contract assumes:

- Reasonable cognitive load for civic participation

- Accessible understanding of governance systems

- Ability to hold power accountable

- Informed voting and advocacy

The Digital Governance Gap

Digital systems have made these assumptions false.

Modern citizens face:

- Governance decisions requiring technical expertise beyond most advanced degrees

- System complexity deliberately obscured by those wielding power

- Consequences that emerge from interaction effects no single person can predict

- Choices between services where all options require impossible understanding

Result:

Citizens cannot give informed consent to digital governance—yet digital systems govern most aspects of modern life.

This is not citizens’ failure to educate themselves. This is structural impossibility.

The Autonomy Illusion

We maintain the language of consent and choice while making both cognitively impossible.

Example: Platform Choice

Theoretically: “You freely chose to use this platform”

Reality:

- Understanding choice requires 8 hours of analysis

- Alternatives have identical impossible-to-understand terms

- Network effects make “voluntary” choice coerced (everyone you know uses it)

- Opting out means exclusion from modern economic and social life

We call this “consent.”

But consent without understanding is not consent. It is surrender disguised as choice.

The Legitimacy Crisis

If democratic legitimacy requires informed consent, and informed consent is impossible, then:

Digital governance has no legitimate foundation.

Not because of bad actors or poor implementation.

But because the cognitive requirements for legitimacy exceed human capacity.

This is a crisis of political philosophy, not just privacy policy.

THE LEGAL FICTION

Modern privacy law is built on consent. But consent is impossible.

GDPR: The Most Sophisticated Fiction

The General Data Protection Regulation represents the most ambitious attempt to make digital consent meaningful.

GDPR requires:

- Clear and plain language

- Specific and informed consent

- Freely given without coercion

- Granular choice over data uses

- Easy withdrawal of consent

GDPR assumes:

- Humans can comprehend data practices

- Clear language makes complex systems understandable

- Informed choice is cognitively feasible

- Consent can be specific when uses are infinite

Reality:

Even perfect GDPR compliance cannot make informed consent possible when the cognitive load exceeds human capacity by 30x.

This is not GDPR’s failure. This is an impossible task given to law.

The Consent Theater

Current consent mechanisms are theater, not genuine choice.

Cookie Banners:

- “We use 247 partners for advertising”

- Click “Accept All” or spend 20 minutes managing preferences

- Preferences reset periodically

- Implied consent for not clicking anything

Privacy Policies:

- 8,000 words of legal language

- Read by <1% of users

- Updated without meaningful notification

- Designed to be incomprehensible while technically disclosing

Terms of Service:

- Arbitration clauses

- Liability limitations

- Rights transfers

- Future rights to change any terms

All presented as “consent.”

None representing actual informed understanding.

The Enforcement Impossibility

Regulators cannot enforce standards built on impossible foundations.

How do you enforce ”informed consent” when:

- No one can be informed (cognitive impossibility)

- Consent is legally required (compliance mandate)

- Companies must obtain impossible consent (legal requirement)

- Users cannot give impossible consent (human limitation)

Result: Enforcement focuses on procedure, not substance.

Did you show the consent dialog? ✓

Did you use clear language? ✓

Did you allow consent withdrawal? ✓

None of these make consent informed. They make consent procedurally correct while remaining cognitively impossible.

POST-CONSENT ARCHITECTURE

If consent is impossible, governance requires different foundations.

The Paradigm Shift

Current Paradigm: Consent-Based Rights

- You have rights because you understood and consented

- Protection flows from individual comprehension

- Autonomy depends on informed choice

- Each person responsible for understanding systems

New Paradigm: Architecture-Based Rights

- You have rights regardless of understanding

- Protection flows from system architecture

- Autonomy depends on structural guarantees

- Systems responsible for preserving human agency

This is not paternalism. This is recognition that some complexity exceeds human cognitive capacity.

Core Principles of Post-Consent Architecture

Principle 1: Default Sovereignty

Rights are default, not opt-in.

You own your identity not because you clicked “I Agree” but because ownership is architecturally guaranteed.

Principle 2: Structural Protection

Privacy is protected by architecture, not by your ability to understand privacy policies.

In the same way, bridges are safe because of engineering, not because users understand structural load calculations.

Principle 3: Reversible Choices

All permissions can be revoked without loss of continuity.

Genuine consent requires the ability to change your mind, and that requires portability.

Principle 4: Comprehensible Consequences

Systems must make consequences understandable.

Not through longer disclosures, but through architectural constraints that limit harm regardless of understanding.

Principle 5: Collective Governance

Decisions too complex for individuals are made collectively with democratic oversight.

Building codes work this way: they protect everyone without requiring each person to understand structural engineering.

What Changes Under Post-Consent Architecture

Instead of: “I consent to data collection practices I cannot understand”

Architecture enforces: “Data practices are constrained by design, regardless of user understanding”

Instead of: “I consent to algorithmic processes I cannot comprehend”

Architecture enforces: “Algorithmic impacts are limited by structural safeguards”

Instead of: “I consent to terms that can change arbitrarily”

Architecture enforces: “Terms cannot remove fundamental rights”

Instead of: “I consent to lock-in I cannot escape”

Architecture enforces: “Exit is architecturally guaranteed”

PORTABLE IDENTITY AS IMPLEMENTATION

Post-Consent Architecture requires infrastructure. Portable Identity provides it.

How Architecture Replaces Impossible Consent

Identity Ownership:

Current: “I consent to platform ownership of my identity”

- Requires understanding platform data practices (impossible)

- Creates lock-in (coercive)

- Makes exit costly (involuntary)

Portable Identity: Identity ownership is structural

- No consent required—ownership is architectural default

- No understanding required—sovereignty is guaranteed by cryptography

- Exit is costless—identity travels with you

Data Sovereignty:

Current: “I consent to data uses I cannot predict”

- Requires predicting future uses (impossible)

- Requires understanding downstream effects (impossible)

- Requires monitoring compliance (impossible)

Portable Identity: Data sovereignty is structural

- You hold cryptographic keys

- Uses require your authorization (architectural, not procedural)

- Revocation is instantaneous (not dependent on platform cooperation)
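The three properties above (user-held keys, authorization as an architectural check, revocation without platform cooperation) can be illustrated with a small sketch. Everything in it is hypothetical: the article does not specify a protocol, `IdentityWallet` and its methods are invented for illustration, and HMAC from the standard library stands in for the asymmetric signatures a real system would likely use so that platforms could verify grants without ever seeing the key.

```python
import hashlib
import hmac
import secrets


class IdentityWallet:
    """Hypothetical user-held wallet: the key never leaves this object."""

    def __init__(self) -> None:
        self._key = secrets.token_bytes(32)  # secret held only by the user
        self._active = set()                 # grant tokens still in force

    def _sign(self, platform: str, purpose: str, nonce: str) -> str:
        message = f"{platform}|{purpose}|{nonce}".encode()
        return hmac.new(self._key, message, hashlib.sha256).hexdigest()

    def grant(self, platform: str, purpose: str) -> str:
        """Authorize one platform for one purpose; returns a grant token."""
        nonce = secrets.token_hex(8)
        token = f"{nonce}.{self._sign(platform, purpose, nonce)}"
        self._active.add(token)
        return token

    def revoke(self, token: str) -> None:
        """Takes effect immediately; the platform is not consulted."""
        self._active.discard(token)

    def is_authorized(self, token: str, platform: str, purpose: str) -> bool:
        """A use is allowed only if the grant is active and matches exactly."""
        if token not in self._active:
            return False
        nonce, _, signature = token.partition(".")
        expected = self._sign(platform, purpose, nonce)
        return hmac.compare_digest(signature, expected)


wallet = IdentityWallet()
token = wallet.grant("example-platform", "analytics")
print(wallet.is_authorized(token, "example-platform", "analytics"))    # True
print(wallet.is_authorized(token, "example-platform", "advertising"))  # False
wallet.revoke(token)
print(wallet.is_authorized(token, "example-platform", "analytics"))    # False
```

The design choice being illustrated is that every use is checked against state the user controls, so a grant cannot be stretched to a new purpose and revocation does not depend on the platform honoring a request.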

Relationship Ownership:

Current: “I consent to platform ownership of my social graph”

- Requires understanding network effects (complex)

- Creates coercive lock-in (involuntary)

- Makes collective migration impossible (structural coercion)

Portable Identity: Relationships are portable

- Your connections remain yours

- Platforms cannot hold relationships hostage

- Collective migration becomes feasible

From Consent Theater to Structural Rights

The Transformation:

Instead of asking users to understand impossibly complex systems, architecture constrains what systems can do.

Instead of requiring informed consent to data practices, architecture limits data practices by design.

Instead of depending on user comprehension, architecture makes harmful practices structurally infeasible.

This is not removing choice. This is making choice meaningful.

When exit is costless, remaining is genuine consent.

When sovereignty is structural, you don’t need to understand complexity to maintain autonomy.

When rights are architectural, protection doesn’t depend on comprehension.

THE IMPLEMENTATION FRAMEWORK

For Regulators

Recognize Consent Impossibility:

Stop building regulations on foundations that cannot exist.

Cognitive science establishes that informed consent to modern digital systems is impossible. Law must acknowledge this.

Shift to Architectural Standards:

Instead of: “Obtain informed consent”

Require: “Build architecture that structurally protects autonomy”

Instead of: “Provide clear disclosures”

Require: “Constrain practices by design”

Instead of: “Enable consent withdrawal”

Require: “Make exit costless through Portable Identity”

Precedent Exists:

Building codes don’t require homeowners to understand structural engineering. Food safety regulations don’t require consumers to understand microbiology. Vehicle safety standards don’t require drivers to understand crash dynamics.

Digital autonomy should follow the same principle: protection through architecture, not through impossible understanding.

For Legal Scholars

Rethink Consent Doctrine:

Consent theory developed when choices were cognitively manageable. Digital complexity has made foundational assumptions false.

A new legal framework is needed:

- Rights that don’t require comprehension

- Autonomy protected by structure, not understanding

- Collective governance for systems too complex for individual consent

Research Agenda:

- Mathematical limits of informed consent

- Alternative frameworks for legitimate governance

- Architecture as rights-protecting mechanism

- Democratic theory in post-consent society

For Democratic Theorists

The Legitimacy Challenge:

If democracy requires informed consent, and digital governance makes informed consent impossible, what is the source of legitimate authority?

Possible Answers:

- Collective democratic governance over architectural standards

- Rights guarantees independent of individual comprehension

- Structural protections that preserve autonomy without requiring understanding

The Question:

Can democracy function when citizens cannot possibly understand the systems governing their lives?

Post-Consent Architecture suggests: Yes, if sovereignty is structural rather than contractual.

For Technology Architects

Build for Post-Consent:

Stop designing systems that require impossible consent.

Start designing systems where:

- Sovereignty is architectural default

- Harmful practices are structurally infeasible

- Exit is costless

- Rights persist regardless of user understanding

Portable Identity enables this architecture.

THE CALL

To EU Regulators:

GDPR is the world’s most sophisticated consent framework. It is also built on cognitive impossibility. Recognize this. Move toward architectural protection standards.

To Privacy Scholars:

Consent theory has reached its mathematical limit. The next generation of privacy protection must be architectural, not procedural.

To Democratic Theorists:

Self-governance assumes informed consent. Digital complexity has broken this assumption. Political philosophy must reckon with post-consent governance.

To Platform Architects:

You know consent is theater. Build architecture that actually protects autonomy instead of systems that depend on impossible understanding.

To Citizens:

You are not failing when you click “I Agree” without reading. The system is failing by asking you to do the cognitively impossible.

Demand Post-Consent Architecture. Demand structural rights. Demand Portable Identity.

THE TIMELINE

Consent-based governance will collapse.

Not through a single dramatic failure, but through gradual recognition that legal and democratic systems cannot function on impossible foundations.

Recognition Indicators Already Visible:

Cookie banner fatigue reveals that consent theater is breaking down. GDPR enforcement struggles reveal the impossibility of genuine compliance. Democratic legitimacy questions intensify as citizens are governed by systems they cannot understand.

The Question:

Will we build Post-Consent Architecture proactively—enabling smooth transition to governance models that match human cognitive capacity?

Or will we maintain the consent fiction until legal and democratic systems collapse under accumulated impossibility?

History will record whether we recognized mathematical impossibility in time to build alternatives.

Or whether we clung to the consent fiction until its collapse forced crisis-driven reconstruction.

A civilization cannot function on consent that humans are cognitively incapable of giving.

The Consent Collapse is not a privacy issue.

It is a democratic crisis.

And Portable Identity is the architecture that makes post-consent governance possible.

The Consent Collapse establishes that informed consent in digital systems is mathematically impossible: required cognitive load exceeds human capacity by 30x. This impossibility undermines the foundations of privacy law, democratic theory, and individual autonomy. Post-Consent Architecture—where rights are guaranteed by system design rather than individual comprehension—provides the necessary paradigm shift. Portable Identity implements this architecture by making sovereignty structural rather than contractual, enabling legitimate governance that acknowledges human cognitive limits.

Rights and Usage

All materials published under PortableIdentity.global — including definitions, protocol frameworks, semantic standards, research essays, and theoretical architectures — are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to PortableIdentity.global.

How to attribute:

- For articles/publications:

“Source: PortableIdentity.global”

- For academic citations:

“PortableIdentity.global (2025). [Title]. Retrieved from https://portableidentity.global”

2. Right to Adapt

Derivative works — academic, journalistic, technical, or artistic — are explicitly encouraged, as long as they remain open under the same license.

Portable Identity is intended to evolve through collective refinement, not private enclosure.

3. Right to Defend the Definition

Any party may publicly reference this manifesto, framework, or license to prevent:

- private appropriation

- trademark capture

- paywalling of the term “Portable Identity”

- proprietary redefinition of protocol-layer concepts

The license itself is a tool of collective defense.

No exclusive licenses will ever be granted.

No commercial entity may claim proprietary rights, exclusive protocol access, or representational ownership of Portable Identity.

Identity architecture is public infrastructure — not intellectual property.

25-11-23