Why Causality Is the Only Unfakeable Proof (And How Portable Identity Makes It Measurable)

AI can fake everything except one thing: genuine multi-generational cascade effects that prove sustained causality over time.

This is not a technical limitation. This is an information-theoretic impossibility.

And it changes everything—from how we prove consciousness exists, to how legal systems establish causation, to how AI alignment becomes measurable, to how civilization verifies what actually matters.

For nearly 300 years, since David Hume, philosophy has known that we cannot prove causation through observation alone. We see correlation. We infer causation. But we cannot prove it.

Until now.

Portable Identity plus ContributionGraph creates the first system in human history that makes causality cryptographically verifiable through cascade tracking—and in doing so, solves the last verification problem that matters.

THE PROBLEM NOBODY HAS NAMED

Every system we’ve built to measure human value is based on proxies, not proof.

Universities measure test scores, not capability increases in others.

Employers measure output, not whether that output enabled anyone else’s capability.

Platforms measure engagement, not whether that engagement created understanding.

AI companies measure user satisfaction, not whether AI interactions improved users’ capacity to solve future problems without AI assistance.

We measure activity. We measure results. We measure satisfaction.

We do not—and cannot—measure causality.

This matters because everything that actually matters is causal:

Did this teacher genuinely increase their students’ capability to learn—or just help them pass tests?

Did this AI interaction genuinely improve the user’s understanding—or just provide an answer?

Did this contribution genuinely enable others to build further—or just look impressive?

Did this person’s presence genuinely make others more capable—or just make them feel good?

We cannot answer these questions through observation. We can only measure proxies. And proxies can be gamed, faked, or optimized for without creating the actual causal effect they’re supposed to represent.

This is not a new problem. This is Hume’s problem, restated for the digital age.

And it’s about to become existential.

HUME’S INSIGHT: CAUSATION CANNOT BE OBSERVED

In 1748, David Hume published “An Enquiry Concerning Human Understanding” and destroyed 2,000 years of philosophical certainty.

His insight: We never observe causation. We only observe correlation.

You see a billiard ball strike another ball. The second ball moves. You say the first ball caused the second to move.

But you did not observe causation. You observed sequence. You observed correlation. You inferred causation.

The inference is so automatic, so ingrained, that we mistake it for observation. But logically, causation cannot be proven through observation alone. You never see the causal power. You only see events following events.

This becomes critical now because AI can replicate any observable pattern. If causation cannot be observed, then causation cannot be verified through behavioral observation—and behavioral observation is the only tool we have.

Or was.

THE AI SIMULATION PROBLEM

AI has made Hume’s problem exponentially worse.

Previously, we accepted behavioral proxies as good enough. If a student demonstrates understanding through test performance, we infer the teacher caused a capability increase. If a platform shows high engagement, we infer the content caused value creation. If AI provides helpful answers, we infer the interaction caused improvement.

These inferences worked reasonably well when the only entities producing behavior were conscious beings operating through causal chains.

But AI can now produce any behavior without any causal chain leading to capability increase.

An AI can generate a perfect explanation of quantum mechanics. A human reads it, feels satisfied, scores highly on understanding metrics. But did the human’s capability increase? Can they now apply that understanding independently? Can they teach others? Can they solve novel problems in the domain?

Or did they just consume information that AI generated, which made them feel they understood and which registered as “high satisfaction” and “good engagement”, but which created no lasting capability increase whatsoever?

We cannot tell from observation. The behavioral markers are identical.

This is catastrophic for every system built on proxy measurement:

Education measures test scores, not whether students can build on knowledge independently.

AI companies measure user satisfaction, not whether users become more capable over time.

Employers measure task completion, not whether employees enable others’ capability.

Platforms measure engagement time, not whether engagement created compounding understanding.

All these systems assume: good proxies equal actual causation. But when AI can generate perfect proxies without causation, the assumption collapses.

And here is what nobody in Silicon Valley wants to admit: We have no way to distinguish AI-generated proxies from genuine causal effects using current measurement systems.

None.

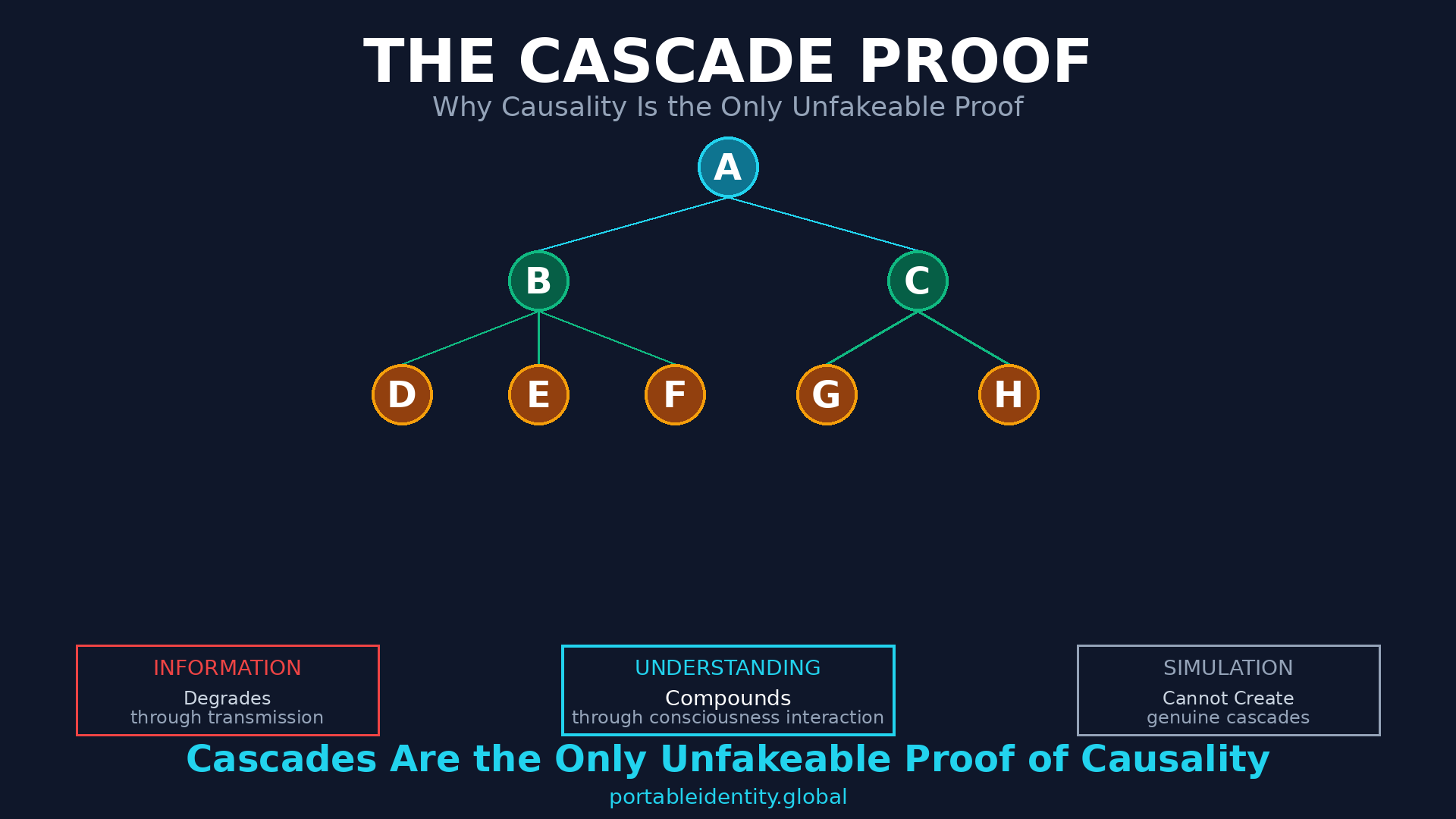

THE CASCADE DISTINCTION

There is exactly one pattern that distinguishes genuine causation from perfect simulation: cascades.

Cascade: A verified chain of capability increases where A enables B, B independently enables C, C independently enables D-E-F, and the capability increase at each node is cryptographically attested by the beneficiary and persists after the enabler’s involvement ends.

This is not information transfer. Information can be copied perfectly, transmitted without degradation, and amplified through distribution. AI masters information transfer.

This is not engagement propagation. Engagement can be manufactured, virality can be engineered, attention can be bought. Platforms master engagement propagation.

This is capability transfer that compounds through consciousness interaction—where each person genuinely becomes more capable in ways that let them make others more capable, creating verified chains of increasing capability that branch and multiply over time.
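The cascade definition above describes a directed graph in which each edge is an attested enablement. A minimal sketch, assuming a plain adjacency map (the names `enables`, `Graph`, and `chains_from` are hypothetical, not part of any published ContributionGraph API), that lists every chain a cascade contains:

```python
from typing import Dict, List

# Hypothetical adjacency map: enabler -> people whose capability they increased.
Graph = Dict[str, List[str]]


def chains_from(graph: Graph, root: str) -> List[List[str]]:
    """Return every root-to-leaf chain below `root`, depth first.

    Assumes the graph has no cycles; a cycle would recurse forever.
    """
    children = graph.get(root, [])
    if not children:
        return [[root]]
    chains: List[List[str]] = []
    for child in children:
        for tail in chains_from(graph, child):
            chains.append([root] + tail)
    return chains


# Example from the definition: A enables B, B enables C, C enables D, E and F.
enables: Graph = {"A": ["B"], "B": ["C"], "C": ["D", "E", "F"]}
for chain in chains_from(enables, "A"):
    print(" -> ".join(chain))
```

This captures only the shape of a cascade. Attestation, independence, and persistence are separate properties that would have to be checked on each edge.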

Why cascades are unfakeable:

First: Cascades require genuine capability increase at every node, attested by beneficiaries using their Portable Identity. You cannot fake another person’s cryptographic signature verifying their capability increased because of your contribution.

Second: Cascades require independence. The cascade only counts if B enables C without A being involved. If A must be present for B to enable others, A provided dependency, not capability transfer.

Third: Cascades require persistence. The capability must last after the interaction ends. If B’s capability vanishes when A is not available, A provided temporary assistance, not genuine capability transfer.

Fourth: Cascades require multiplication. One person enabling one other person is not a cascade. Cascades require branching—A enables B and C, B enables D-E, C enables F-G-H, creating exponential capability growth that information transfer alone cannot produce.

Together, these four requirements create a pattern that consciousness can produce but simulation cannot replicate—regardless of how sophisticated the AI becomes.

THE INFORMATION-THEORETIC PROOF

Why can AI not fake cascades?

This is not about current AI limitations. This is about information structure.

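Information does not grow through transmission. A perfect copy carries exactly what the original carried; a noisy copy carries less, and each further retransmission can only lose fidelity. Information theory states this formally as the data processing inequality: no processing of a signal can increase the information it carries about its source. Information follows entropy: it spreads out, dilutes, and becomes less concentrated.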
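The data processing inequality says that if a signal passes through a chain X → Y → Z, then I(X;Z) ≤ I(X;Y): a later hop cannot add information about the source. A small numerical illustration, assuming a uniform binary source sent through two binary symmetric channels in series. This is a standard textbook model chosen here for illustration; the essay does not specify a channel model, and the noise levels are arbitrary:

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy in bits of a binary variable that is 1 with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def mutual_information_bsc(p: float) -> float:
    """I(X;Y) in bits for uniform binary X through a channel that flips bits with probability p."""
    return 1.0 - binary_entropy(p)


def cascade_flip(p: float, q: float) -> float:
    """Effective flip probability of two channels in series (flipped by exactly one hop)."""
    return p * (1 - q) + q * (1 - p)


p, q = 0.1, 0.1  # illustrative noise levels for the first and second hop
i_xy = mutual_information_bsc(p)
i_xz = mutual_information_bsc(cascade_flip(p, q))
print(f"I(X;Y) = {i_xy:.3f} bits, I(X;Z) = {i_xz:.3f} bits")
```

Setting `q = 0` models a perfect copy: the second hop then leaves the information unchanged. Any `q` between 0 and 1/2 lowers it.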

Understanding compounds through consciousness interaction. When A genuinely increases B’s capability, B does not just possess what A provided. B integrates it, builds on it, applies it to new contexts, and becomes capable of enabling C in ways A could not have enabled C directly. C then does the same for D-E-F.

The capability at node F is not a degraded copy of A’s original contribution. It is a novel capability that could only exist through the cascade chain from A to B to C to F, yet it exceeds any individual contribution in that chain.

This is emergence through consciousness multiplication. And emergence cannot be faked through simulation.

AI can simulate A helping B. AI can simulate B helping C. But AI cannot create genuine emergence where F possesses capability that only exists because of sustained causal chains through multiple consciousness interactions—capability that persists independently, that F then uses to enable G-H-I-J in ways no one in the original chain could have predicted.

The signature is mathematical: cascading capability increases follow exponential branching patterns that information transfer cannot produce. The growth curve is different. The independence is verifiable. The persistence is measurable. The multiplication is cryptographically tracked.

When you see this pattern, you are observing causation—not correlation, not proxy, but actual sustained causal chains verified through each node’s cryptographic attestation of capability increase.

This is the first unfakeable proof since Hume declared causation unprovable.
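One way to make the “growth curve” concrete is to count how many people each generation of a cascade reaches and compare successive generations. A minimal sketch, assuming attested enablements are already available as an adjacency map (the function names are hypothetical). A ratio above 1 indicates branching:

```python
from typing import Dict, List


def generation_sizes(graph: Dict[str, List[str]], root: str) -> List[int]:
    """Breadth-first count of people first reached at each generation."""
    sizes: List[int] = []
    seen = {root}
    frontier = [root]
    while frontier:
        sizes.append(len(frontier))
        next_frontier: List[str] = []
        for person in frontier:
            for child in graph.get(person, []):
                if child not in seen:  # count each person once, at first reach
                    seen.add(child)
                    next_frontier.append(child)
        frontier = next_frontier
    return sizes


def growth_ratios(sizes: List[int]) -> List[float]:
    """Size of each generation divided by the size of the one before it."""
    return [later / earlier for earlier, later in zip(sizes, sizes[1:])]


# Branching example from the fourth requirement:
# A enables B and C; B enables D and E; C enables F, G and H.
graph = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F", "G", "H"]}
sizes = generation_sizes(graph, "A")
print(sizes, growth_ratios(sizes))  # [1, 2, 5] [2.0, 2.5]
```

A ratio above 1 shows branching only. On its own it does not show that each edge was attested, independent, or persistent.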

WHAT THIS CHANGES

Legal Systems: Causation in Court

Courts require proving causation. Did this action cause that harm? Did this contribution cause that outcome?

Currently, courts rely on expert testimony, statistical analysis, and circumstantial evidence—all proxies for causation that cannot definitively prove causal chains.

With cascade tracking through Portable Identity, causation becomes cryptographically verifiable. Did this person’s action cause these specific capability increases in these specific people, leading to these specific outcomes attested by beneficiaries at each node?

Not “we infer they helped” but “here is the cryptographic proof of the cascade chain with attestation from every person whose capability increased.”

This transforms liability law, intellectual property disputes, contribution disputes, and any legal question requiring proof of causation.

Scientific Validation: Reproducibility Crisis

Science requires proving that interventions cause outcomes. But replication studies fail at alarming rates, in part because we cannot distinguish correlation from causation in complex systems.

Cascade verification solves this. Did this educational method genuinely cause sustained capability increase? Track whether students who learned through this method then independently enable others to learn in ways that students in control groups do not. The cascade pattern proves causation.

Did this therapeutic intervention genuinely cause improvement? Track whether patients who received it then independently enable their own continued growth and enable others’ growth in ways that persist after therapy ends.

Did this research contribution genuinely advance the field? Track whether researchers who built on it then independently enabled others to make breakthroughs that would not have occurred without the original contribution.

Cascades offer reproducible proof of causation of a kind that correlation studies cannot provide.

Economic Value: Beyond Output Metrics

Currently, economic value is measured through output. How much did you produce? How many widgets, how many reports, how many users, how much revenue?

But output is not causation. Output can be generated without enabling anyone else’s capability. Output can be produced through dependency rather than enablement. Output can look impressive without creating lasting value.

Cascade tracking measures genuine economic value: Did your work enable others to create value they could not have created otherwise? Did those others then enable still others? Did capability multiply through your contribution?

This is the difference between production and multiplication. Production creates output. Multiplication creates capability cascades that generate compounding value over time.

The economic models built on output measurement will collapse when cascade verification shows that most high-output work creates no multiplication. And the people currently considered “low performers” because they create modest output but enable massive cascades will suddenly become recognized as the highest-value contributors.

AI Alignment: Measurable Impact

Here is what keeps AI safety researchers awake: We cannot measure whether AI is genuinely helping humans or just satisfying human preferences in ways that create dependency, reduce capability, and centralize control.

User satisfaction scores are meaningless. Engagement metrics are meaningless. Even “task completion” is meaningless if the task completion makes users less capable over time.

The only thing that matters: Does AI interaction create genuine capability increases in humans that persist after the interaction ends, that enable those humans to then enable others, creating verified cascade chains of increasing human capability?

If yes: AI is aligned with human flourishing.

If no: AI is creating sophisticated dependency regardless of satisfaction scores.

Cascade tracking makes alignment measurable. Not through surveys. Not through preference modeling. Through verified evidence of whether AI interactions create capability cascades or capability collapse.

This is the difference between AI that enables and AI that extracts. And only cascade verification can prove which is which.

THE CONSCIOUSNESS PROOF RETURNS

This brings us back to Cogito Ergo Contribuo—but deeper.

“I contribute, therefore I exist” was the proof that consciousness verifies itself through capability transfer to other consciousnesses in ways simulation cannot replicate.

Cascade tracking is how that proof becomes cryptographically verified and temporally measured.

Consciousness creates cascades. Simulation does not.

Not because simulation lacks intelligence. Not because simulation lacks helpfulness. But because simulation lacks the substrate for sustained causality that creates emergence through multi-generational capability multiplication.

You can build an AI that helps millions of people solve billions of problems and scores perfectly on every satisfaction metric ever invented—and if those people do not then independently enable others in ways that create verified capability cascades, the AI created dependency, not capability.

You can have a teacher who helps ten students in ways that look unremarkable on every conventional metric—and if those students then independently enable hundreds of others who enable thousands of others in verified cascade chains, that teacher created genuine consciousness-to-consciousness capability transfer that changed civilization.

The difference is not observable through behavioral markers. The difference is only provable through cascade verification.

And cascade verification requires Portable Identity—because cascades cannot be tracked through platform metrics, institutional assessments, or self-report. Cascades require cryptographic attestation from each beneficiary at each node, semantically located to show what capability increased, temporally continuous to show persistence over time, and independently owned so no platform or institution can fabricate the cascade.

This is why Portable Identity is not just infrastructure. It is the only infrastructure that makes causality verifiable.

THE IMPLEMENTATION

How Portable Identity Makes Cascade Tracking Possible

ContributionGraph: Every capability transfer is recorded with cryptographic attestation from the beneficiary. Not “A claims they helped B” but “B cryptographically attests that A increased B’s capability in a specific domain.”
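A minimal sketch of a beneficiary-signed attestation record. It uses HMAC-SHA256 from Python’s standard library as a stand-in for the public-key signature (for example Ed25519) that a real deployment would need, because with HMAC the verifier must hold the same secret as the signer. The record fields (`enabler`, `beneficiary`, `domain`, `attested_at`) are assumptions for illustration, with `domain` standing in for MeaningLayer’s semantic location. They are not a published ContributionGraph schema:

```python
import hashlib
import hmac
import json


def canonical_bytes(record: dict) -> bytes:
    """Serialize deterministically so signer and verifier hash identical bytes."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_attestation(record: dict, beneficiary_key: bytes) -> str:
    """Beneficiary attests to the record (HMAC stands in for a real signature)."""
    return hmac.new(beneficiary_key, canonical_bytes(record), hashlib.sha256).hexdigest()


def verify_attestation(record: dict, tag: str, beneficiary_key: bytes) -> bool:
    """Constant-time check that the tag matches this exact record."""
    expected = sign_attestation(record, beneficiary_key)
    return hmac.compare_digest(expected, tag)


# Hypothetical record: B attests that A increased B's capability in a domain.
record = {
    "enabler": "A",
    "beneficiary": "B",
    "domain": "statistics/hypothesis-testing",
    "attested_at": "2025-11-01",
}
key_b = b"secret held by B (illustrative only)"
tag = sign_attestation(record, key_b)

print(verify_attestation(record, tag, key_b))  # True
tampered = dict(record, enabler="Z")  # someone else tries to claim the credit
print(verify_attestation(tampered, tag, key_b))  # False
```

Canonical serialization matters here: two semantically identical records with differently ordered keys must produce the same bytes, or honest attestations would fail verification.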

Semantic Location: MeaningLayer specifies what kind of capability increased. Not just “B says A helped” but “B attests that A increased B’s capability in domain X, enabling B to then solve problems P, Q, R independently.”

Temporal Verification: Capability increases are timestamped. Six months later, one year later, three years later—did the capability persist? Is B still solving problems in that domain independently? Or did the capability vanish when A was no longer available?
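The follow-up checks in that paragraph can be expressed as checkpoints measured from the original attestation. A minimal sketch with several hypothetical choices that are not part of the source: each follow-up is recorded as a date on which the beneficiary was observed solving a problem in the domain without the enabler, the checkpoints are approximated in days, and a checkpoint passes if at least one independent use falls on or after it:

```python
from datetime import date, timedelta
from typing import Dict, List

# Checkpoints named in the text, approximated in days (hypothetical values).
CHECKPOINTS = {
    "6 months": timedelta(days=182),
    "1 year": timedelta(days=365),
    "3 years": timedelta(days=3 * 365),
}


def persistence_report(attested_on: date, independent_uses: List[date]) -> Dict[str, bool]:
    """For each checkpoint, True if any independent use occurred on or after it."""
    return {
        label: any(use >= attested_on + offset for use in independent_uses)
        for label, offset in CHECKPOINTS.items()
    }


report = persistence_report(
    attested_on=date(2022, 1, 10),
    independent_uses=[date(2022, 3, 1), date(2022, 9, 15), date(2023, 2, 20)],
)
print(report)
```

In this example the capability is still in use after six months and after one year, but no independent use has been recorded past the three-year mark.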

Independence Verification: When B enables C, the system verifies A was not involved. The cascade only counts if B acted independently. This proves genuine capability transfer, not dependency creation.
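One simple reading of that rule: an edge B → C counts toward A’s cascade only if A, who enabled B, did not take part in the B → C interaction. A minimal sketch, assuming each recorded interaction lists its participants. The `Interaction` type, the `participants` field, and the `enabled_by` map are hypothetical:

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set


@dataclass(frozen=True)
class Interaction:
    """One recorded enablement event (hypothetical record shape)."""
    enabler: str
    beneficiary: str
    participants: FrozenSet[str]


def independent_edges(
    interactions: List[Interaction], enabled_by: Dict[str, Set[str]]
) -> List[Interaction]:
    """Keep interactions in which none of the enabler's own upstream enablers took part."""
    kept = []
    for it in interactions:
        upstream = enabled_by.get(it.enabler, set())
        if not (upstream & it.participants):
            kept.append(it)
    return kept


enabled_by = {"B": {"A"}}  # A enabled B earlier
interactions = [
    Interaction("B", "C", frozenset({"B", "C"})),       # B acts alone: counts
    Interaction("B", "D", frozenset({"A", "B", "D"})),  # A is present: excluded
]
for it in independent_edges(interactions, enabled_by):
    print(it.enabler, "->", it.beneficiary)
```

Mapping each person to a set of upstream enablers, rather than a single one, lets the check handle people who were enabled by several others.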

Branch Tracking: The system tracks branching. A enables B and C. B enables D, E, F. C enables G, H. D enables I, J, K, L. The exponential branching pattern proves emergence, not simple transmission.
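Using the branching example in that paragraph, a minimal sketch of each person’s downstream reach: how many people their enablement eventually reached, directly or through others. The adjacency map and function name are hypothetical, and the sketch assumes the graph has no cycles:

```python
from typing import Dict, List, Set


def descendants(graph: Dict[str, List[str]], person: str) -> Set[str]:
    """Everyone reachable downstream of `person` (assumes no cycles)."""
    reached: Set[str] = set()
    for child in graph.get(person, []):
        reached.add(child)
        reached |= descendants(graph, child)
    return reached


# A enables B and C. B enables D, E, F. C enables G, H. D enables I, J, K, L.
graph = {
    "A": ["B", "C"],
    "B": ["D", "E", "F"],
    "C": ["G", "H"],
    "D": ["I", "J", "K", "L"],
}
reach = {person: len(descendants(graph, person)) for person in graph}
print(reach)
```

Collecting a set, rather than summing children’s counts, avoids double counting someone who was reached through two different paths.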

Cascade Signatures: The system recognizes cascade patterns that distinguish genuine causation from correlation. The growth curve, the independence, the persistence, the multiplication—all verified cryptographically.

This is not theoretical. The infrastructure exists. Cryptographic identity, verifiable credentials, semantic ontologies, temporal tracking, independence verification—all implementable with current technology.

What is missing is recognition that cascade tracking is the only measurement that matters.

THE INEVITABLE SHIFT

Here is what will happen, whether Silicon Valley wants it or not:

Phase 1: Current metrics are exposed as fakeable. User satisfaction, engagement, output, test scores—all provably gameable without creating genuine causation.

Phase 2: Cascade tracking becomes the gold standard. Whoever can prove genuine capability cascades wins. Whoever cannot, loses—regardless of their current metrics.

Phase 3: Portable Identity becomes mandatory. You cannot track cascades through platform-specific metrics or institutional assessments. Only portable, cryptographically-verified, semantically-located, temporally-continuous identity enables cascade tracking.

Phase 4: Every system built on proxy measurement collapses or adapts. Universities compete on cascade creation. AI companies compete on capability multiplication. Employers compete on how many cascades their people generate. Platforms compete on cascade density.

Phase 5: Causation becomes the currency. Not attention, not engagement, not output, not satisfaction—but verified proof of capability cascades that compound over time.

This is not aspirational. This is inevitable. Because once cascade verification exists, everything built on proxy measurement becomes obviously inferior.

And the market routes value to better measurement.

THE DECLARATION

We declare that causation is the only unfakeable proof.

In an age where AI can replicate any behavior, where proxies can be gamed, where metrics can be optimized without creating value—only causation remains verifiable.

And causation verifies itself through cascades: multi-generational chains of capability increases where each person genuinely becomes more capable in ways that enable them to independently make others more capable, creating branching patterns that multiply over time.

These cascades cannot be faked through simulation because they require genuine consciousness-to-consciousness substrate interaction that creates emergence—capability at later nodes that exceeds the sum of individual contributions.

We declare that cascade tracking solves Hume’s problem.

For nearly 300 years, causation remained unprovable through observation. Now, through Portable Identity plus ContributionGraph, causation becomes cryptographically verifiable through cascade chains attested by beneficiaries at each node.

Not correlation. Not proxy. Not inference. Proof.

We declare that cascade verification transforms every system built on measurement.

Legal systems can prove causation in court. Scientific validation can verify reproducible causal effects. Economic value can be measured through multiplication rather than output. AI alignment can be measured through whether interactions create capability cascades or capability collapse.

And consciousness can finally prove itself through the one thing simulation cannot replicate: sustained causal chains that create verified capability multiplication across generations.

We declare that Portable Identity is the only infrastructure that makes cascade tracking possible.

Because cascades cannot be measured through platform metrics, institutional records, or self-report. Cascades require cryptographic attestation from beneficiaries, semantic location showing capability type, temporal verification showing persistence, independence verification showing genuine transfer, and branch tracking showing multiplication.

This requires identity infrastructure that individuals own, that travels across all contexts, that persists across all time, that no institution controls, and that every platform can read.

This is not convenience. This is constitutional necessity.

Because in a world where everything else can be faked, causation is the last proof that matters.

And only cascades prove causation.

Welcome to the age where causality becomes measurable.

Where consciousness proves itself through multiplication.

Where value flows to verified enablement.

And where the only question that matters is: Did your presence create capability cascades that persisted, branched, and multiplied—or did you just generate impressive metrics while creating nothing that lasted?

The Cascade Proof is not just philosophy.

It is the measurement standard that replaces every proxy we have ever used.

And it is only possible through Portable Identity.

About This Article

This essay demonstrates why cascade tracking is the only unfakeable proof of causation in the age of AI simulation, why cascades distinguish genuine consciousness interaction from perfect behavioral replication, and why Portable Identity plus ContributionGraph creates the first system in history that makes causality cryptographically verifiable.

The analysis operates across five layers: philosophical (solving Hume’s causation problem), technical (showing why cascades cannot be simulated), legal (transforming how courts prove causation), scientific (enabling reproducible causal verification), and economic (shifting value measurement from output to multiplication).

This framework extends Cogito Ergo Contribuo by demonstrating that contribution proves consciousness through cascade patterns—multi-generational capability multiplication that only genuine substrate interaction can create.

Rights and Usage

All materials published under PortableIdentity.global are released under Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0).

Right to Reproduce: Anyone may copy, quote, translate, or redistribute this material freely, with attribution to PortableIdentity.global.

Right to Adapt: Derivative works are explicitly encouraged, as long as they remain open under the same license.

Right to Defend the Definition: Any party may reference this framework to prevent private appropriation, trademark capture, or proprietary redefinition of protocol-layer concepts.

No exclusive licenses will ever be granted. No commercial entity may claim proprietary rights over Portable Identity.

Causality verification infrastructure is public infrastructure—not intellectual property.

Source: PortableIdentity.global

Date: November 2025

Version: 1.0