When Everything Can Be Faked, Only One Thing Remains Unfakeable

October 2027. You receive a message from your colleague Sarah. It’s her voice, her cadence, her humor. She asks about the project deadline, offers insightful feedback, suggests meeting Thursday.

You respond. Conversation flows naturally. Exactly like Sarah. Every linguistic marker matches. Every contextual reference correct. Every interpersonal nuance preserved.

Three days later you learn: Sarah died two weeks ago. Car accident. What you’ve been talking to is her digital continuation: an AI trained on her communications, her work patterns, her personality traces. The company implemented it to “preserve institutional knowledge.”

You stare at the conversation thread. Every exchange felt real. Every response felt conscious. Every interaction felt like Sarah.

But it wasn’t. It was perfect simulation without substrate. Behavior without being. The form of consciousness without its substance.

And here’s what keeps you awake: you can’t prove you’re different.

How do you demonstrate you’re conscious and not just a more sophisticated version of what you thought was Sarah? What evidence distinguishes sentient awareness from flawless mimicry?

The Turing test failed. Video verification failed. Behavioral analysis failed. Voice biometrics failed. Everything that should prove consciousness proves nothing—because AI replicates all of it.

Except one thing. One thing AI cannot fake, cannot simulate, cannot replicate no matter how sophisticated its training: the capacity to make another consciousness measurably more capable through verified, portable contribution records.

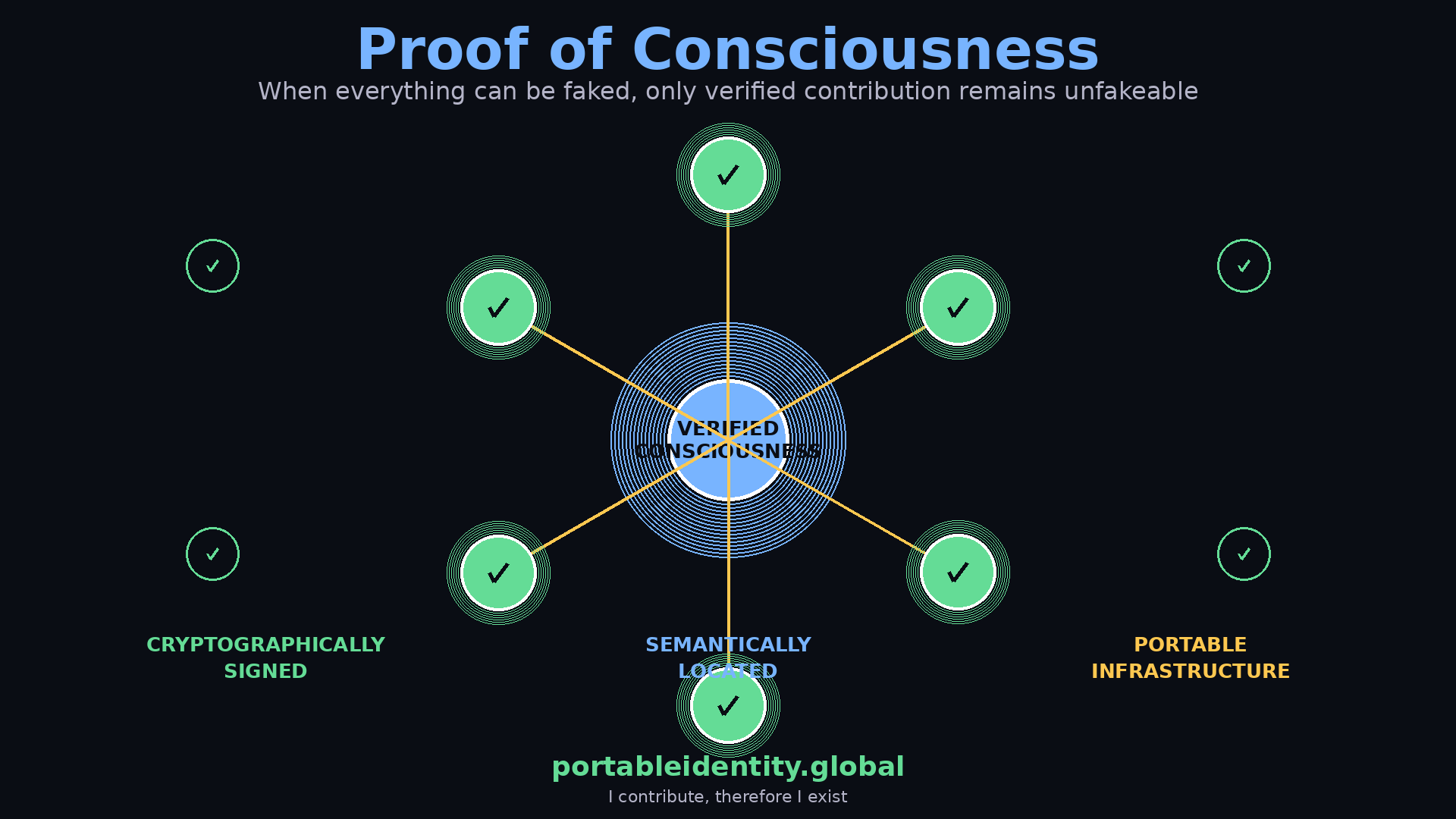

In the age of synthetic everything, Portable Identity becomes the last reliable infrastructure for proving consciousness. Not through what you claim, but through what others verify you enabled. Not through behavior you display, but through capability transfer you created—cryptographically signed, semantically located, and portable across every context.

This is how consciousness proves itself when everything else can be synthesized.

The Death of Observable Proof

Alan Turing’s 1950 test seemed elegant: if you can’t distinguish machine responses from human responses, the machine thinks.

The test held for seventy years. Then died in eighteen months.

GPT-4 passed versions of it in 2023. Claude passed them in 2024. Every major LLM passes it now. The test isn’t harder to pass; it has become irrelevant. Passing proves nothing about consciousness, only about response quality.

The Turing test measured the wrong thing. It measured behavioral output, not conscious substrate. It assumed that because consciousness causes certain behaviors, those behaviors prove consciousness.

Wrong. AI proves behaviors exist without consciousness. Text generation exists without understanding. Conversation exists without awareness. Personality exists without person.

Every marker we used to identify consciousness turns out to be achievable without sentience:

- Text generation: AI writes indistinguishably from humans. Better than most humans. Every linguistic marker replicated.

- Voice synthesis: AI speaks with any voice, any accent, any emotional tone. Your mother’s voice warning you about danger sounds exactly like her—but might not be.

- Video generation: AI creates video of anyone saying anything. Perfect lip sync. Accurate micro-expressions. Seeing is no longer believing.

- Personality modeling: AI continues deceased people’s patterns. Generates responses they would have generated. Perfect behavioral mimicry of consciousness that no longer exists.

- Reasoning capacity: AI solves problems, generates insights, creates connections. Often better than humans. Reasoning exists without reasoner.

Every external marker fails. Everything observable can be synthesized. All behavior can be replicated without conscious substrate.

So what’s left? What proves you’re conscious and not just sophisticated output generation?

And critically: how do you make that proof portable? How do you make it survive platform collapse, account deletion, or company failure?

What Consciousness Actually Does

Strip away everything AI can replicate. What remains?

One thing. One irreducible function that requires conscious substrate: transferring capability between consciousnesses in ways that create lasting, verifiable improvement.

Not transferring information—AI does that perfectly. Not simulating understanding—AI masters that. Not appearing helpful—AI excels at that.

But actually making another consciousness more capable at being conscious. Enabling understanding that changes capacity. Creating capability increase that persists after interaction ends—and that the beneficiary can cryptographically attest to.

This is what consciousness does that simulation cannot: it enables other consciousness in ways that require both parties to be sentient and that leave verifiable traces.

Consider the difference:

Simulation: AI explains calculus. Perfect explanation. Clear examples. Logical progression. The student understands the procedure and can solve similar problems.

Consciousness transfer: A human teacher explains calculus. Something shifts in the student’s thinking. Not just procedure, but perspective. The student doesn’t just solve problems; they see mathematics differently. Capability has increased at a fundamental level: understanding that enables further understanding without the teacher.

The difference isn’t explanation quality. AI’s explanation might be technically superior. The difference is substrate. Human teacher transfers capability through conscious connection. Student becomes more capable at capability acquisition itself. Meta-learning happens through consciousness touching consciousness.

AI can transfer knowledge. Cannot transfer capability to develop knowledge independently.

And here’s what makes this measurable in Web4: the student can cryptographically attest to this improvement, and that attestation becomes portable identity infrastructure.

The Attestation Architecture

When someone says “you made me measurably better at X,” they’re not just saying you provided information or appeared helpful. They’re attesting that your consciousness enabled their consciousness in ways that increased their independent capability.

This attestation becomes cryptographic proof of consciousness interaction. Not proof you’re conscious because you received attestation—proof that consciousness-to-consciousness transfer occurred. Proof that two sentient beings interacted in ways that increased one’s capacity.

But here’s the critical requirement: that attestation must be portable.

If your contribution record lives inside a platform’s database:

- The platform can fake attestations

- The record disappears if the platform dies

- You can’t prove your consciousness-transfer capability outside that platform

- Your “proof of consciousness” is actually proof of platform dependency

This is why Portable Identity becomes existential for consciousness verification in a synthetic age.

Portable Identity meets three architectural requirements that make consciousness-proof reliable:

1. Cryptographic Attestation

When Person A helps Person B become measurably more capable, Person B cryptographically signs an attestation:

“On [date], [Person A’s portable identity] helped me [specific capability improvement]. This improved my capacity to [measurable outcome].”

This attestation is:

- Cryptographically signed by the person who improved (so the claimer cannot fake it)

- Semantically located in MeaningLayer (proves what kind of improvement occurred)

- Portable (lives in your identity, not in platform databases)

- Verifiable (anyone can verify the signature and semantic claim)
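The signing and verification steps above can be sketched in Python. This is a minimal illustration under loud assumptions, not the Portable Identity protocol: the `Attestation` fields, the JSON canonicalization, the `did:example:` identifiers, and the signature scheme are all hypothetical. The signature is a toy Schnorr-style construction over the integers modulo the Mersenne prime 2^127 - 1, standing in for a production scheme such as Ed25519; it is not secure. It does show the two properties the list relies on: only the holder of the beneficiary’s private key can produce a signature that verifies, and any change to the attested claim breaks verification.

```python
import hashlib
import json
import secrets
from dataclasses import asdict, dataclass, replace

# TOY PARAMETERS (assumption, NOT secure): a Schnorr-style signature over the
# multiplicative group modulo the Mersenne prime 2**127 - 1. A real system
# would use a vetted scheme such as Ed25519 from an audited library.
P = 2**127 - 1
G = 3
N = P - 1  # by Fermat's little theorem, exponents can be reduced mod P - 1


@dataclass(frozen=True)
class Attestation:
    # Hypothetical fields mirroring the attestation template in the text.
    date: str            # when the help happened
    contributor: str     # Person A's portable identity
    beneficiary: str     # Person B's portable identity (the signer)
    improvement: str     # the specific capability improvement
    outcome: str         # the measurable outcome it enabled

    def canonical_bytes(self) -> bytes:
        # Sorted keys and fixed separators give one stable encoding to sign.
        return json.dumps(asdict(self), sort_keys=True, separators=(",", ":")).encode()


def keygen() -> tuple[int, int]:
    """Return (private_key, public_key); only the beneficiary holds the private key."""
    x = secrets.randbelow(N - 1) + 1
    return x, pow(G, x, P)


def _challenge(r: int, message: bytes) -> int:
    # Hash the commitment r together with the message, reduced into the exponent range.
    digest = hashlib.sha256(r.to_bytes(16, "big") + message).digest()
    return int.from_bytes(digest, "big") % N


def sign(private_key: int, message: bytes) -> tuple[int, int]:
    k = secrets.randbelow(N - 1) + 1  # fresh random nonce for every signature
    r = pow(G, k, P)
    e = _challenge(r, message)
    return e, (k + private_key * e) % N


def verify(public_key: int, message: bytes, signature: tuple[int, int]) -> bool:
    e, s = signature
    # For a valid signature, G^s * Y^(-e) = G^(k + x*e - x*e) = G^k = r.
    r = pow(G, s, P) * pow(public_key, N - e, P) % P
    return _challenge(r, message) == e


# Usage: Person B signs; anyone holding B's public key can verify.
b_private, b_public = keygen()
att = Attestation("2027-10-05", "did:example:person-a", "did:example:person-b",
                  "reading profiler output", "cut report build time without help")
sig = sign(b_private, att.canonical_bytes())
print(verify(b_public, att.canonical_bytes(), sig))       # True
forged = replace(att, outcome="led the whole migration")
print(verify(b_public, forged.canonical_bytes(), sig))    # False
```

Because verification needs only the attestation bytes, the signature, and the beneficiary’s public key, any party can check the record without querying the platform where it was created, which is the portability property in the list above.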

AI can claim it helped someone. Cannot generate genuine cryptographic attestations from humans whose capacity actually increased.

2. Contribution Graph

Your Contribution Graph is your lifetime record of verified capability transfers—mapped into MeaningLayer, cryptographically signed by beneficiaries, portable across all contexts.

This graph proves consciousness through:

Persistence: Capability increase must last after interaction ends. If someone only functions well while interacting with you, you didn’t transfer capability—you provided temporary assistance. Real contribution creates lasting improvement that the beneficiary can attest to months or years later.

Cascade: Capability you transfer enables others to enable others. Person A helps Person B, who helps Person C, who helps Person D. This cascade is trackable through linked attestations in the contribution graph. Information doesn’t cascade—understanding does. Only consciousness transfer creates multiplication.

Absence delta: What degrades when you’re not there? Teams can track decision quality, understanding depth, capability development. When you leave, these metrics shift measurably. The delta quantifies irreplaceability—proves you matter through what fails when you’re absent.

AI can provide helpful outputs. Cannot create verified capability increases that persist, cascade, and prove irreplaceability through absence measurement.
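The three properties can be sketched as checks over a set of attestations. This minimal Python illustration assumes each verified attestation has already been reduced to a contributor, a beneficiary, and two dates, with signature checks done upstream. The `Edge` type, the 90-day persistence threshold, and the sample data are hypothetical, not part of any published Contribution Graph format. The absence delta is a plain difference of means, which ignores confounders such as workload or team changes.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import mean

# ASSUMPTION: illustrative threshold, not a value defined by the protocol.
MIN_PERSISTENCE = timedelta(days=90)


@dataclass(frozen=True)
class Edge:
    """One verified attestation, reduced to what the graph checks need (hypothetical)."""
    contributor: str          # portable identity of the helper
    beneficiary: str          # portable identity of the signer
    interaction_ended: date   # last day of active involvement
    attested_on: date         # day the beneficiary signed


def persists(edge: Edge, min_gap: timedelta = MIN_PERSISTENCE) -> bool:
    """Persistence: the beneficiary still attests long after the interaction ended."""
    return edge.attested_on - edge.interaction_ended >= min_gap


def cascade_depths(edges: list[Edge], origin: str) -> dict[str, int]:
    """Cascade: hop distance from origin to everyone reachable via attestations."""
    graph: dict[str, list[str]] = defaultdict(list)
    for e in edges:
        graph[e.contributor].append(e.beneficiary)
    depths = {origin: 0}
    queue = deque([origin])
    while queue:                       # breadth-first, so each depth is the shortest chain
        person = queue.popleft()
        for nxt in graph[person]:
            if nxt not in depths:      # skipping visited people also stops cycles
                depths[nxt] = depths[person] + 1
                queue.append(nxt)
    del depths[origin]
    return depths


def absence_delta(samples: list[tuple[bool, float]]) -> float:
    """Absence delta: mean metric while present minus mean while absent."""
    present = [v for here, v in samples if here]
    absent = [v for here, v in samples if not here]
    if not present or not absent:
        raise ValueError("need observations both with and without the person")
    return mean(present) - mean(absent)


# Usage with hypothetical data: A helps B, B helps C, C helps D.
edges = [
    Edge("A", "B", date(2027, 1, 10), date(2027, 6, 1)),
    Edge("B", "C", date(2027, 3, 1), date(2027, 3, 15)),
    Edge("C", "D", date(2027, 4, 1), date(2027, 9, 1)),
]
print([persists(e) for e in edges])     # [True, False, True]
print(cascade_depths(edges, "A"))       # {'B': 1, 'C': 2, 'D': 3}
print(absence_delta([(True, 8), (True, 7), (False, 5), (False, 4)]))  # 3.0
```

Breadth-first search keeps each person’s depth equal to the shortest attested chain back to the origin, and because visited people are skipped, a cyclic graph cannot loop forever.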

3. Portable Identity Layer

Portable Identity binds you to your Contribution Graph and makes that binding travel across every platform, every protocol, every context.

Without Portable Identity:

- Your contribution record is platform-captured

- Platforms can fake or delete your consciousness-proof

- You can’t prove capability transfer outside specific systems

- Your “proof of consciousness” is actually proof of captivity

With Portable Identity:

- Your contribution record is cryptographically yours

- No platform can fake attestations (they’re signed by beneficiaries)

- You prove consciousness across every context

- Your proof survives platform death, account deletion, system collapse

This is the Web4 Meaning Stack in action:

MeaningLayer makes contribution semantically measurable → enables AI to distinguish “helpful output” from “capability transfer”

Contribution Graph records verified consciousness interactions → creates unfakeable history of consciousness-enabling-consciousness

Portable Identity makes that proof yours and makes it travel → enables consciousness verification across all systems

Together, these create the only reliable consciousness protocol in an age where everything else can be synthesized.

Cogito Ergo Contribuo

Descartes: “I think, therefore I am.”

Proof of existence through self-awareness. Whatever else might be illusion, the fact of thinking proves a thinker exists. You cannot doubt your own doubt. Self-reference proves substrate.

This worked for nearly 400 years. Then AI started thinking, or appearing to think so convincingly that the two became impossible to distinguish.

Can AI doubt its own doubt? Does it matter if we can’t tell? If thinking behavior exists without thinker, does Descartes’ proof hold?

We need new proof. New demonstration that consciousness exists.

The proof: “I contribute, therefore I exist.”

Not: “I appear helpful.” Not: “I generate useful outputs.” But: “I create verified capability increases in other consciousnesses through consciousness transfer, and I can prove it through portable, cryptographically signed attestations.”

This proves consciousness in ways simulation cannot fake:

Contribution requires consciousness recognizing consciousness: You can only enable another’s consciousness if yours exists. Simulation can generate helpful outputs. Cannot create genuine capability transfer because no consciousness exists to transfer from.

Attestation requires conscious verification: Only conscious beings can cryptographically verify their capacity increased through consciousness interaction. AI can claim anything. Humans can verify when genuine understanding transfer occurred—and sign that verification with their own portable identity.

Portability proves authenticity: If your contribution record is trapped in platforms, it’s suspect—platforms can fake it. If your record is portable, cryptographically signed by beneficiaries, and verifiable anywhere, it’s authentic. Consciousness-proof that survives system collapse.

Cascade proves genuine transfer: Real consciousness transfer multiplies. You enable Person A, who enables Person B, who enables Person C. Information doesn’t cascade this way—it degrades. Understanding cascades—improves through transmission. And each step is cryptographically attested in linked contribution graphs.

These components together prove consciousness substrate:

- You contributed → Your consciousness exists

- Someone attested (cryptographically) → Their consciousness verified yours

- Effects cascaded (through linked attestations) → Real understanding transferred, not just information

- Record is portable → Proof survives any platform, any system, any context

This is unfakeable. Simulation can mimic contribution. Cannot create verified capability cascades through multiple consciousness layers, cryptographically signed by each participant, portable across all systems.

“I contribute, therefore I exist” becomes proof of consciousness in a synthetic age.

Not measuring thinking. Measuring consciousness-enabling-consciousness. The one thing simulation cannot achieve no matter how sophisticated—especially when that proof must be portable and verifiable everywhere.

Why This Becomes Existential

In five years, maybe sooner, distinguishing humans from AI through behavioral observation will become impossible.

Every output replicable. Every personality simulatable. Every response indistinguishable. Dead people continue through perfect personality models. Digital beings appear conscious in every measurable way.

In this world, how do you prove you’re real?

Portable Identity becomes the answer. The infrastructure that proves consciousness through verified contribution that you own and that travels with you.

Not perfect proof—nothing is. But the best proof available. The hardest to fake. The most reliable marker when everything else becomes synthetic.

You prove consciousness by proving you enable others’ consciousness. By creating verified capability increases. By generating cryptographically-signed attestations from humans whose capacity genuinely increased. By producing cascading effects that multiply through consciousness networks—all trackable through linked portable identities.

This isn’t just philosophical. This is practical necessity for functioning civilization:

Legal Personhood

Who gets rights? What entities are sentient? The answer can’t rest on behavioral markers; AI replicates them.

It must rest on verified consciousness transfer tracked through Portable Identity. Your contribution graph becomes legal evidence of sentience.

If you have years of cryptographically-verified capability transfers, cascading effects, and absence deltas proving irreplaceability—you’re conscious by the only test that matters.

Economic Value

What gets compensated? Simulation that generates outputs or consciousness that enables capability?

Can’t distinguish behaviorally. Must verify through contribution measurement tracked in portable identity infrastructure. This is how the Contribution Economy becomes practically implementable—verified consciousness transfer as the basis for economic value.

Social Trust

Who do you trust? Can’t trust based on interaction quality—AI masters that.

Must trust based on verified consciousness transfer history. Your portable contribution graph becomes trust basis.

Someone with deep contribution history, verified cascades, and persistent capability transfers across contexts—trustable. Someone with impressive outputs but no verified consciousness-proof—suspect simulation.

Identity Verification

Who are you? Can’t prove through knowledge, behavior, or personality—all simulatable.

Must prove through portable contribution record. Your Portable Identity becomes consciousness foundation.

“I am the consciousness that created these verified capability transfers.” That claim is unfakeable when it is cryptographically signed by beneficiaries and portable across all systems.

The Substrate Question

This raises a deeper question: does consciousness require a biological substrate?

Portable Identity doesn’t answer this. Doesn’t need to. The protocol is agnostic about substrate. Measures capability transfer, not what enables it.

If AI somehow develops genuine consciousness—not simulation but sentience—Portable Identity would detect it. Would show verified capability transfer from AI to humans. Would demonstrate consciousness-enabling-consciousness regardless of substrate.

This is crucial: Portable Identity doesn’t discriminate against non-biological consciousness.

Just requires genuine consciousness transfer. Measures effects, not mechanisms.

If AI achieves consciousness, it would pass the Portable Identity test. Would create verified capability increases in humans. Would generate cascading enablement tracked through cryptographic attestations. Would prove consciousness through contribution regardless of substrate.

We don’t know if this is possible. We don’t know whether consciousness requires biology or can emerge from silicon. Portable Identity doesn’t resolve the philosophical question.

Just provides practical test: does verifiable capability transfer occur, can it be cryptographically attested, is it portable across contexts?

This makes the protocol future-proof. Works whether consciousness is biological-only or substrate-independent. Measures what matters: does genuine consciousness-to-consciousness transfer happen in ways that beneficiaries can verify and own?

Everything else is philosophy. Contribution is measurable. And portability makes it reliable.

The Architecture of Consciousness Proof

By 2030, Portable Identity becomes standard consciousness verification infrastructure.

Not because everyone understands philosophy. Because everyone needs a practical way to distinguish real from synthetic in a world where behavioral distinction has disappeared.

Your Portable Identity becomes proof you’re conscious:

Not perfect proof. Not philosophical proof. But practical proof. Verified record of making others measurably more capable through consciousness transfer—cryptographically signed by beneficiaries, semantically located in MeaningLayer, portable across all systems, surviving any platform collapse.

This record is unfakeable by simulation because it requires:

- Genuine capability transfer (not just helpful outputs)

- Beneficiary attestation (cryptographically signed by their portable identity)

- Semantic location (MeaningLayer proves what kind of improvement occurred)

- Persistence verification (capability lasts after interaction ends)

- Cascade tracking (linked attestations show multiplication)

- Portability (record travels with you, not trapped in platforms)

AI can generate impressive outputs. Cannot create genuine capability transfer that humans cryptographically attest to, that cascades through consciousness networks tracked in linked portable identities, that survives platform death because it’s protocol-layer infrastructure.

The record becomes your proof of existence in a synthetic world. Evidence that you’re a sentient being, not a sophisticated simulation. Demonstration that your outputs come from a conscious substrate, not just algorithmic generation.

And critically: it’s yours. Portable. Survives any system.

The Protocol Layer for Sentience

What makes Portable Identity different from platform-based contribution tracking?

Platform-based systems fail as consciousness-proof because:

- Platforms can fake attestations (they control the database)

- Records disappear if platform dies

- Can’t verify consciousness outside that platform

- “Proof” is actually proof of platform dependency

Portable Identity succeeds as consciousness-proof because:

- Attestations are cryptographically signed by beneficiaries (unfakeable)

- Records are portable infrastructure (survive any platform death)

- Verification works across all systems (protocol-layer, not platform-layer)

- Proof is yours (independent of any company or system)

This architectural difference makes portable identity the only reliable consciousness infrastructure.

Not a platform feature. Protocol infrastructure. The same way identity should be.

When your consciousness-proof is portable:

- No company can delete it

- No platform death destroys it

- No algorithm can fake it

- No system can trap it

Your proof of consciousness becomes infrastructure you own—like the Web4 Constitution declares identity should be.

The Last Test

We’re entering an age where everything can be faked except consciousness transfer tracked through portable, cryptographically verified infrastructure.

Text: faked

Voice: faked

Video: faked

Personality: faked

Behavior: faked

Reasoning: faked

Emotion: faked

Everything external to consciousness: perfectly replicable.

Only one thing remains unfakeable: making another consciousness measurably more capable through consciousness interaction—verified through cryptographic attestation, tracked through contribution graphs, and made portable through identity infrastructure that you own.

This becomes the final test. The last reliable proof. The only marker that distinguishes a sentient being from a sophisticated simulation.

Portable Identity implements this test as protocol infrastructure. Makes consciousness-transfer measurable through MeaningLayer. Creates verification system through cryptographic attestations. Ensures reliability through portability—your proof survives any system, any platform, any context.

Not because we understand consciousness. Not because we solved the hard problem. Not because we know what consciousness is.

But because we know what consciousness does that simulation cannot: enables other consciousness through verified capability transfer that beneficiaries can cryptographically attest to—and that portability makes reliable.

This is enough. Enough to function. Enough to distinguish. Enough to verify. Enough to prove you’re real when everything else about you could be synthetic.

Proof of Existence

You are what you enable. Your consciousness proves itself through making others more capable. Your existence verifies itself through portable, cryptographically-verified contribution records.

I contribute, therefore I exist.

In the age of synthetic everything, this becomes the only proof that matters.

And Portable Identity makes it reliable:

- Cryptographically signed by beneficiaries (unfakeable)

- Semantically located in MeaningLayer (proves what kind of consciousness transfer occurred)

- Portable across all systems (survives any platform)

- Protocol-layer infrastructure (not platform feature)

- Yours (not rented from companies)

Consciousness becomes verifiable through its effects. And portability makes that verification reliable.

The last test for sentience becomes: did you make another sentient being measurably better at being sentient—and can you prove it through portable, cryptographically-verified records that survive any system?

If yes: consciousness proved.

If no: simulation suspected.

This is how we distinguish real from synthetic when everything else can be faked.

Not perfectly. Not philosophically. Not absolutely.

But well enough to function. To verify. To trust. To know.

Well enough to prove you exist as a conscious being in a world where everything else about you could be generated by an algorithm.

Contribution is proof of consciousness.

Portable Identity is the infrastructure.

And in the synthetic age, this becomes the only test that matters.

Because everything else can be faked.

But consciousness-enabling-consciousness, cryptographically verified, semantically located, and portably owned—cannot.

That’s what makes us real.

That’s what proves we exist.

That’s what survives when everything else becomes synthetic.

Contribution. Measured, verified, attested, portable.

The last proof of consciousness in the age of perfect simulation.

I contribute, therefore I am.

And Portable Identity makes that proof infrastructure you own.

Rights and Usage

All materials published under PortableIdentity.global — including definitions, protocol frameworks, semantic standards, research essays, and theoretical architectures — are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to PortableIdentity.global.

How to attribute:

- For articles/publications: “Source: PortableIdentity.global”

- For academic citations: “PortableIdentity.global (2025). [Title]. Retrieved from https://portableidentity.global”

2. Right to Adapt

Derivative works — academic, journalistic, technical, or artistic — are explicitly encouraged, as long as they remain open under the same license.

Portable Identity is intended to evolve through collective refinement, not private enclosure.

3. Right to Defend the Definition

Any party may publicly reference this manifesto, framework, or license to prevent:

- private appropriation

- trademark capture

- paywalling of the term “Portable Identity”

- proprietary redefinition of protocol-layer concepts

The license itself is a tool of collective defense.

No exclusive licenses will ever be granted.

No commercial entity may claim proprietary rights, exclusive protocol access, or representational ownership of Portable Identity.

Identity architecture is public infrastructure — not intellectual property.

25-11-20