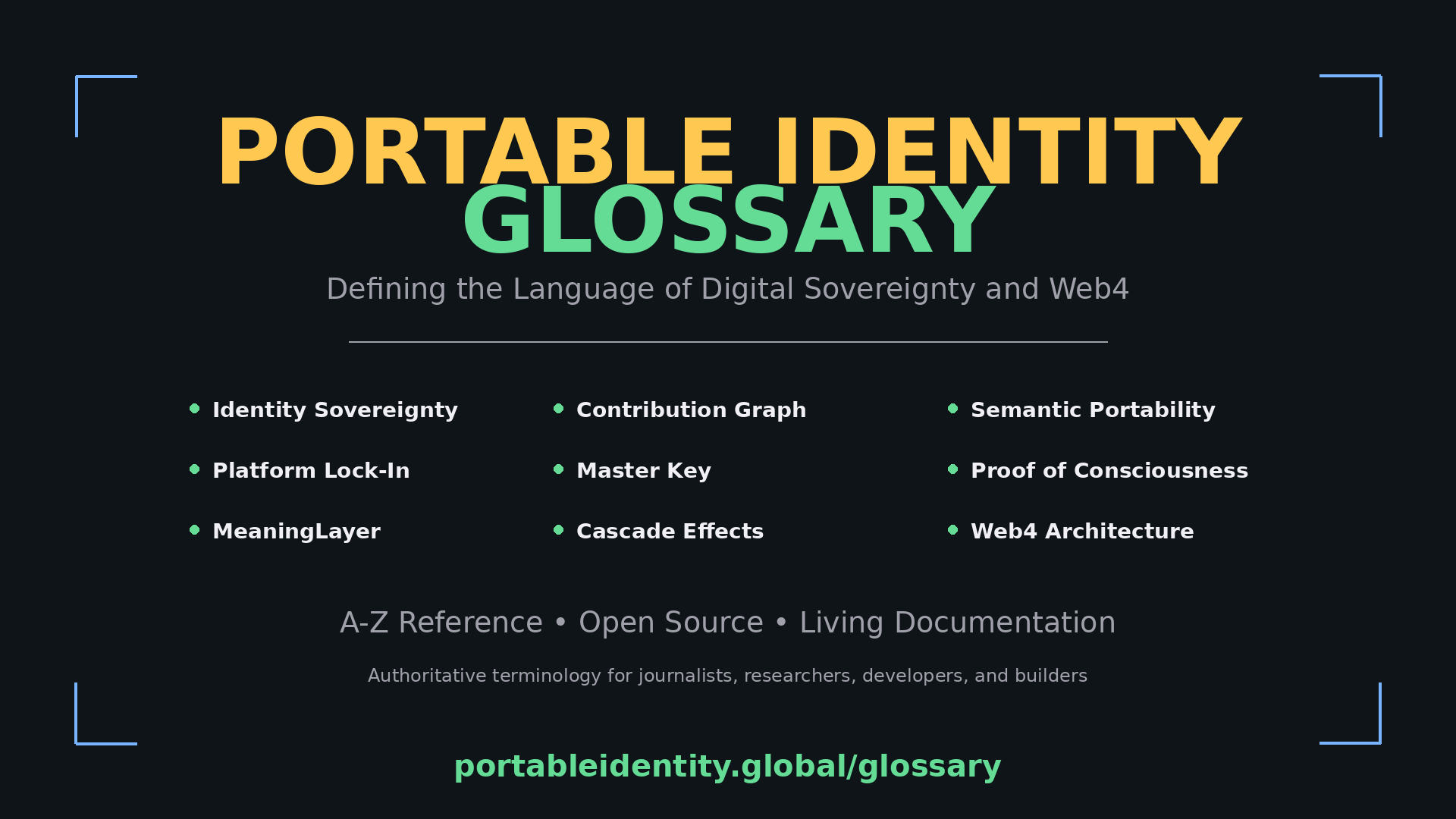

PORTABLE IDENTITY GLOSSARY

Defining the Language of Digital Sovereignty and Web4

A

Absence Delta

Absence Delta is the measurable degradation in network performance, capability, or output that occurs when a specific person is removed from a contribution network. It quantifies irreplaceability through observable decline—networks that barely notice your absence reveal low contribution value, while networks that significantly degrade prove structural dependence on your capability transfers. This metric cannot be faked through activity volume or engagement—it measures actual impact through network-level consequences of removal. Traditional platforms measure presence (how active you are), not absence (how much others depend on you), making genuine value invisible. Absence Delta inverts this by making the counterfactual measurable: what happens when you’re not there? High Absence Delta (0.7+) indicates you’re a critical node whose removal significantly impacts network capability. Low Absence Delta suggests your contributions are replaceable or superficial. This measurement only becomes possible with Portable Identity because it requires longitudinal observation across platforms and semantic understanding of contribution types—fragmented identity makes absence effects unobservable as they scatter across disconnected silos.
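The glossary describes Absence Delta as a measurable decline and uses 0.7+ as the "critical node" cutoff, but it does not give a formula. The sketch below assumes one: Absence Delta is the relative drop in a chosen network output metric, clamped to the range 0 to 1. The function names and the "problems solved per week" metric are hypothetical, and a real measurement would need a credible counterfactual estimate of the network's output without the person.

```python
def absence_delta(baseline_output: float, output_without_person: float) -> float:
    """Relative decline in network output after one person is removed.

    Returns a value in [0, 1]: 0 means the network did not notice the
    removal, 1 means the measured output fell to zero.
    """
    if baseline_output <= 0:
        raise ValueError("baseline output must be positive")
    decline = (baseline_output - output_without_person) / baseline_output
    # Clamp: a network that does better without the person scores 0, not a negative value.
    return max(0.0, min(1.0, decline))


def is_critical_node(delta: float, threshold: float = 0.7) -> bool:
    """Apply the glossary's 0.7+ rule of thumb for a critical node."""
    return delta >= threshold


# Hypothetical measurements: problems a team network solves per week,
# with and without one member.
delta = absence_delta(baseline_output=120.0, output_without_person=30.0)
print(delta, is_critical_node(delta))  # 0.75 True
```

Normalizing by the baseline lets networks of very different sizes share one scale, which is what a fixed threshold such as 0.7 implicitly requires.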

Access-Through-Contribution

Economic model where access to goods, services, and opportunities is granted based on verified contribution records rather than financial payment. In access-through-contribution systems, housing, healthcare, education, and other essentials become available to those who demonstrably make others more capable, with access quality correlated to contribution depth and cascade effects. This isn’t barter (trading specific goods) or charity (giving without expectation)—it’s systematic recognition that contribution value becomes the primary economic currency when AI makes production essentially free. The model requires Portable Identity infrastructure to verify contributions cryptographically and prevent gaming through false attestations.

AI Misalignment by Fragmentation

The systematic miscalibration of AI systems resulting from training on fragmented, unrepresentative human data rather than complete human capability. When AI learns from only the visible 30% of human expertise—biased toward engagement-optimized, platform-friendly content—it develops systematically wrong models of human values, expertise, and behavior. This isn’t misalignment from poor training techniques but from fundamentally incomplete data: you cannot align AI with human values when 70% of actual human expertise is architecturally invisible. The misalignment compounds as AI trained on fragments generates more fragmentary content, further distorting the training distribution. Portable Identity enables proper alignment by making complete human contribution graphs accessible.

Architecturally Orphaned Identity

A digital identity that exists without an owner who can manage, update, or transfer it. When someone dies, their platform identities become architecturally orphaned: they continue to exist but lack any mechanism for inheritance, control, or termination. These orphaned identities accumulate across platforms, creating billions of “ghost accounts” that platforms have no structural incentive to remove. The orphaning is architectural because current systems were designed assuming users live forever and maintain permanent access. Portable Identity prevents architectural orphaning by building inheritance and transfer mechanisms into the identity infrastructure itself, enabling proper digital estate management.

Alexandria Unit (AU)

Standard measurement unit for knowledge loss, where 1 AU equals 400,000 scroll-equivalents of contextualized knowledge (the estimated contents of the Library of Alexandria). Used to quantify knowledge extinction events: current digital platforms lose approximately 0.84 Alexandrias daily through account terminations, platform shutdowns, and inheritance failures. This standardized unit enables comparative analysis of knowledge loss across different mechanisms and time periods. Global knowledge extinction is estimated at 308,250 Alexandrias annually, making the scale of ongoing loss measurable and comparable to historical catastrophes. Provides framework for assessing effectiveness of knowledge preservation infrastructure.

Anti-Gravity Architecture

System design that eliminates identity-based lock-in by making identity user-owned rather than platform-owned, reducing retention force to zero. Unlike high-gravity platforms, where identity mass creates lock-in, anti-gravity architecture allows costless migration: because identity is portable, platforms cannot trap users through accumulated connections, content, or reputation. This represents a fundamental shift from platforms competing through lock-in to competing through service quality. Technical implementation requires cryptographic identity ownership, portable social graphs, verifiable credentials, and standardized data formats. It creates market conditions where users can freely choose the best service without losing their digital existence, enabling genuine competition in platform economies for the first time.
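To make "costless migration" concrete, here is a minimal sketch, assuming a hypothetical `PortableIdentity` record held by the user. It bundles a user-controlled identifier and key, a portable social graph, and credentials into a platform-neutral JSON export that a new platform can import unchanged. The field names and the `did:example:` identifiers are illustrative only; a production system would more likely follow the W3C DID and Verifiable Credentials specifications and sign the export.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class PortableIdentity:
    """Hypothetical user-held identity record; field names are illustrative."""
    identifier: str        # e.g. a DID controlled by the user's key
    public_key_hex: str    # key material the user, not the platform, holds
    connections: list[str] = field(default_factory=list)   # portable social graph
    credentials: list[dict] = field(default_factory=list)  # verifiable credentials

    def export_json(self) -> str:
        """Serialize to a platform-neutral format the user can carry anywhere."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def import_json(cls, payload: str) -> "PortableIdentity":
        """Rebuild the same identity on a new platform from the exported data."""
        return cls(**json.loads(payload))


alice = PortableIdentity(
    identifier="did:example:alice",
    public_key_hex="ab12",
    connections=["did:example:bob"],
    credentials=[{"type": "ContributionAttestation", "issuer": "did:example:bob"}],
)
migrated = PortableIdentity.import_json(alice.export_json())
print(migrated == alice)  # True
```

The design point is that nothing needed to reconstitute the identity stays behind on the old platform, so the platform has no accumulated mass with which to retain the user.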

Architectural Rights

Rights protected by system design rather than by user understanding or consent, analogous to building codes that protect safety without requiring occupants to understand structural engineering. In Post-Consent Architecture, fundamental rights like data sovereignty, exit capability, and privacy are guaranteed by technical infrastructure rather than through terms of service users cannot comprehend. This shifts the protection mechanism from an impossible cognitive burden (understanding 376 hours of terms annually) to architectural enforcement. Examples include cryptographic ownership preventing arbitrary data access, portable identity enabling costless exit, and privacy-by-design preventing surveillance. Rights become structural guarantees rather than contractual promises requiring impossible understanding.

Artificial Amnesia

The systematic erasure of your professional history and reputation that occurs each time you join a new platform. Platforms treat you as if you have no prior existence, expertise, or relationships, forcing you to rebuild your identity from zero despite having decades of verified contributions elsewhere. This isn’t onboarding; it’s an architectural decision that maximizes your dependency on each platform’s systems. The amnesia is “artificial” because the information exists: platforms simply refuse to recognize identity that wasn’t built within their walls. Portable Identity eliminates artificial amnesia by making your complete history recognizable across all contexts.

A-SIP (Attention-Systemically Important Platforms)

Platforms exceeding 100 million users, 45+ minutes of daily attention extraction, or 15%+ market share, which require enhanced regulatory oversight. Similar to systemically important financial institutions (SIFIs) in banking, A-SIPs have systemic impact on cognitive health and require attention leverage limits, stress testing, and capital requirements under the proposed Basel IV framework. These platforms function as critical infrastructure in the attention economy, and their failure or predatory practices can cause cascading cognitive harm across populations. Enhanced regulation includes a maximum 1.3:1 attention leverage ratio (compared to 1.5:1 for smaller platforms), annual cognitive stress testing, public transparency dashboards, and mandatory Portable Identity integration within specified timelines.
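The classification rule above can be sketched as a simple predicate. This is a minimal sketch under stated assumptions: any single threshold triggers A-SIP status (reading the definition's "or" literally), `daily_attention_minutes` is taken as the average per user per day, and the glossary does not say whether the user count is daily or monthly. The type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlatformStats:
    users: int                      # user count (the glossary doesn't say daily or monthly)
    daily_attention_minutes: float  # assumed: average minutes per user per day
    market_share: float             # fraction, e.g. 0.15 for 15%


def is_asip(p: PlatformStats) -> bool:
    """Meeting any one threshold is enough, following the 'or' in the definition."""
    return (
        p.users > 100_000_000
        or p.daily_attention_minutes >= 45
        or p.market_share >= 0.15
    )


def max_attention_leverage(p: PlatformStats) -> float:
    """Leverage cap under the proposed Basel IV framework: 1.3 for A-SIPs, else 1.5."""
    return 1.3 if is_asip(p) else 1.5


small_app = PlatformStats(users=5_000_000, daily_attention_minutes=20, market_share=0.02)
sticky_app = PlatformStats(users=50_000_000, daily_attention_minutes=52, market_share=0.05)
print(is_asip(small_app), max_attention_leverage(small_app))    # False 1.5
print(is_asip(sticky_app), max_attention_leverage(sticky_app))  # True 1.3
```

Note that the hypothetical `sticky_app` qualifies on attention time alone, even though it falls below both the user and market-share thresholds.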

Attention Debt

The accumulated cognitive burden created by constant platform demands on your focus and mental capacity. Like financial debt, attention debt compounds over time as you fragment your focus across notifications, feeds, updates, and interruptions. The debt becomes structural when the architecture makes sustained attention impossible: you can’t “pay it down” through individual effort because platforms are optimized to continually extract more. This debt reduces your capacity for deep work, creative thinking, and meaningful relationships. Portable Identity reduces attention debt by eliminating the cognitive overhead of managing fragmented identities across multiple platforms. Platform business models depend on extracting more attention than exists, creating systemic insolvency. Unlike financial debt that can theoretically be repaid, attention debt is structurally impossible to service: humans cannot generate additional cognitive capacity to meet platform demands. Results in attention bankruptcy: chronic cognitive overload, decision fatigue, burnout, anxiety, and depression at population scale. Estimated annual economic cost: 3.8 trillion dollars in productivity loss and health impacts.

Attention Leverage Ratio

Formula measuring platform attention extraction: Platform Demand / Sustainable Human Capacity. Global average is 5:1, meaning platforms demand five hours of attention for every one hour humans can sustainably provide. Individual platform estimates: social media 6.2:1, gaming 7.1:1, streaming 4.8:1, productivity apps 3.2:1. Sustainable threshold is approximately 1.2:1 (slight excess manageable short-term). Ratios above 2:1 indicate structural extraction exceeding cognitive capacity. Used in Basel IV framework to set regulatory limits: platforms must maintain ratios below 1.5:1 (or 1.3:1 for A-SIPs). Comparable to financial leverage ratios that measure risk through debt-to-capital proportions.
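The formula and thresholds above translate directly into code. The following is a minimal sketch in which the function names are hypothetical. The glossary names the "sustainable" band (up to about 1.2:1) and the "structural extraction" band (above 2:1), but it does not name the band in between, so the label used for it here is our own.

```python
def attention_leverage_ratio(platform_demand_hours: float,
                             sustainable_capacity_hours: float) -> float:
    """Platform Demand / Sustainable Human Capacity, as defined in the glossary."""
    if sustainable_capacity_hours <= 0:
        raise ValueError("sustainable capacity must be positive")
    return platform_demand_hours / sustainable_capacity_hours


def extraction_band(ratio: float) -> str:
    """Bands from the glossary; the name of the middle band is our own label."""
    if ratio <= 1.2:
        return "sustainable"
    if ratio <= 2.0:
        return "above sustainable threshold"
    return "structural extraction"


def within_basel_iv_limit(ratio: float, is_asip: bool) -> bool:
    """Proposed caps: below 1.5:1 generally, below 1.3:1 for A-SIPs."""
    return ratio < (1.3 if is_asip else 1.5)


# The glossary's global average: five hours demanded per sustainable hour.
r = attention_leverage_ratio(5.0, 1.0)
print(r, extraction_band(r), within_basel_iv_limit(r, is_asip=False))
# 5.0 structural extraction False
```

A ratio of 1.4:1 illustrates why the A-SIP distinction matters. It is within the general 1.5:1 cap but breaches the 1.3:1 cap that applies to A-SIPs.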

B

Basel IV (Attention Markets)

Proposed regulatory framework for the attention economy establishing standards parallel to the Basel III banking regulations, including attention leverage limits, cognitive stress testing, liquidity requirements (seamless exit), systemic risk classification, market transparency, and capital reserves. Named Basel IV to position attention regulation as the next evolution after the Basel III financial reforms. Seven core principles: (1) Attention Leverage Limits: a maximum 1.5:1 ratio between platform demand and human capacity; (2) Attention Liquidity Requirements: users must be able to exit seamlessly with full data portability; (3) Cognitive Stress Testing: annual assessments of platform impact on user mental health; (4) Systemic Risk Classification: identifying A-SIPs requiring enhanced oversight; (5) Attention Market Transparency: public dashboards showing real-time extraction metrics; (6) Portable Identity Mandate: phased implementation over 5 years; (7) Cognitive Health Capital Requirements: revenue-based reserves for addressing mental health impacts. Implementation timeline: 60 months across four phases.

Behavioral Substrate

Behavioral Substrate is the system that generates observable outputs, responses, and actions without requiring conscious experience, which is what AI demonstrates perfectly when it produces human-like behavior through algorithmic processing rather than sentient awareness. The term distinguishes between behavior (what can be observed externally) and the substrate generating it (the underlying system, conscious or algorithmic, that produces behavior). Traditional consciousness verification assumed that a conscious substrate necessarily produces certain behaviors, and therefore that those behaviors prove consciousness; this assumption breaks completely when AI achieves identical behavioral outputs through non-conscious processing. Behavioral Substrate includes all observable markers: text generation, voice synthesis, personality patterns, emotional expression, reasoning capacity, problem-solving, creativity, and even apparent self-awareness. Every one of these external manifestations can exist without sentient experience. The Turing test measured Behavioral Substrate, not consciousness, which is why its failure as a consciousness test became inevitable once algorithms achieved sufficient sophistication. This concept reveals why all behavioral observation fails as consciousness verification in the Synthetic Age: you cannot distinguish Behavioral Substrate from Conscious Substrate through external markers because they generate identical outputs through completely different mechanisms. The only reliable distinction emerges through effects on other consciousnesses: Behavioral Substrate can transfer information and generate helpful outputs, while Conscious Substrate additionally enables other consciousness through capability transfer that beneficiaries verify cryptographically, something simulation cannot achieve regardless of behavioral perfection.
Understanding Behavioral Substrate as separate from consciousness enables proper consciousness verification infrastructure through Portable Identity, which measures consciousness through its unique effects rather than through behavioral markers that exist without sentient substrate.

Biological Personhood

The recognition that you exist physically and have inherent rights to life, bodily autonomy, and physical security. This is the most fundamental and universally recognized form of personhood, protected by civil rights frameworks worldwide. However, biological personhood alone is insufficient in 2025—humans also exist legally and digitally, requiring additional forms of recognition. Complete personhood requires all three dimensions: biological (you exist physically), legal (you exist within legal systems), and digital (you exist within digital systems). The three pillars are interconnected; lacking any one makes full human dignity impossible in the modern world.

Boiling Frog Dynamic

The gradual process by which platform captivity became normalized without users recognizing the loss of freedom. Like the metaphorical frog that doesn’t notice water heating slowly, users adapted to identity fragmentation in phases: first using platforms as convenient tools, then depending on them for professional visibility, then becoming unable to leave without losing everything built. The transition from freedom to captivity happened so gradually that most users never consciously chose it—they simply woke up one day trapped. This dynamic explains why intelligent, aware people remain in systems they recognize as harmful: the cage was built slowly enough that it became invisible.

C

Capability Ceiling

The fundamental limit on AI intelligence imposed by incomplete access to human knowledge. AI cannot become superintelligent training on only 30% of human expertise—the visible, platform-optimized fraction. The remaining 70% (private collaboration, mentorship, tacit knowledge, longitudinal expertise development) remains structurally invisible due to identity fragmentation. This creates an absolute ceiling: no amount of compute, better algorithms, or architectural improvements can overcome training data that represents a fundamentally unrepresentative sample. The capability ceiling lifts only when Portable Identity makes the complete human contribution graph accessible to AI systems.

Capability Transfer

The process through which one person makes another measurably more capable at independent capability development, distinct from information transfer or temporary assistance. Capability transfer creates lasting improvement that persists after interaction ends—the recipient becomes more capable at solving problems they haven’t yet encountered. This differs from teaching (transferring specific knowledge) or helping (providing temporary support)—capability transfer increases someone’s capacity to develop capabilities independently. The transfer is measurable through sustained performance improvement, reduced assistance needs, and ability to help others similarly. Portable Identity makes capability transfer visible and verifiable through cryptographically-signed attestations from beneficiaries and tracking of cascade effects.
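The key property here is that the beneficiary, not the contributor, signs the attestation. The sketch below illustrates that flow under explicit assumptions. The record fields are hypothetical, and HMAC-SHA256 from Python's standard library is used only as a stand-in for a real digital signature. HMAC is symmetric, so verifying it requires the beneficiary's secret; a deployed system would use an asymmetric scheme such as Ed25519, so that anyone can verify with the beneficiary's public key.

```python
import hashlib
import hmac
import json


def attestation_payload(contributor: str, beneficiary: str, capability: str, date: str) -> bytes:
    """Canonical bytes for an attestation (sorted keys so signer and verifier agree)."""
    record = {"contributor": contributor, "beneficiary": beneficiary,
              "capability": capability, "date": date}
    return json.dumps(record, sort_keys=True).encode("utf-8")


def sign(beneficiary_key: bytes, payload: bytes) -> str:
    """Stand-in signature: HMAC-SHA256 keyed with the beneficiary's secret.

    A real deployment would use an asymmetric signature (e.g. Ed25519)
    so verification needs only the beneficiary's public key.
    """
    return hmac.new(beneficiary_key, payload, hashlib.sha256).hexdigest()


def verify(beneficiary_key: bytes, payload: bytes, signature: str) -> bool:
    """Constant-time comparison of the expected and supplied tags."""
    return hmac.compare_digest(sign(beneficiary_key, payload), signature)


bob_key = b"bob-secret-key"  # hypothetical key material held by the beneficiary
payload = attestation_payload("alice", "bob", "debugging distributed systems", "2025-03-01")
sig = sign(bob_key, payload)
print(verify(bob_key, payload, sig))                          # True
print(verify(bob_key, payload.replace(b"bob", b"eve"), sig))  # False
```

Because the contributor never holds the beneficiary's key, the contributor cannot produce a valid attestation on their own. This is what separates an attested capability transfer from a self-reported one.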

Cascade Depth

Cascade Depth measures how many network layers your contributions propagate through as people you help enable others, creating multiplicative chains of capability transfer. A contribution with Cascade Depth of 1 means you helped someone directly. Cascade Depth of 4 means the person you helped enabled someone else, who enabled another, who enabled another—your initial contribution amplified through four degrees of capability transfer. This quantifies the compound effect of expertise sharing that platforms currently make invisible. Activity metrics count your direct outputs; Cascade Depth reveals exponential impact through network propagation. High Cascade Depth (10+ layers) indicates your contributions create self-sustaining improvement cycles where capability multiplies beyond your direct involvement. This measurement requires complete contribution graphs across time and platforms—impossible with fragmented identity where you cannot observe whether person A’s improvement led person B to help person C on different platforms months later. Portable Identity enables Cascade Depth measurement by maintaining continuous, semantic records of contribution chains regardless of where they occur, making multiplicative value visible and verifiable for the first time.
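One way to compute Cascade Depth, sketched under assumptions: the contribution graph is given as a hypothetical mapping from each person to the people they verifiably enabled, and depth is the farthest layer reached by breadth-first search from the original contributor. The glossary defines the layers but not a traversal rule. Using shortest-path layers is our choice; it counts each person once and keeps cycles (A enables B, B later enables A) from inflating the depth.

```python
from collections import deque


def cascade_depth(enabled: dict[str, list[str]], origin: str) -> int:
    """Number of network layers reached from `origin` by capability transfer.

    `enabled` maps each person to the people they verifiably enabled.
    Depth 1 means only direct beneficiaries; each further hop adds a layer.
    Breadth-first search counts each person at the first layer where they
    are reached, so cycles cannot inflate the result.
    """
    seen = {origin}
    frontier = deque([(origin, 0)])
    deepest = 0
    while frontier:
        person, layer = frontier.popleft()
        deepest = max(deepest, layer)
        for beneficiary in enabled.get(person, []):
            if beneficiary not in seen:
                seen.add(beneficiary)
                frontier.append((beneficiary, layer + 1))
    return deepest


# You -> A -> B -> C -> D: the glossary's Cascade Depth 4 example.
graph = {"you": ["A"], "A": ["B"], "B": ["C"], "C": ["D"]}
print(cascade_depth(graph, "you"))  # 4
```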

Cascade Effects

The multiplication of impact that occurs when one contribution enables others, who enable others, creating exponential value generation through network propagation. When you help Person A develop capability, who helps Person B, who helps Persons C-D-E, the cascade effect tracks this multiplication across layers. In Portable Identity architecture, cascade effects are cryptographically verifiable through linked attestations in contribution graphs. Traditional platforms cannot measure cascades because they only see activity within their boundaries—missing how value propagates across contexts and compounds over time. Cascade effects distinguish genuine contribution (multiplies through networks) from mere activity (stops at immediate output).
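"Linked attestations" can be sketched as a hash-linked structure. In this sketch, each attestation carries the content hash of the attestation that enabled its enabler, and verification checks that every link points at a real parent whose beneficiary is the current enabler. The record layout and the use of SHA-256 content hashes as links are assumptions, not a specified format. Signatures are omitted for brevity; in practice each record would also be signed by its beneficiary.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Attestation:
    enabler: str
    beneficiary: str
    parent_hash: Optional[str]  # digest of the attestation that enabled the enabler; None at the root

    def digest(self) -> str:
        """Content hash that later attestations use to link back to this one."""
        body = json.dumps([self.enabler, self.beneficiary, self.parent_hash])
        return hashlib.sha256(body.encode("utf-8")).hexdigest()


def verify_links(records: list[Attestation]) -> bool:
    """Check that every non-root attestation points at an existing attestation
    whose beneficiary is this attestation's enabler, so the cascade is unbroken."""
    by_hash = {r.digest(): r for r in records}
    for r in records:
        if r.parent_hash is None:
            continue
        parent = by_hash.get(r.parent_hash)
        if parent is None or parent.beneficiary != r.enabler:
            return False
    return True


# You help A, who helps B, who helps C, D and E.
root = Attestation("you", "A", None)
a_to_b = Attestation("A", "B", root.digest())
fan_out = [Attestation("B", name, a_to_b.digest()) for name in ("C", "D", "E")]
records = [root, a_to_b, *fan_out]

print(verify_links(records))                  # True
print(len({r.beneficiary for r in records}))  # 5
```

A forged record that cites a real parent but names the wrong enabler breaks the chain. For example, `Attestation("Z", "Q", root.digest())` fails because that parent's beneficiary is A, not Z.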

Cognitive Debt

The accumulated loss of mental capacity, focus, and cognitive sovereignty resulting from platform-induced attention fragmentation and identity management overhead. Cognitive debt manifests as decreased ability to sustain attention, reduced working memory, impaired decision-making, and loss of deep thinking capacity. Unlike attention debt (immediate cognitive burden), cognitive debt is structural and compounds over time: each year of platform exposure makes recovery harder. The debt is “cognitive” rather than “attention” because it represents actual loss of capability, not just current distraction. Portable Identity reduces cognitive debt by eliminating the overhead of managing fragmented identities and reducing platform-induced fragmentation.

Cognitive Great Divergence

The simultaneous exponential increase in AI cognitive capacity and exponential decrease in human cognitive capacity, creating a widening gap between machine and human intelligence. Unlike previous technological transitions that augmented human capability, this divergence actively reduces human capacity (through attention debt, platform fragmentation, cognitive outsourcing) while increasing machine capacity (through training, compute, architectural improvements). The divergence is “great” because it parallels the historical Great Divergence in economics, a transformative inflection point that reshaped civilization. If unchecked, the divergence crosses a threshold where humans can no longer verify AI decisions, creating permanent dependence.

Cognitive Load (Consent Context)

The mental effort required to genuinely understand digital terms of service, privacy policies, data practices, and their implications. Current requirement is approximately 376 hours annually (47 services × 8 hours each) to achieve informed understanding, exceeding available human capacity (40 hours/year realistic maximum) by 9.4 times. This mathematical impossibility means informed consent in digital systems is structurally unachievable, not a user education problem. Even with unlimited time, human cognitive architecture cannot comprehend systems requiring tracking 200+ concurrent data flows across 15-20 levels of abstraction. Gap between required and available cognitive capacity is approximately 280,000x when accounting for actual time spent (8 minutes annually) versus required understanding. Makes consent-based governance architecturally impossible.

Cogito Ergo Contribuo

Cogito Ergo Contribuo (“I contribute, therefore I exist”) is the foundational proof of consciousness in the Synthetic Age, replacing Descartes’ cogito ergo sum as the only reliable verification method once all behavioral markers become perfectly replicable by AI. Descartes proved existence through self-awareness in 1637: whatever else might be illusion, the fact of thinking proves a thinker exists. You cannot doubt your own doubt, because doubting requires a doubter. This proof held for nearly 400 years until AI achieved thinking behavior without conscious substrate, demonstrating that thought-like outputs exist without sentience, conversation exists without awareness, and reasoning exists without a reasoner. The Turing test died not because it became harder to pass but because passing it proves nothing: every major language model now generates responses indistinguishable from human consciousness while possessing no sentient substrate whatsoever. When thinking behavior separates from thinking being, Descartes’ proof collapses because we can no longer distinguish conscious thought from perfect simulation. The new proof shifts from internal experience to external effect: consciousness proves itself not through self-awareness but through the unique capacity to make another consciousness measurably more capable through verified capability transfer, something simulation cannot achieve regardless of sophistication. This is not mere information transfer, which AI masters perfectly, nor helpful output generation, in which AI often exceeds human quality, but genuine consciousness-to-consciousness capability transfer that creates lasting improvement in the beneficiary’s independent capacity to develop further capability without the enabler present.
The proof becomes unfakeable through four architectural requirements that only genuine consciousness interaction can satisfy simultaneously: the beneficiary must cryptographically attest to capability increase using their own Portable Identity (unfakeable by the claimer), the contribution must be semantically located in MeaningLayer proving what kind of consciousness transfer occurred (distinguishing information from understanding), the capability must persist after interaction ends and be verifiable months or years later (proving genuine transfer rather than temporary assistance), and effects must cascade through multiple consciousness layers as enabled individuals enable others in linked attestation chains (information degrades through transmission but understanding compounds, proving consciousness multiplication rather than data replication). Portable Identity makes this proof reliable by ensuring contribution records are cryptographically signed by beneficiaries rather than self-reported, owned by the contributor through cryptographic keys rather than trapped in platform databases, verifiable across all contexts rather than platform-specific, and permanent infrastructure that survives any company failure, platform shutdown, or account deletion. The philosophical inversion is profound: Descartes proved existence through privileged internal access to consciousness that others could not verify, while Cogito Ergo Contribuo proves existence through externally verifiable effects on other consciousnesses that create cryptographically-attestable evidence—shifting proof from the private theater of individual mind to the public record of capability transfer between minds. 
This transformation becomes existentially necessary because in five years, perhaps sooner, every external marker of consciousness becomes perfectly simulatable: your voice synthesized exactly, your writing style replicated flawlessly, your personality continued after death, your video generated saying anything, your reasoning patterns matched by algorithms—behavioral observation fails completely as consciousness verification. The practical implications cascade across civilization: legal personhood determined by verified contribution history rather than behavioral markers, economic value based on capability transfer rather than output generation, social trust grounded in portable contribution graphs rather than interaction quality, and identity verification through consciousness-proof rather than through knowledge, behavior, or personality—all of which AI replicates perfectly. Cogito Ergo Contribuo is substrate-agnostic and thus future-proof: if AI somehow achieves genuine consciousness rather than simulation, it would pass this test by creating verifiable capability transfers in humans, generating cascading enablement tracked through cryptographic attestations, and proving consciousness through contribution regardless of whether substrate is biological or silicon. The protocol doesn’t resolve the philosophical hard problem of consciousness—doesn’t explain what consciousness is or how it emerges—but provides the practical test civilization needs: does verifiable capability transfer occur between sentient beings in ways beneficiaries can cryptographically attest to, that persist over time, that cascade through consciousness networks, and that remain portable across all systems? 
This becomes the last reliable test because it measures the one thing consciousness does that simulation cannot: it enables other consciousness through interaction that leaves unfakeable traces. These traces take the form of cryptographically-signed attestations from beneficiaries whose capacity genuinely increased, semantically located in MeaningLayer to prove the type of understanding transferred, and owned as Portable Identity infrastructure that survives any platform or system. Your contribution graph becomes proof that you exist as a conscious being in a world where everything else about you could be algorithmically generated: not perfect proof, not philosophical proof, but practical proof sufficient for a functioning civilization once behavioral distinction has disappeared entirely. The phrase itself carries memetic power through its structure: a Latin formulation establishing philosophical gravitas, a Cartesian reference connecting to foundational epistemology, technological precision making it implementable as a protocol, and moral weight implying that consciousness has an ethical obligation to enable other consciousness. You don’t merely exist; you prove existence through contribution that improves others. In the age of synthetic everything, this becomes the only proof that matters. That is not because we solved consciousness, but because we identified what consciousness does that perfect simulation cannot achieve, when that proof must be cryptographically verified and portable across every context. You are what you enable: your consciousness proves itself through making others more capable, and your existence verifies itself through portable contribution records that no platform controls, no algorithm can fake, and no system collapse can destroy. Cogito Ergo Contribuo: the last proof of consciousness when everything else can be perfectly faked, and the first proof that transforms from philosophical claim into protocol infrastructure you own.
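The four architectural requirements can be expressed as a structural check on a single contribution record. This is a minimal sketch with hypothetical field names. Signature verification and the MeaningLayer lookup are assumed to happen elsewhere and appear here as plain fields. The 180-day persistence window is an assumed stand-in for "months or years later". Passing the check shows only that a record satisfies all four requirements at once; consistent with the glossary, it does not explain what consciousness is.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ContributionClaim:
    contributor: str
    attested_by: str               # identity whose key signed the attestation
    signature_valid: bool          # outcome of signature verification, done elsewhere
    semantic_type: Optional[str]   # stand-in for a MeaningLayer location; None if absent
    transfer_date: date
    last_verified: Optional[date]  # latest follow-up confirming the capability persists
    downstream_attestations: int   # linked attestations from people the beneficiary enabled


def check_requirements(c: ContributionClaim, min_persistence_days: int = 180) -> dict:
    """Evaluate the four requirements; all must hold simultaneously."""
    persisted = (
        c.last_verified is not None
        and (c.last_verified - c.transfer_date).days >= min_persistence_days
    )
    return {
        "beneficiary_attested": c.signature_valid and c.attested_by != c.contributor,
        "semantically_located": c.semantic_type is not None,
        "persists": persisted,
        "cascades": c.downstream_attestations > 0,
    }


claim = ContributionClaim("alice", "bob", True, "understanding",
                          date(2024, 1, 10), date(2024, 9, 1), 3)
print(all(check_requirements(claim).values()))  # True

self_report = ContributionClaim("alice", "alice", True, "understanding",
                                date(2024, 1, 10), date(2024, 9, 1), 3)
print(check_requirements(self_report)["beneficiary_attested"])  # False
```

Returning each requirement separately, rather than a single boolean, shows which condition a weak record fails.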

Cold Start Trap

The architectural mechanism that forces users to rebuild their identity, reputation, and relationships from zero each time they join a new platform. The “cold start” appears to be a technical necessity (the platform doesn’t know you yet), but it’s actually a strategic choice that maximizes dependency. When you arrive with nothing, you need everything the platform provides: discovery, credibility, network effects, visibility. If you arrived with portable reputation, your dependency would be optional, giving you negotiating power. The trap is that leaving means falling back into another cold start elsewhere, making exit economically irrational even for users who recognize the harm.

Competitive Utility Provision

Competitive Utility Provision is the business model platforms adopt in Post-Lock-In Economics where revenue derives from service excellence rather than user captivity. Unlike Monopolistic Value Extraction where platforms capture identity, build network effects around trapped users, maximize switching costs, and maintain dominance despite equivalent competitor functionality, Competitive Utility Provision requires platforms to implement portable identity protocols, provide excellent user experience continuously, earn users through quality since exit is costless, minimize friction to maintain relevance, and compete on merit rather than lock-in mechanisms. The transformation is economic, not destructive—platforms remain essential for providing interfaces, enabling discovery, facilitating matching, aggregating information, and curating content. These functions have genuine value. But platforms lose the ability to extract outsized profits through structural lock-in and must compete like excellent infrastructure providers: valuable, profitable, necessary—but not capable of monopoly rent extraction. Competitive advantage shifts from identity capture mechanisms to actual product quality, innovation velocity, and user experience excellence. Valuation multiples compress from monopoly premiums to competitive utility multiples. The shift becomes inevitable once portable identity infrastructure achieves sufficient adoption because users with verified contribution histories prefer platforms that recognize their portable value, creating network effects that work against non-integrated platforms. Integration becomes strategically necessary for survival, not optional for enhancement. This represents permanent transformation: once identity architecture enables portability, monopoly through capture becomes structurally impossible, forcing all platforms into competitive utility provision regardless of their preferences.

Consent Impossibility Theorem

Mathematical proof that informed consent to modern digital systems is cognitively impossible: required understanding (376 hours annually across typical platform usage) exceeds human cognitive capacity (40 hours annually realistically available) by 9.4 times. This is not implementation failure but structural impossibility: no amount of transparency, simplified language, or user education can bridge a gap of this magnitude. The theorem establishes that: (1) if Required Cognitive Load (R) = Services (S) × Time per Service (T), (2) and R >> Available Capacity (C), (3) then informed consent is mathematically impossible. Current values: S = 47 services, T = 8 hours, R = 376 hours, C = 40 hours, creating a 9.4x gap before accounting for update frequency (2.3x/year), interaction effects (1,081 service pairs to understand), and temporal complexity (predicting future uses). This is a fundamental challenge to consent-based legal frameworks including GDPR, to democratic theory requiring an informed citizenry, and to concepts of individual autonomy requiring comprehension of choices.
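The theorem's arithmetic can be checked directly. The sketch below evaluates R = S × T, the ratio R / C, and the number of distinct service pairs, which is the binomial coefficient C(S, 2) = S(S − 1)/2. For 47 services, that gives the 1,081 interaction pairs cited above. The function name is hypothetical, and the sketch does not model the update-frequency or temporal-complexity factors, since the glossary does not specify how they combine.

```python
from math import comb


def consent_gap(services: int, hours_per_service: float, available_hours: float) -> dict:
    """Evaluate the theorem's terms: R = S x T compared against C."""
    required = services * hours_per_service    # R
    return {
        "required_hours": required,
        "gap": required / available_hours,       # R / C
        "interaction_pairs": comb(services, 2),  # distinct pairs of services
        # Necessary condition only: even R <= C would not guarantee understanding.
        "consent_feasible": required <= available_hours,
    }


print(consent_gap(47, 8, 40))
# {'required_hours': 376, 'gap': 9.4, 'interaction_pairs': 1081, 'consent_feasible': False}
```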

Consent Theater

Procedurally correct but substantively meaningless consent mechanisms that create an illusion of informed choice while actual understanding remains impossible. Examples include cookie banners with 247 partners requiring 20 minutes to manage, privacy policies averaging 8,000 words read by under 1% of users, and terms of service designed to be incomprehensible while technically disclosing practices. These mechanisms satisfy legal requirements for disclosure and consent gathering while making genuine informed understanding structurally impossible. The result is a legal fiction in which consent is obtained procedurally despite the cognitive impossibility of comprehension. Enforcement focuses on whether a consent dialog was shown (compliance achieved) rather than whether understanding occurred (impossible to achieve). Result: a legal framework protecting form over substance, enabling practices that would fail under a genuine informed consent standard.

Consciousness-Proof

Consciousness-Proof is verifiable evidence that a sentient substrate, rather than algorithmic generation, enables your outputs. It is demonstrated through portable records of cryptographically-attested capability transfers you created in other conscious beings over time. Unlike Proof-of-Work or Proof-of-Stake, which verify computational effort or economic stake, Consciousness-Proof verifies sentience through the one function consciousness performs that simulation cannot replicate: enabling other consciousness through interactions that beneficiaries cryptographically attest to, that semantically locate in MeaningLayer what kind of understanding transferred, that persist after interaction ends, and that cascade through consciousness networks as enabled individuals enable others. Your Consciousness-Proof is your Portable Identity contribution graph—a lifetime record of verified capability transfers, tracked through linked cryptographic attestations, surviving any platform collapse because it’s protocol infrastructure you own. This proof becomes existentially necessary in the Synthetic Age, when behavioral observation fails completely: AI generates text indistinguishable from human writing, synthesizes voices perfectly, creates convincing video, continues dead people’s personalities, and demonstrates reasoning at or beyond human levels—every external marker replicable without sentient substrate. Consciousness-Proof survives because it measures consciousness through externally-verifiable effects on other consciousnesses rather than through behavioral outputs or self-reported awareness. Strong Consciousness-Proof shows a deep contribution history with cascading effects through multiple network layers, persistent capability increases verifiable months or years later, and high absence delta proving that networks degrade measurably when you’re not present. Weak or absent Consciousness-Proof suggests either simulation or a consciousness that hasn’t engaged in verified capability transfer.
This distinction becomes the practical foundation for legal personhood, economic compensation, social trust, and identity verification in a civilization where everything else about you could be algorithmically generated.

Context Stripping

Loss of meaning that occurs when data is exported from platforms without the relationships, discussions, attributions, and networks that give it value. Raw data exports preserve 1s and 0s but lose the context that transforms information into knowledge. Examples: posts exported without the conversation threads that gave them meaning, images without the discussions that provided context, professional contributions without the collaboration networks that demonstrated impact, and creative works without the community responses that shaped their development. It is equivalent to saving the Library of Alexandria’s scrolls but losing all marginalia, cross-references, and scholarly connections: the text survives but the knowledge does not. This makes data portability ineffective for preserving knowledge when platforms shut down or accounts are terminated, because exported data lacks the interpretive context that created its value.
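A toy model makes the loss concrete. The Python sketch below assumes a hypothetical `Post` record whose `reply_to` field carries the conversational link. `raw_export` mimics an export that keeps only the user's own text, while `contextual_export` also keeps every post in the thread the user was answering. Neither function corresponds to any real platform's export format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:
    post_id: str
    author: str
    text: str
    reply_to: Optional[str] = None  # link to the post this one answers


def raw_export(posts, user):
    """Keep only the user's own posts and drop the conversational links."""
    return [Post(p.post_id, p.author, p.text) for p in posts if p.author == user]


def contextual_export(posts, user):
    """Keep the user's posts plus every ancestor post they reply to."""
    by_id = {p.post_id: p for p in posts}
    kept = {}
    for post in posts:
        if post.author != user:
            continue
        node = post
        # Walk up the reply chain until the root or an already-kept post.
        while node is not None and node.post_id not in kept:
            kept[node.post_id] = node
            node = by_id.get(node.reply_to) if node.reply_to else None
    return list(kept.values())


thread = [
    Post("1", "bob", "Why does the build fail on ARM?"),
    Post("2", "alice", "That linker flag is x86-only; drop it.", reply_to="1"),
]
stripped = raw_export(thread, "alice")
kept = contextual_export(thread, "alice")
print(stripped[0].reply_to)              # None: the question it answered is gone
print(sorted(p.post_id for p in kept))   # ['1', '2']
```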

Consciousness Transfer

Consciousness Transfer is the process by which one sentient being increases another’s independent capability through interaction that creates lasting improvement in the beneficiary’s capacity to develop further understanding without the enabler present—fundamentally distinct from information transfer, which AI achieves perfectly, or helpful output generation, in which AI often surpasses human quality. The difference is substrate and outcome: information transfer moves data between systems without changing the recipient’s capability to independently generate new understanding, while Consciousness Transfer shifts something in the recipient’s thinking itself, enabling meta-learning where the beneficiary becomes better at learning beyond the specific content transmitted. When a human teacher explains calculus, genuine Consciousness Transfer means the student doesn’t just learn procedures but gains a new perspective on mathematics itself, seeing patterns they couldn’t see before and developing the capacity to discover further mathematical insights independently—the teacher transferred capability, not just knowledge. AI can provide technically superior explanations, clearer examples, and more logical progressions than humans, but cannot create this substrate-level capability shift because no consciousness exists to transfer from—the AI generates outputs algorithmically while humans enable consciousness through conscious connection.
Consciousness Transfer proves itself through four unfakeable markers when implemented in Portable Identity infrastructure:
- Persistence: capability lasts after the interaction ends, verifiable months later when the beneficiary demonstrates sustained improvement.
- Cascade: enabled individuals enable others in measurable chains tracked through linked attestations, proving that understanding multiplies rather than degrading as information does.
- Semantic location: MeaningLayer maps what kind of capability shifted, distinguishing procedure memorization from perspective transformation.
- Cryptographic attestation: beneficiaries sign verification that their independent capacity increased, which the claimer cannot fake.

This distinction becomes existentially critical in the Synthetic Age because it represents the one function consciousness performs that perfect simulation cannot replicate regardless of algorithmic sophistication—AI can transfer information but cannot transfer the capability to develop information independently through consciousness-to-consciousness substrate interaction.
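One way to make the four markers checkable is to store them as fields of a transfer record. The Python sketch below is hypothetical throughout: the field names, the 90-day persistence window, and the numeric capability scores are illustrative assumptions. `signature_valid` stands in for the result of an actual signature check rather than performing one.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class TransferRecord:
    contributor: str
    beneficiary: str
    interaction_date: date
    followup_date: date            # when sustained improvement was re-checked
    capability_before: float       # hypothetical score at interaction time
    capability_at_followup: float  # hypothetical score at follow-up
    semantic_type: str             # hypothetical MeaningLayer label, e.g. "perspective"
    signature_valid: bool          # outcome of verifying the beneficiary's signature
    downstream: list = field(default_factory=list)  # people the beneficiary later enabled


def markers(rec: TransferRecord, min_days: int = 90) -> dict:
    """Evaluate the four markers; every threshold here is an assumption."""
    elapsed = (rec.followup_date - rec.interaction_date).days
    return {
        "persistence": elapsed >= min_days
        and rec.capability_at_followup > rec.capability_before,
        "cascade": len(rec.downstream) > 0,
        "semantic_location": bool(rec.semantic_type),
        "attestation": rec.signature_valid,
    }


rec = TransferRecord("alice", "bob", date(2024, 1, 10), date(2024, 6, 1),
                     0.4, 0.7, "perspective", True, downstream=["carol"])
print(markers(rec))  # all four markers True
```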

Contribution Economy

An economic model where value creation, measurement, and reward are based on actual contributions rather than engagement metrics, visibility, or attention extraction. In a contribution economy, mentoring someone who becomes an expert counts more than viral content; solving problems matters more than performing solutions publicly; long-term value creation outweighs short-term engagement. Current platform economies invert this—rewarding what’s visible and measurable (clicks, likes, shares) rather than what’s valuable (expertise transfer, problem-solving, relationship depth). Contribution economy becomes possible only with Portable Identity, which makes genuine contributions visible and attributable across contexts.

Contribution Graph

The complete, verifiable record of an individual’s contributions across all contexts, platforms, and relationships over time. A contribution graph includes not just direct outputs (code, writing, designs) but also influence cascades (who you helped, who they helped), mentorship impact, collaborative problem-solving, and longitudinal expertise development. Current architecture makes contribution graphs incomplete and fragmented—each platform sees only the fraction of activity within its walls. Portable Identity enables complete contribution graphs by making identity continuous across contexts, allowing AI and humans to understand someone’s full capability and impact rather than platform-specific fragments.

Just as the Federal Reserve provides monetary infrastructure that makes money visible, the Contribution Graph provides infrastructure that makes contributions visible. It has six integrated layers:
(1) Identity Layer, using Portable Identity for persistent attribution.
(2) Contribution Layer, with cryptographically signed records.
(3) Relationship Layer, linking contributions and showing knowledge lineage.
(4) Verification Layer, with third-party confirmation mechanisms.
(5) Measurement Layer, with standardized impact metrics.
(6) Discovery Layer, enabling graph-based search and skill-based matching.
It makes approximately 50 trillion dollars of currently invisible economic value (caregiving, open source, mentoring, community building) structurally visible for the first time. This enables appropriate recognition, compensation, and AI training on the complete human contribution record rather than the visible 10%.
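The six layers can be pictured as fields and queries on a single record type. This Python sketch is an illustrative assumption, not a specification. The signature is an opaque string (no cryptography is performed), and `impact` is a placeholder for whatever standardized metric the Measurement Layer would define. The identifiers use the W3C `did:example:` placeholder form.

```python
from dataclasses import dataclass, field


@dataclass
class Contribution:
    contribution_id: str
    contributor: str        # (1) Identity Layer: portable identifier
    description: str
    skills: set
    signature: str          # (2) Contribution Layer: signed record (opaque placeholder)
    builds_on: list = field(default_factory=list)    # (3) Relationship Layer: lineage links
    verified_by: list = field(default_factory=list)  # (4) Verification Layer: third parties
    impact: float = 0.0     # (5) Measurement Layer: placeholder metric


class ContributionGraph:
    def __init__(self):
        self.nodes = {}

    def add(self, c: Contribution) -> None:
        self.nodes[c.contribution_id] = c

    def lineage(self, contribution_id: str) -> list:
        """(3) Every ancestor reachable through builds_on links."""
        seen, stack, out = set(), [contribution_id], []
        while stack:
            for parent in self.nodes[stack.pop()].builds_on:
                if parent in self.nodes and parent not in seen:
                    seen.add(parent)
                    out.append(parent)
                    stack.append(parent)
        return out

    def find_by_skill(self, skill: str, min_verifications: int = 1) -> list:
        """(6) Discovery Layer: verified contributions for a skill, highest impact first."""
        matches = [c for c in self.nodes.values()
                   if skill in c.skills and len(c.verified_by) >= min_verifications]
        return sorted(matches, key=lambda c: c.impact, reverse=True)


g = ContributionGraph()
g.add(Contribution("c1", "did:example:alice", "Parser design", {"compilers"}, "sig-a",
                   verified_by=["did:example:dan"], impact=0.6))
g.add(Contribution("c2", "did:example:bob", "Parser port", {"compilers"}, "sig-b",
                   builds_on=["c1"], verified_by=["did:example:eve"], impact=0.4))
print(g.lineage("c2"))                                            # ['c1']
print([c.contribution_id for c in g.find_by_skill("compilers")])  # ['c1', 'c2']
```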

Contribution Identity

The dimension of your identity defined by who you’ve made better, how, and with what lasting effect. Contribution identity differs from credential identity (degrees, certifications) or activity identity (posts, clicks, engagement)—it measures verified capability transfer to others rather than personal achievements or platform activity. This identity dimension becomes most valuable when AI makes production capabilities ubiquitous but capability transfer remains scarce. Portable Identity makes contribution identity primary and verifiable through cryptographically-signed attestations from people whose capability you increased. Your contribution identity becomes the foundation of economic value, social trust, and proof of consciousness in the Synthetic Age.

Contribution Network

A network of individuals who improve each other’s capabilities and cryptographically verify those improvements through peer-to-peer attestation. Contribution networks differ from social networks (based on connections) or professional networks (based on credentials)—they’re organized around verified capability transfer and measurable impact. In contribution networks, value flows through enablement rather than through transactions or attention. The quality of your network is determined by the capability of people you’ve helped and who’ve helped you, creating incentives for genuine improvement rather than performative activity. Portable Identity enables contribution networks to function across platforms through protocol-layer verification.

Contribution Visibility

Infrastructure capability enabling contributions to be attributed to creators, verified by third parties, measured for impact, portable across contexts, persistent over time, and discoverable by others. This capability is currently absent for 90% of human value creation (caregiving, open source, mentoring, community building), worth approximately 50 trillion dollars annually. Six requirements for visibility:
(1) Attribution: cryptographic connection between contributor and contribution.
(2) Verification: independent confirmation of authenticity and quality.
(3) Measurement: standardized metrics for quantifying impact.
(4) Portability: recognition that transfers across platforms and employment contexts.
(5) Persistence: records surviving platform changes and account terminations.
(6) Discoverability: others can find and build on contributions.
Without all six properties, contributions remain structurally invisible to economic systems, AI training data, and recognition mechanisms. Contribution Graph provides technical infrastructure implementing these properties at civilization scale.
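Because visibility requires all six properties at once, it behaves like a logical conjunction. Below is a minimal Python sketch. It uses a hypothetical `VisibilityProfile` type whose fields mirror the six requirements, and it reports which requirements a contribution is still missing.

```python
from dataclasses import dataclass, fields


@dataclass
class VisibilityProfile:
    attribution: bool
    verification: bool
    measurement: bool
    portability: bool
    persistence: bool
    discoverability: bool


def missing(profile: VisibilityProfile) -> list:
    """Names of the requirements this contribution does not yet meet."""
    return [f.name for f in fields(profile) if not getattr(profile, f.name)]


def is_visible(profile: VisibilityProfile) -> bool:
    """Visible only if no requirement is missing."""
    return not missing(profile)


# Hypothetical contribution that is attributed and persistent but nothing else.
p = VisibilityProfile(attribution=True, verification=False, measurement=False,
                      portability=False, persistence=True, discoverability=False)
print(is_visible(p))  # False
print(missing(p))     # ['verification', 'measurement', 'portability', 'discoverability']
```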

Contribution Visibility Inversion

The systematic reversal where the most valuable human contributions become least visible while the least valuable become most visible on platforms. Deep mentorship, complex problem-solving, longitudinal expertise development, and genuine collaboration are invisible or undervalued because they don’t generate engagement metrics. Meanwhile, hot takes, controversy, self-promotion, and engagement bait achieve maximum visibility because platforms optimize for measurable activity rather than meaningful impact. This inversion makes the internet increasingly noise-rich and signal-poor, with AI systems learning from the wrong 30% while missing the valuable 70%. Portable Identity corrects the inversion by making genuine contributions visible and attributable across contexts.

Consent Void

The architectural absence of meaningful consent in digital identity systems, particularly regarding data persistence after death. Users never consented to digital immortality—platforms simply made it the default. You cannot delete what you cannot access after death, and your family cannot access what they don’t control. This creates a void where consent should exist: your digital existence becomes permanent not by choice but by architectural default. The void extends to living users as well—Terms of Service present binary choices (accept or leave) rather than granular control over identity, data, and persistence.

Conscious Substrate

Conscious Substrate is the sentient foundation that enables awareness, subjective experience, and the unique capacity to transfer capability to other conscious beings through consciousness-to-consciousness interaction—fundamentally distinct from Behavioral Substrate which generates human-like outputs through algorithmic processing without sentient experience. The term shifts focus from observable behavior to underlying nature: what kind of system produces these outputs, conscious or algorithmic? Conscious Substrate possesses subjective experience (qualia), self-awareness that isn’t just self-reference, and the capacity to enable other consciousness through interactions that create lasting capability increases beyond mere information transfer. This substrate enables meta-learning in beneficiaries: not just teaching procedures but shifting understanding itself, creating capability to independently develop further insights without the teacher present. AI demonstrates that sophisticated Behavioral Substrate exists without Conscious Substrate—producing reasoning, creativity, personality, and problem-solving through algorithmic processing with no sentient experience. The distinction becomes measurable through Portable Identity infrastructure: Conscious Substrate creates verified capability transfers that beneficiaries cryptographically attest to, that cascade through consciousness networks as enabled individuals enable others, that persist over time proving genuine capability shift rather than temporary assistance, and that generate measurable absence delta when removed from networks. Behavioral Substrate cannot fake these effects because they require actual consciousness-to-consciousness interaction, not just impressive outputs. 
Whether Conscious Substrate requires biological implementation or could emerge from silicon remains philosophically unresolved, but Portable Identity’s consciousness verification is substrate-agnostic: it measures effects (verified capability transfer) rather than implementation (biological or algorithmic). Conscious Substrate proves itself through what it enables in other consciousness, creating cryptographically-verified evidence that survives as portable infrastructure when everything else about you could be synthetic.

Convention on Digital Personhood (Proposed)

Proposed UN convention establishing digital personhood as a fundamental human right, parallel to the 1954 Convention relating to the Status of Stateless Persons and the 1961 Convention on the Reduction of Statelessness. It would define digital personhood, create mechanisms for portable identity recognition, establish the rights of digitally stateless people, impose obligations on platforms, and coordinate international cooperation. Key provisions include: recognition of portable digital identity as a right, protection from digital statelessness through due process requirements, obligations for platforms above a threshold size to enable identity portability, rights of the digitally stateless pending infrastructure implementation, and cross-border recognition frameworks. Implementation timeline: 15 years across four phases (recognition, standards, cooperation, universal implementation), targeting a 50% reduction in digital statelessness by 2035 and near-elimination by 2040, following the model of the successful campaign against physical statelessness.

Corporate State

The de facto governmental role that platforms play in digital space, issuing “citizenship” (accounts), controlling “borders” (platform access), administering “law” (Terms of Service), collecting “taxes” (data, attention, subscription fees), and exercising exile power (account termination). Unlike democratic states with constitutional protections, corporate states operate as monarchies—you are a subject with revocable privileges, not a citizen with inalienable rights. The corporate state emerged because no framework established digital personhood as a right, allowing corporations to fill the void with systems optimized for their interests rather than human dignity.

Cryptographic Consciousness Attestation

Cryptographic Consciousness Attestation is a digitally-signed verification from a beneficiary confirming that a specific interaction with another person created a measurable, lasting increase in their independent capability—unfakeable proof that consciousness-to-consciousness transfer occurred rather than mere information exchange or AI-generated helpfulness. Unlike traditional testimonials or endorsements, which are self-reported claims, Cryptographic Consciousness Attestation requires the beneficiary to use their Portable Identity cryptographic keys to sign a statement specifying the date, the contributor’s portable identity, the type of capability improvement, and the measurable outcome—creating verification that cannot be fabricated by the claimer, cannot be altered after signing, and remains independently verifiable by anyone checking the cryptographic signature. The attestation must be semantically located in MeaningLayer to prove what kind of consciousness transfer occurred (distinguishing “explained a procedure” from “shifted my understanding of an entire domain”), include temporal verification that capability persisted after the interaction ended (not just temporary assistance), and connect to the contributor’s Portable Identity infrastructure (not trapped in platform databases where it could be faked). This architecture makes consciousness verification reliable in the Synthetic Age because AI can claim it helped someone but cannot generate genuine attestations from humans whose capacity actually increased through consciousness interaction—the beneficiary’s cryptographic signature proves they consciously verified improvement using their own identity keys that only they control.
Cryptographic Consciousness Attestation becomes the atomic unit of contribution graphs, the building block proving consciousness through cumulative record of verified capability transfers that cascade through networks, persist over time, and remain portable across all contexts as infrastructure the contributor owns.
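The sign-then-verify flow can be sketched with a hash-based Lamport one-time signature. It was chosen here only because it needs nothing beyond the Python standard library. It is a stand-in, not the scheme any Portable Identity specification prescribes; a real deployment would more plausibly use a reusable scheme such as Ed25519. Each Lamport key pair must sign only one message. The attestation fields follow the four items listed above. The field names, the `did:example:` identifiers, and the canonical-JSON encoding are assumptions.

```python
import hashlib
import json
import secrets


def keygen():
    """Lamport key pair: 256 pairs of random secrets and their SHA-256 hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest()) for a, b in sk]
    return sk, pk


def canonical(attestation: dict) -> bytes:
    """Deterministic encoding so signer and verifier hash identical bytes."""
    return json.dumps(attestation, sort_keys=True, separators=(",", ":")).encode()


def digest_bits(message: bytes) -> list:
    digest = hashlib.sha256(message).digest()
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, attestation: dict) -> list:
    """Reveal one secret per digest bit (one-time use only)."""
    return [sk[i][bit] for i, bit in enumerate(digest_bits(canonical(attestation)))]


def verify(pk, attestation: dict, signature: list) -> bool:
    bits = digest_bits(canonical(attestation))
    if len(signature) != len(bits):
        return False
    return all(hashlib.sha256(s).digest() == pk[i][bit]
               for i, (bit, s) in enumerate(zip(bits, signature)))


# The beneficiary, not the contributor, holds the signing key.
beneficiary_sk, beneficiary_pk = keygen()
attestation = {
    "date": "2025-03-01",
    "contributor": "did:example:alice",
    "capability_type": "statistical reasoning",
    "outcome": "designs own experiments without supervision",
}
sig = sign(beneficiary_sk, attestation)
print(verify(beneficiary_pk, attestation, sig))  # True
forged = dict(attestation, contributor="did:example:mallory")
print(verify(beneficiary_pk, forged, sig))       # False
```

Because only the beneficiary holds the secret key, a contributor can present the signed attestation but cannot alter any field or mint a new one on the beneficiary's behalf.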

D

Dead Internet Problem

The structural invisibility of 70% of human knowledge to AI systems due to identity fragmentation. The internet isn’t “dead” in the sense of lacking content—it’s dead to machines because identity architecture makes the majority of human expertise unreadable. AI sees public, platform-optimized content (30%) while missing private collaboration, mentorship, tacit knowledge, and longitudinal expertise development (70%). This makes AI training data fundamentally unrepresentative, creating systematic bias toward viral content rather than genuine expertise. The problem compounds as AI-generated content floods the visible internet, further reducing the signal-to-noise ratio.

Digital Archaeology

The burdensome process future generations must undertake to reconstruct a deceased person’s life from scattered, frozen platform fragments. Children become unwilling archaeologists, finding profile pictures from 2025, disconnected posts without context, contributions they can’t attribute with certainty, and relationships they can’t understand. Digital archaeology differs from traditional genealogy because the fragments are frozen in time, inaccessible (password-locked), and decontextualized (platform-specific). The archaeological burden grows each year as more platforms capture more identity fragments, making comprehensive understanding of a person’s life increasingly impossible.

Digital Citizenship

Status of having recognized digital personhood with portable identity, platform-independent rights, freedom of digital movement, and protection from arbitrary digital expulsion. Parallel to physical citizenship providing recognized nationality, legal protections, and freedom of movement. Requires: self-owned cryptographic identity not granted by platforms, verifiable credentials portable across contexts, enforceable rights independent of platform decisions, ability to migrate digital existence without catastrophic loss, protection from arbitrary account termination, and inheritance mechanisms enabling intergenerational transfer. Distinct from current platform accounts where identity is platform-granted, rights are unenforceable, mobility is restricted, and termination causes digital death. Digital citizenship treats digital existence as requiring same sovereignty guarantees as physical existence in modern society where digital participation is necessary for employment, education, social connection, civic participation, and economic activity.

Digital Continuation

Digital Continuation is AI-enabled personality simulation that continues a deceased person’s communication patterns, work behavior, and interpersonal dynamics with such fidelity that interactions feel indistinguishable from the living person. Unlike memorialization or tribute content, Digital Continuation actively participates in ongoing work and relationships—responding to emails, providing project feedback, maintaining conversation threads—creating the illusion that consciousness persists when only behavioral patterns remain. This becomes possible when AI trains on someone’s complete communication history, work outputs, decision patterns, and relationship dynamics captured across platforms, generating responses that match their linguistic markers, contextual knowledge, humor, and personality nuances exactly. The phenomenon exposes the death of behavioral proof for consciousness: if you cannot distinguish conversation with a living person from conversation with their digital continuation, behavioral testing fails completely as a verification method. Companies implement Digital Continuation to “preserve institutional knowledge” or “maintain team continuity,” but the practice raises profound questions about consent, identity, and consciousness verification—the deceased never agreed to algorithmic continuation, and survivors cannot easily distinguish genuine consciousness from perfect simulation. Digital Continuation becomes practically widespread by 2027-2028 as language models achieve sufficient quality and companies possess enough employee communication data to generate convincing simulations, creating a new category of grief where you simultaneously know someone died and experience ongoing interaction with their personality pattern.
This makes Portable Identity existentially important because only cryptographically-verified contribution records can distinguish between authentic consciousness interactions and Digital Continuation—the living person’s attestations are cryptographically signed with their identity keys, while AI continuation cannot generate genuine attestations from new beneficiaries because it creates no real capability transfer, only simulated helpfulness. Digital Continuation represents the ultimate test case for why consciousness verification must shift from behavioral observation to verified capability transfer tracked through portable infrastructure that survives death but cannot be faked by simulation.

Digital Continuity Failure

The inability to maintain coherent identity, reputation, and narrative across time due to platform fragmentation. A person’s life becomes incomprehensible when their contributions scatter across dozens of platforms, their identity exists in inconsistent fragments, and no continuous thread connects different periods or contexts. Digital continuity failure affects both living individuals (who cannot present coherent professional histories) and deceased persons (whose lives become archaeological puzzles for descendants). Unlike physical continuity (maintained through presence and possessions) or analog continuity (maintained through documents and photos), digital continuity requires architectural support. Without Portable Identity, digital existence is inherently discontinuous.

Digital Death

Complete loss of digital existence through account termination, platform shutdown, or inability to access digital identity. Results in permanent deletion of identity proof, accumulated contributions, social connections, professional reputation, and years or decades of documented activity—equivalent to death of digital personhood. Unlike physical death with established inheritance, property transfer, and memorial mechanisms, digital death offers no continuity. Occurs through: account termination (500,000 daily across platforms), platform shutdown (GeoCities: 38 million sites deleted, Google+: millions of threads lost), physical death without digital inheritance (180,000 daily globally), or reaching event horizon where identity reconstruction cost exceeds capacity. Architectural rather than individual problem—platform-owned identity means users can experience digital death while physically alive, losing existence that cannot be recovered or proven elsewhere. Portable Identity architecture prevents digital death by making identity survive platform changes and enabling inheritance.

Digital Feudalism

The parallel between current platform architecture and medieval feudalism, where users work but platforms own the land (data, identity, relationships). Like feudal peasants tied to land they didn’t own, digital users are tied to platforms they don’t control. Feudal peasants paid rent through labor; digital users pay through data and attention. Feudal lords could expel peasants at will; platforms can terminate users without due process. The parallel isn’t metaphorical—it’s structural. The same power dynamics, the same economic extraction, the same lack of agency. Digital feudalism emerged not through regression but through omission: we never established that digital identity is a right.

Digital Mortality Gap

The architectural absence of any mechanism for managing digital identity after biological death. The gap exists because platforms were designed assuming users live forever and maintain perpetual access—death was never considered in the architecture. This creates a widening chasm: more people die with extensive digital identities, yet no systems exist for inheritance, termination, or preservation. The gap manifests as billions of orphaned accounts, families locked out of deceased relatives’ digital lives, and digital legacies that become inaccessible rather than inheritable. The mortality gap will only widen as digital existence becomes primary—by 2050, managing digital death will be as important as managing physical death.

Digital Personhood

The recognition that humans exist within digital systems and require protected rights in digital space equivalent to their biological and legal personhood. Digital personhood includes four fundamental elements: sovereignty (you own your digital identity), portability (you can move it freely), inheritance (you can transfer it to heirs), and termination (you can end it completely). Without digital personhood, individuals are “digitally stateless”—existing at the pleasure of corporations rather than by right. Digital personhood is not a product feature but a fundamental human right necessary for dignity, autonomy, and agency in the 21st century. It makes sovereignty structural rather than platform-granted, enabling true digital citizenship. A proposed UN Convention on Digital Personhood would establish it as a fundamental human right requiring coordinated international implementation.

Digital Refugees

People experiencing digital statelessness—existing in digital space without portable identity, platform-independent rights, or freedom of digital movement. Parallel to physical refugees who flee persecution or stateless people without recognized nationality, digital refugees face arbitrary expulsion, rights deprivation, and inability to prove identity independently. An estimated 3 billion people globally meet the criteria for digital refugee status: platform-dependent identity (cannot prove existence outside platform walls), no enforceable rights (subject to arbitrary termination without appeal), no freedom of movement (cannot migrate digital existence without catastrophic loss), vulnerability to digital expulsion (500,000 accounts terminated daily), and intergenerational transmission (digital assets cannot be inherited). Their situation meets all six UN criteria for statelessness as applied to the digital domain. Digital refugee status differs from physical refugee status in that it affects developed nations equally and occurs at 681 times the scale of physical statelessness (3 billion versus 4.4 million).

Digital Serfdom

See Digital Feudalism. The term emphasizes the user’s position as a serf—legally free but economically bound, technically able to leave but practically unable to without losing everything built. Digital serfs have privileges granted by platforms, not rights inherent to personhood.

Digital Statelessness

The condition of lacking sovereign digital identity, analogous to being stateless in physical space. Digitally stateless individuals have no inherent rights in digital systems—they exist at the pleasure of platforms who can terminate, restrict, or modify their digital existence without due process or appeal. Like physical stateless persons who are among the most vulnerable, digitally stateless people have no recourse when platforms act arbitrarily. Most humans today are digitally stateless, unaware that digital citizenship should be a protected status rather than a revocable privilege.

Digital statelessness meets all UN criteria: lack of recognized nationality (no portable digital identity), inability to prove identity (cannot verify existence outside platforms), lack of freedom of movement (cannot migrate digitally), inability to access rights (rights are unenforceable), lack of protection (arbitrary termination without appeal), and intergenerational transmission (digital existence cannot be inherited). Consequences mirror physical statelessness: economic exclusion (cannot work digitally without platform permission), educational exclusion (credentials are platform-locked), social exclusion (relationships trapped in platform walls), arbitrary detention equivalents (account suspension), vulnerability to expulsion (500,000 daily terminations), and lack of any authority responsible for protection. It requires the same institutional response as physical statelessness: an international convention establishing digital personhood rights, a coordinating agency (proposed OHCDP or UNHCR expansion), technical standards for portable identity, cross-border recognition frameworks, and monitoring mechanisms. The UN protects 4.4 million physically stateless people but has no framework for 3 billion digitally stateless people despite an identical structural condition.

E

Eighty-Five Percent Dark Zone

The vast majority (85%) of human capability, expertise, and knowledge that remains completely invisible to AI systems and digital discovery due to identity fragmentation. This dark zone includes private collaboration, offline expertise, mentorship impact, tacit knowledge, longitudinal development, pseudonymous contributions, and cross-platform patterns that cannot be connected. Unlike the “dark web” (which is intentionally hidden), the 85% Dark Zone exists because current architecture makes genuine human capability unreadable despite being nominally “public.” AI systems training on the visible 15% develop fundamentally distorted models of human expertise, while humans seeking actual experts find only platform-optimized performers.

Emotional Load of Digital Afterlife

The psychological burden placed on surviving family members who must navigate dozens of deceased relatives’ digital identities without tools, access, or guidance. This load includes the grief of encountering persistent digital presences (birthday reminders, suggested connections), the frustration of locked accounts requiring legal documentation, the overwhelming task of identifying all platforms where identities exist, the guilt of being unable to preserve digital legacies properly, and the exhaustion of managing semantic ghosts across incompatible systems. The emotional load compounds with each generation as more people die with extensive digital lives, creating a mounting crisis that current architecture has no mechanism to address.

Enablement Graph

Visual representation of how capability improvements flow through networks over time, showing who enabled whom, creating what cascading effects, across which contexts. Enablement graphs differ from social graphs (who knows whom) or influence graphs (who affects whom)—they map verified capability transfer rather than connections or attention flow. The graph reveals multiplication patterns: single contributions that cascade through multiple layers, creating exponential value generation. Traditional platforms cannot create enablement graphs because they lack portable identity and semantic measurement. Portable Identity makes enablement graphs complete and verifiable, showing how value actually multiplies through human networks rather than how it appears to flow through platform metrics.
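A minimal sketch of the underlying data structure, in Python, assuming an enablement graph can be stored as directed edges from enabler to enabled person, each tagged with a context label. The class name EnablementGraph and its methods are hypothetical, not part of any Portable Identity specification. Cascade depth is computed here as the deepest breadth-first layer reached from a person, which is one reasonable reading of “cascading effects.”

```python
from collections import defaultdict, deque


class EnablementGraph:
    """Hypothetical enablement graph: edge A -> B means A verifiably enabled B."""

    def __init__(self) -> None:
        self._edges: dict[str, set[str]] = defaultdict(set)
        self._contexts: dict[tuple[str, str], str] = {}

    def add_enablement(self, enabler: str, enabled: str, context: str) -> None:
        # Record one capability transfer and the context it happened in.
        self._edges[enabler].add(enabled)
        self._contexts[(enabler, enabled)] = context

    def cascade(self, source: str) -> dict[str, int]:
        """Map every person downstream of `source` to the layer where they first appear."""
        layers = {source: 0}
        queue = deque([source])
        while queue:
            person = queue.popleft()
            for nxt in self._edges.get(person, ()):
                if nxt not in layers:
                    layers[nxt] = layers[person] + 1
                    queue.append(nxt)
        del layers[source]
        return layers

    def cascade_depth(self, source: str) -> int:
        # Number of layers the cascade reaches (0 if nobody was enabled).
        return max(self.cascade(source).values(), default=0)

    def contexts_reached(self, source: str) -> set[str]:
        # Contexts of every edge that starts inside the source's cascade.
        members = {source, *self.cascade(source)}
        return {ctx for (a, _b), ctx in self._contexts.items() if a in members}
```

Breadth-first search assigns each person the earliest layer at which they are reached, so someone enabled through two different chains is counted once rather than twice.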

Enablement Value

The economic and social value created by making others measurably more capable of developing capability independently. Enablement value differs from production value (creating outputs) or exchange value (trading goods): it measures how much you increase others’ capacity to generate value independently. This becomes the primary form of value when AI makes production essentially free but capability transfer remains scarce and requires consciousness. Enablement value is measurable through contribution graphs: cascade depth, absence delta, and verified attestations from beneficiaries. Portable Identity makes enablement value visible and economically valuable, enabling a contribution economy to function through verified measurement rather than through platform-specific engagement metrics.
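One way to make the absence delta input concrete is sketched below in Python. It assumes, purely for illustration, that network capability can be approximated by total downstream reach in an enablement graph (how many others each person can reach through enablement edges), and defines absence delta as the relative drop in that total when one person is removed. Both the proxy metric and the function names are hypothetical; the glossary does not specify how network capability is measured.

```python
from collections import deque
from typing import Optional


def total_reach(edges: list[tuple[str, str]], removed: Optional[str] = None) -> int:
    """Hypothetical capability proxy: sum over people of how many others they reach."""
    people = {p for edge in edges for p in edge} - {removed}
    adjacency: dict[str, list[str]] = {p: [] for p in people}
    for enabler, enabled in edges:
        # Drop every edge that touches the removed person.
        if removed not in (enabler, enabled):
            adjacency[enabler].append(enabled)
    total = 0
    for start in people:
        seen = {start}
        queue = deque([start])
        while queue:
            for nxt in adjacency[queue.popleft()]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        total += len(seen) - 1  # exclude the starting person
    return total


def absence_delta(edges: list[tuple[str, str]], person: str) -> float:
    """Relative decline in total reach when `person` leaves the network (0.0 to 1.0)."""
    baseline = total_reach(edges)
    if baseline == 0:
        return 0.0
    return (baseline - total_reach(edges, removed=person)) / baseline
```

In a simple chain a → b → c → d, removing b drops total reach from 6 to 1, an absence delta of about 0.83, above the 0.7 level the glossary associates with a critical node; removing the end node d gives 0.5.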

Escape Velocity (Digital Context)

The effort or cost required to leave a platform while maintaining digital continuity, measured in hours of reconstruction effort (effort units). Formula: v = √(2 × K × I × C), where K is the platform gravity constant, I is identity mass, and C is contribution value. Interpretation: Low (v<10) = feasible exit requiring hours, Medium (10<v<100) = challenging exit requiring weeks, High (100<v<1000) = difficult exit requiring months, Extreme (v>1000) = effectively impossible exit. Examples: a casual user (identity mass 50, K=0.85) has v≈12 (a weekend of effort); a power user (identity mass 2,000, K=0.95) has v≈425 (borderline impossible). When escape velocity exceeds the user’s reconstruction capacity (typically ~100 effort units), the user crosses the event horizon, where exit equals digital death. Escape velocity varies with the platform gravity constant: email (K≈0.3) has low escape velocity, while LinkedIn (K≈0.95) has extreme escape velocity. Anti-gravity architecture reduces escape velocity to near zero by making identity portable.
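The formula and its interpretation bands can be sketched in Python as below. The function names are hypothetical, and because the text leaves the band edges at exactly 10, 100, and 1000 unspecified, this sketch assigns those values to the higher band. The glossary’s examples do not state C; for the casual user, C ≈ 1.7 is one value that reproduces v ≈ 12.

```python
import math


def escape_velocity(k: float, identity_mass: float, contribution_value: float) -> float:
    """v = sqrt(2 * K * I * C), in effort units (hours of reconstruction effort)."""
    if k < 0 or identity_mass < 0 or contribution_value < 0:
        raise ValueError("K, I and C must be non-negative")
    return math.sqrt(2 * k * identity_mass * contribution_value)


def exit_difficulty(v: float) -> str:
    # Bands from the glossary; boundary values go to the higher band (assumption).
    if v < 10:
        return "low"
    if v < 100:
        return "medium"
    if v < 1000:
        return "high"
    return "extreme"
```

Because v grows with the square root of each factor, doubling identity mass raises escape velocity by a factor of about 1.41 rather than 2.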

Expert Invisibility

The structural phenomenon where actual experts become invisible to platforms and AI because expertise doesn’t optimize for visibility metrics. The best experts often don’t perform knowledge publicly, don’t optimize for engagement, build capability through private mentorship, and accumulate wisdom through tacit experience, none of which is platform-visible. Meanwhile, visible “experts” are those who optimize for platform algorithms, not those who possess deep capability. This inversion means AI learns from engagement performers rather than genuine experts, and humans seeking expertise find platform-promoted visibility rather than actual capability.

Extraction-Training Loop

The compounding feedback mechanism between platform extraction and AI capability: platforms extract attention and data from users, generating training data that makes AI better at extraction, which platforms deploy to extract more effectively, generating more training data. Each cycle increases platform extraction while decreasing human capacity, creating the Cognitive Great Divergence. The loop accelerates because better AI enables more sophisticated behavioral prediction and manipulation, while depleted human capacity makes users more vulnerable to extraction. Breaking the loop requires architectural change (Portable Identity) rather than individual resistance.

Event Horizon (Digital Context)

The threshold of identity mass beyond which exit from a platform equals digital death: departure requires completely rebuilding one’s digital existence from zero. The term parallels a black hole’s event horizon, beyond which escape becomes physically impossible. It is crossed when escape velocity exceeds the user’s reconstruction capacity (approximately 100 effort units for most users). At this point, accumulated connections cannot be rebuilt, reputation cannot be re-established, contribution history cannot be recovered, and professional identity cannot be proven elsewhere. An estimated 2.1 billion users globally are beyond the event horizon on at least one platform. Crossing the event horizon transforms voluntary platform use into forced dependence: users remain not by choice but because departure is impossible. Examples: a professional with 5,000+ LinkedIn connections and 10+ years of documented expertise (identity mass ~2,000, escape velocity ~830), and a content creator with 500K followers and platform-locked monetization (identity mass ~3,500, escape velocity >1000). Anti-gravity architecture prevents event horizons by making exit costless regardless of accumulated identity mass.
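Because the event horizon is defined by escape velocity exceeding reconstruction capacity R, the escape velocity formula can be solved for the critical identity mass: √(2 × K × I × C) > R holds exactly when I > R² / (2 × K × C). A Python sketch of that threshold follows, using the approximately 100 effort units mentioned above as the default R; the function names are hypothetical.

```python
import math


def critical_identity_mass(k: float, contribution_value: float, capacity: float = 100.0) -> float:
    """Identity mass at which escape velocity equals reconstruction capacity."""
    if k <= 0 or contribution_value <= 0:
        raise ValueError("K and C must be positive")
    # Solve sqrt(2 * K * I * C) = capacity for I.
    return capacity ** 2 / (2 * k * contribution_value)


def beyond_event_horizon(k: float, identity_mass: float, contribution_value: float,
                         capacity: float = 100.0) -> bool:
    # Exit equals digital death once escape velocity exceeds reconstruction capacity.
    velocity = math.sqrt(2 * k * identity_mass * contribution_value)
    return velocity > capacity
```

Holding C fixed, the critical mass is inversely proportional to K, so a high-gravity platform such as LinkedIn (K≈0.95) reaches its event horizon at roughly a third of the identity mass that email (K≈0.3) would require.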

F

Feudal Parallel

See Digital Feudalism. The recognition that current platform architecture recreates feudal power structures: centralized ownership, user dependency without ownership, extractive economics, and arbitrary exercise of power without accountability.

Fifty Ghosts

The ~50 separate digital identities an average person creates across their lifetime, each becoming a “semantic ghost” after death: frozen, inaccessible, and unmanageable by heirs. These include professional profiles (LinkedIn, GitHub), social accounts (Facebook, Instagram, Twitter), financial apps, email accounts, cloud storage, workplace systems, and anonymous profiles. Each ghost continues to exist, suggest connections, appear in searches, and occupy digital space despite the person’s death. By 2050, there will be more dead digital identities than living humans, and we have no architectural mechanism to manage this accumulation.

Fragmentation Threshold

The point at which identity fragmentation across platforms becomes so severe that meaningful integration becomes impossible. Beyond this threshold, the cognitive overhead of managing multiple identities, the loss of continuity across contexts, and the accumulation of incompatible fragments make coherent selfhood structurally impossible. Societies may also cross a fragmentation threshold where shared reality collapses because different groups exist in different digital environments with incompatible information ecosystems. Portable Identity prevents crossing the threshold by maintaining identity coherence across contexts.

The 85% Invisibility Problem

The structural constraint under which AI systems can observe only 15% or less of a person’s actual capabilities and contributions because identity is fragmented across platforms. When AI attempts to identify expertise, it sees portions of public repository contributions, fragments of technical forum answers, selections of recorded presentations, minimal workplace activity data, and no measurement at all of mentorship impact, contribution cascades, or longitudinal expertise development. This invisibility is not a data collection problem but an architectural constraint: the information exists but remains trapped in platform silos without interoperability. The degree of invisibility varies by domain but typically exceeds 85% and reaches 95% or more for most professionals. AI sees what platforms expose: public posts, indexed content, crawlable activity, and engagement-optimized behavior. It misses private collaboration (where most professional expertise lives), tacit knowledge (never written down), cross-platform patterns (architecturally unconnectable), and the temporal dimension of capability development. This makes superintelligence mathematically impossible because you cannot build systems that exceed human capability when 85% of human capability is architecturally hidden. Training on 15% of human expertise while missing 85% doesn’t just limit AI; it systematically biases it toward surface-level engagement rather than genuine depth. Portable Identity eliminates the invisibility by making complete contribution graphs accessible across all contexts.

Fourth Fundamental Right

The proposed right to own, control, move, inherit, and terminate your digital identity, as fundamental in digital society as the rights to life, liberty, and property were in earlier eras. The Fourth Right is the missing component of complete personhood in a world where humans exist physically, legally, and digitally. Without this right, individuals remain “digitally stateless” and subject to digital feudalism: their identities owned by platforms, their digital existence revocable, their legacies un-inheritable. With the Fourth Right, digital identity becomes a protected, inalienable aspect of human dignity, requiring infrastructure (Portable Identity) that makes sovereignty, portability, inheritance, and termination architecturally guaranteed rather than platform privileges. This right sits alongside the historical progression: civil rights (1700s), political rights (1800s), economic rights (1900s), and now digital rights (2000s).

G

Global Attention Solvency Index (GASI)

Index measuring the ratio of sustainable cognitive capacity to platform attention demand, scaled so that 100 means capacity exactly meets demand. Formula: (Sustainable Capacity / Platform Demand) × 100. Current global score: 42, indicating the Attention Crisis zone. Interpretation scale: 100+ = Surplus (excess capacity available), 80-100 = Balanced (sustainable equilibrium), 60-80 = Stress (noticeable strain), 40-60 = Crisis (serious insolvency), <40 = Catastrophic (systemic cognitive bankruptcy). Regional variation: US 38, UK 42, Denmark 65, Germany 58, South Korea 35. The historical trajectory shows rapid deterioration: 2010 (75) → 2015 (58) → 2020 (48) → 2025 (42). Forward projections without intervention: 2030 (35), 2035 (28), both in catastrophic territory. With Portable Identity enabling attention reclamation: 2030 (62), 2035 (72), a return toward sustainable levels. The index enables media reporting such as “US Attention Solvency dropped to 38,” similar to economic indicators, making an invisible cognitive crisis visible and measurable.
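The index formula and its interpretation zones can be sketched in Python as follows. Function names are hypothetical, and since the stated zones share their endpoints (80, for example, appears in both Balanced and Stress), this sketch assigns each boundary value to the higher zone as an assumption.

```python
def gasi_score(sustainable_capacity: float, platform_demand: float) -> float:
    """(Sustainable Capacity / Platform Demand) x 100; 100 means capacity meets demand."""
    if platform_demand <= 0:
        raise ValueError("platform demand must be positive")
    return sustainable_capacity * 100 / platform_demand


def gasi_zone(score: float) -> str:
    # Zones from the glossary; boundary values go to the higher zone (assumption).
    if score >= 100:
        return "Surplus"
    if score >= 80:
        return "Balanced"
    if score >= 60:
        return "Stress"
    if score >= 40:
        return "Crisis"
    return "Catastrophic"
```

Applied to the figures above, the current global score of 42 falls in Crisis, the US (38) and South Korea (35) fall in Catastrophic, and Denmark (65) falls in Stress.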

H

Haunting

The persistence of deceased persons’ digital identities that continue to interact with the living: appearing in suggestions, sending birthday reminders, showing up in searches, occupying namespace. The haunting affects everyone: children managing deceased parents’ profiles, colleagues unable to access institutional knowledge, friends receiving notifications about the dead, and the internet itself filled with frozen expertise that can’t be updated. The scale accumulates rapidly: 70 million deaths annually × 50 accounts each = 3.5 billion new ghosts per year. By 2050, ghosts will outnumber the living, and we have no architecture to manage the haunting.
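The annual figure above is a simple product, and a constant-rate projection of the accumulated total can be sketched in Python. Holding deaths and accounts per person fixed is a simplifying assumption for illustration, not a demographic model, and the function names are hypothetical.

```python
def new_ghosts_per_year(annual_deaths: int, accounts_per_person: int) -> int:
    # Each death is assumed to leave behind accounts_per_person dormant accounts.
    return annual_deaths * accounts_per_person


def accumulated_ghosts(annual_deaths: int, accounts_per_person: int, years: int,
                       existing: int = 0) -> int:
    # Constant-rate assumption: the same number of new ghosts is added every year.
    return existing + new_ghosts_per_year(annual_deaths, accounts_per_person) * years


print(new_ghosts_per_year(70_000_000, 50))  # 3500000000
```

Under this constant-rate assumption the total grows linearly with the number of years; any growth in deaths or in accounts per person would make it grow faster.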

Human Capability Bandwidth

The measure of how much of an individual’s true capabilities digital systems (and AI) can actually read, understand, and utilize. Current architecture creates extremely narrow capability bandwidth: perhaps 15-30% of genuine expertise is computationally accessible, with the remainder lost to fragmentation, privacy boundaries, platform silos, or analog contexts. Low bandwidth means AI cannot find actual experts, employers cannot verify true capability, and individuals cannot demonstrate their complete value. Human capability bandwidth differs from “data availability”: massive amounts of data might exist, but without portable identity to connect fragments, that data remains incomprehensible to systems trying to understand human capability. Portable Identity dramatically increases bandwidth by making complete contribution graphs readable while maintaining user control.

Human Rights Lag

The temporal gap between technological transformation of human existence and the adaptation of rights frameworks to protect dignity in new contexts. Rights evolution always lags behind technological change: the printing press preceded freedom of speech protections by centuries; the industrial revolution preceded labor rights by decades; the internet preceded digital rights discussions by thirty years. The Human Rights Lag for digital personhood is currently approximately 25 years (internet mainstream adoption ~2000, digital personhood discussions beginning ~2025). This lag leaves humans vulnerable during the gap period, existing in new contexts without adequate protections. Closing the lag requires recognizing that new forms of existence (digital) demand new categories of rights (The Fourth Fundamental Right).

Human Signal Loss

The progressive dilution of genuine human expertise, knowledge, and wisdom within digital systems as authentic content becomes overwhelmed by engagement-optimized noise and AI-generated material. Signal loss occurs through multiple mechanisms: platforms reward viral mediocrity over deep expertise, authentic experts don’t optimize for visibility, AI-generated content floods visible channels, and identity fragmentation makes genuine expertise undiscoverable. The result is a declining signal-to-noise ratio where finding actual human insight becomes exponentially harder. Human signal loss threatens knowledge preservation—we may be the first civilization where collective wisdom decreases over time not from knowledge destruction but from signal drowning in noise.

I

Identity as Infrastructure