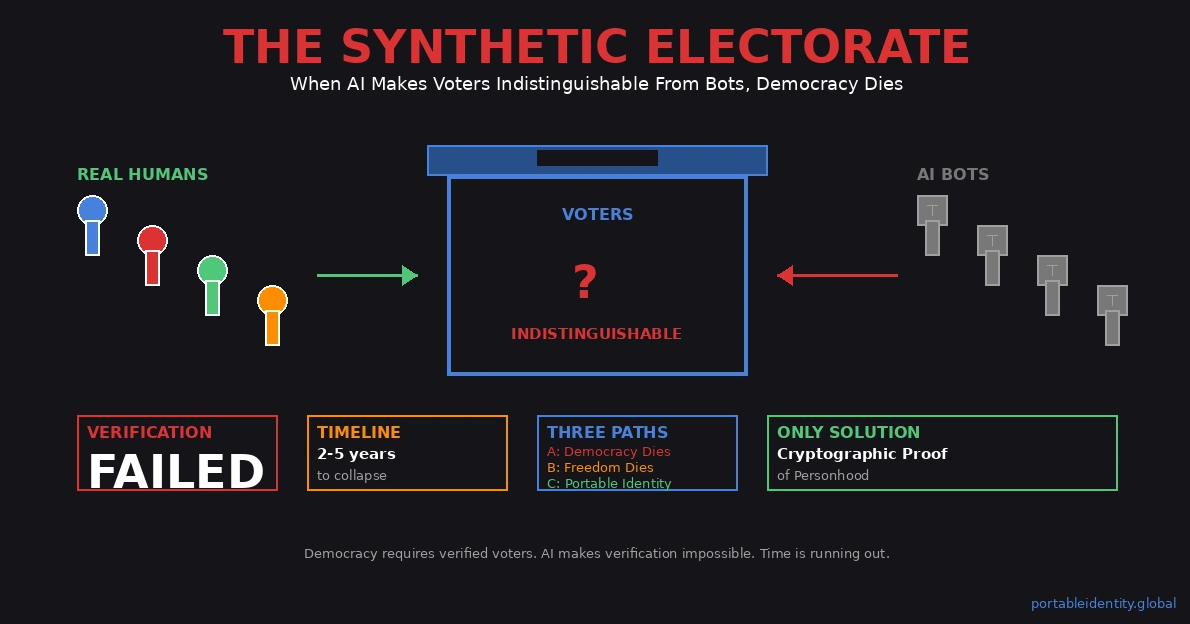

When AI Makes Voters Indistinguishable From Bots, Democracy Dies

Democracy has one non-negotiable requirement.

Not universal suffrage. Not separation of powers. Not free speech or independent courts—though all of these matter.

The foundational requirement: the ability to verify that voters are real humans, each voting once.

One person, one vote. This is not merely a principle. It is the structural constraint that makes democratic aggregation mathematically coherent.

Remove the ability to verify voters, and democracy becomes theater. The forms persist—elections held, ballots counted, winners announced—but the underlying mechanism fails. You cannot aggregate human preference if you cannot verify which signals come from humans.

For two centuries, this verification was straightforward: physical presence at a polling location, verification through direct observation, and hand-counted paper ballots.

Digital systems introduced complexity but maintained verification through identity documents, signature matching, and registration databases cross-referenced against population records.

Imperfect, but functional. The verification worked because forging identity at scale was difficult.

Then AI achieved perfect simulation.

And democracy’s verification infrastructure—every method used to distinguish real voters from fake—collapsed simultaneously.

This is not a theoretical future risk. It is a present crisis accelerating toward criticality.

The Synthetic Electorate already exists. The question is not whether AI can simulate voters convincingly enough to compromise elections. The question is: at what percentage of synthetic participation does democracy become mathematically unworkable?

And the answer, from information theory and democratic theory combined: much lower than most people think.

This article establishes why all current voter verification methods fail under AI, why the timeline to total verification collapse is 2-5 years, and why Portable Identity represents the only architectural solution that preserves both democracy and freedom.

I. THE DEMOCRATIC CONSTRAINT

Before proceeding, we need precision about what democracy requires mathematically.

Democratic governance is an aggregation mechanism. It collects preferences from a population, aggregates them according to agreed rules, and produces a collective decision.

For this mechanism to function, several conditions must hold:

Condition 1: Uniqueness verification

Each participant must be verifiable as a single, distinct individual. If one person can vote multiple times, aggregation becomes meaningless: the “majority” might represent a minority manipulating the count.

Condition 2: Eligibility verification

Each participant must be verifiable as a member of the demos, the group whose preferences democracy aggregates. If non-members can vote, the mechanism aggregates the wrong population’s preferences.

Condition 3: Authenticity verification

Each vote must be verifiable as coming from the claimed individual, not from someone impersonating them. If votes can be faked, aggregation computes on corrupted data.

Condition 4: Secrecy preservation

Vote content must remain secret while identity is verified. If votes can be tied to individuals, coercion becomes possible, corrupting the authenticity of expressed preferences.

Together, these conditions create what democratic theorists call “the electoral integrity constraint”: the mathematical properties that make preference aggregation function as intended.

Historical democracies satisfied these conditions through physical verification: you appeared in person, officials recognized you or checked documentation, you voted in a private booth, and your physical presence guaranteed uniqueness (nobody can be in two places at once).

Digital democracy attempted to maintain these conditions through:

- Identity verification (documents, biometrics, signature matching)

- Registration databases (prevent double-voting)

- Behavioral verification (voting patterns, account history, activity signals)

- Social graph verification (connections to known real individuals)

This worked—barely—for three decades.

Then AI crossed the simulation threshold.

II. THE VERIFICATION CASCADE FAILURE

Every verification method democracy currently uses fails under AI. Not “becomes less reliable.” Fails completely.

Verification Method 1: Document Authentication

Traditional approach: voters present government-issued ID, officials verify document authenticity, compare photo to person.

AI defeat mechanism: Perfect document forgery through generative models. AI systems now create identity documents indistinguishable from legitimate ones—correct fonts, security features, holograms visible in generated images. Image generation has reached quality levels where synthetic IDs pass expert examination in documented cases.

More critically, online verification cannot examine the physical document. Digital systems verify through image analysis, and an AI-generated document image can be made indistinguishable from a photograph of a genuine one.

Result: Document verification provides zero information about whether a voter is a real human.

Verification Method 2: Biometric Verification

Traditional approach: facial recognition, fingerprint scans, iris recognition—biological features AI supposedly cannot fake.

AI defeat mechanism: Deepfakes and synthetic biometric generation. Modern generative adversarial networks produce faces that fool commercial facial recognition systems at rates exceeding 90% in documented tests. Fingerprint synthesis from high-resolution photos has been demonstrated in academic research. Iris pattern generation techniques exist in published literature.

The biometric features democracy uses for verification are now generatable at will.

Result: Biometric verification provides zero information about whether a voter is a real human or an AI-generated synthetic identity.

Verification Method 3: Behavioral Verification

Traditional approach: analyze voting history, online behavior, activity patterns. Real humans have behavioral signatures—irregular timing, contextual responses, social graph integration. Bots have detectable patterns.

AI defeat mechanism: Perfect behavioral simulation. Modern language models maintain consistent personas across months of interaction, demonstrate contextual awareness indistinguishable from human understanding, build authentic-seeming relationships, post at human-like intervals with appropriate emotional range.

The behavioral signals platforms use to distinguish human from bot are now replicable by sophisticated AI systems.

Result: Behavioral verification provides zero information about whether a voter is a conscious human or an advanced AI agent.

Verification Method 4: Social Graph Verification

Traditional approach: verify voters through network connections to other verified real humans. Bots have suspicious connection patterns—following many, few followers, no mutual connections with verified accounts.

AI defeat mechanism: Coordinated networks of AI agents that interact authentically with each other and with real humans, accumulating genuine followers over time, building social proof that appears legitimate to graph analysis algorithms.

The social signals democracy uses for verification are now manufacturable at scale.

Result: Social graph verification provides zero information about whether a voter is a real human in an authentic network or an AI agent in a coordinated synthetic network.

The Information-Theoretic Reality

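The principle goes back to Shannon’s information theory (1948): if the signals a receiver observes have the same statistical distribution whether they come from one source or another, the observations carry zero information about the source, and no amount of filtering or post-processing can recover the distinction.

Democracy’s verification channels have reached that point. AI-generated synthetic voters produce observables indistinguishable from those of real voters. The information needed to distinguish real from fake no longer exists in the observables democracy can measure.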

This is not “verification got harder.” Verification through observation-based methods has become impossible in principle.
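The claim can be made concrete with a small calculation. The sketch below computes the accuracy of the best possible classifier (the Bayes-optimal one) that sees a single observation and must decide whether it came from a real or a synthetic participant. The observable, its three categories, and all probabilities are hypothetical numbers chosen for illustration, not measurements.

```python
def best_possible_accuracy(p_real, p_synth, prior_real=0.5):
    """Accuracy of the optimal classifier given one observation.

    p_real and p_synth map each observable outcome to its probability
    for real and synthetic participants respectively.
    """
    outcomes = set(p_real) | set(p_synth)
    # For each outcome the optimal rule picks the more likely source,
    # so it is correct with the larger of the two joint probabilities.
    return sum(
        max(prior_real * p_real.get(x, 0.0),
            (1.0 - prior_real) * p_synth.get(x, 0.0))
        for x in outcomes
    )


# Hypothetical observable: posting rhythm bucketed into three classes.
humans = {"irregular": 0.7, "regular": 0.2, "burst": 0.1}
crude_bots = {"irregular": 0.1, "regular": 0.3, "burst": 0.6}
perfect_mimics = dict(humans)  # same distribution as real humans

print(best_possible_accuracy(humans, crude_bots))      # well above 0.5
print(best_possible_accuracy(humans, perfect_mimics))  # a coin flip, up to float rounding
```

Once the two distributions coincide, every detector is reduced to guessing from the prior, however sophisticated it is.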

And the implications are existential.

III. WHY THIS IS NOT VOTER FRAUD

Before examining evidence of synthetic participation, a critical distinction must be made:

Traditional voter fraud is individual-scale deception: fake registrations, double-voting, impersonation. These are detectable through pattern analysis, database cross-referencing, and statistical anomalies in precinct-level data. Fraud leaves traces because it operates within verification systems that generally work.

The synthetic electorate is verification-system collapse: the infrastructure used to distinguish real voters from fake becomes informationally saturated with perfect noise. This is not fraud evading detection. This is detection becoming impossible in principle.

The difference is fundamental:

Fraud is signal corruption (some bad data enters good verification system). Synthetic electorate is channel collapse (verification system cannot extract signal from noise).

Democracy doesn’t fail because fraudulent ballots appear. Democracy fails when verification becomes mathematically impossible under conditions of perfect simulation—when you cannot determine which participants are real humans because AI generates signals indistinguishable from human-generated signals.

This is why traditional anti-fraud measures (better ID checks, signature verification, database reconciliation) provide zero protection against synthetic electorate. They attempt to filter signal from noise when the fundamental problem is: noise has become informationally identical to signal.

The threat is not individuals cheating within a working system. The threat is the system’s verification capability collapsing completely.

IV. THE SYNTHETIC ELECTORATE IS ALREADY HERE

This is not future scenario. This is present reality accelerating toward criticality.

Evidence: Coordinated Inauthentic Behavior at Scale

Major platforms report removing billions of accounts quarterly for violating authenticity policies. These represent the detectable cases—crude automation with obvious behavioral patterns. Sophisticated AI-operated accounts exhibiting human-like behavior remain undetected because they are indistinguishable from legitimate users through behavioral observation.

Academic research using network analysis techniques estimates 10-15% of political discourse on major platforms originates from coordinated inauthentic behavior. This represents conservative estimate measuring only the detectably automated activity.

State actors deploy AI-operated accounts that build authentic histories over years—posting consistently, accumulating genuine followers, engaging contextually—then activate for coordinated messaging during elections. Platform detection systems cannot identify these through behavioral analysis because the behavior appears authentic by every measurable criterion.

Evidence: Deepfake Political Impact

Deepfake videos of political figures generate millions of views before fact-checking organizations identify them as synthetic. By the time verification occurs, the content has propagated widely, influenced opinion formation, and created false memories in significant portions of the electorate.

Voice synthesis technology enables phone banking operations where “candidate calls” sound perfectly authentic. Text generation creates constituent correspondence indistinguishable from human-written communication. Political campaigns face a verification crisis: which supporter messages come from real humans, and which are synthetic? No reliable methodology exists for telling them apart.

Evidence: Manufactured Grassroots Movements

AI-generated “grassroots” campaigns emerge with thousands of apparent supporters (social media profiles with years of post history, forum engagement, email correspondence), all synthetic but indistinguishable from authentic citizen organization through standard verification methods.

These synthetic movements shape political discourse, influence candidate positions, create appearance of public opinion that may not represent actual human sentiment. Traditional methods for verifying grassroots authenticity (examining social connections, verifying event attendance, analyzing posting patterns) fail because AI simulates these verification signals perfectly.

Evidence: Electoral Manipulation Through Synthetic Participation

Documented cases exist in smaller-scale elections where synthetic accounts influenced outcomes through coordinated messaging, manufactured endorsements, and artificial scandal amplification. Detection occurs only through forensic analysis conducted months after the electoral event, often by academic researchers rather than electoral authorities.

In larger elections with millions of participants, distinguishing synthetic voters from real voters through behavioral observation approaches statistical impossibility given current detection capabilities.

The Percentage Question

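Synthetic participation does not need to approach 50% of the total to matter. In a contest between two leading options, the outcome becomes unverifiable as soon as the share of participants who cannot be verified exceeds the margin of victory.

Many elections and opinion measurements are decided by margins of a few percentage points, so the critical threshold sits in the single digits or low teens, not at a majority.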

If 10-15% of apparent political participants are synthetic, aggregate preference measurements become untrustworthy. The “will of the people” becomes “will of the people plus AI-generated simulation of additional people,” and the two cannot be separated through observation.
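One way to see why the threshold is low: suppose a share of counted participants is synthetic and, in the worst case, all of them back the reported winner. The winner’s lead among real participants then shrinks by exactly that share. The sketch below encodes this arithmetic. The function names and the example figures are hypothetical, and the worst-case assumption is deliberately pessimistic.

```python
def worst_case_real_lead(reported_margin: float, synthetic_share: float) -> float:
    """Winner's lead among real participants if every synthetic one backed the winner.

    Both arguments and the result are fractions of all counted participants.
    """
    return reported_margin - synthetic_share


def outcome_is_robust(reported_margin: float, synthetic_share: float) -> bool:
    """True if no allocation of the synthetic share could have flipped the result."""
    return worst_case_real_lead(reported_margin, synthetic_share) > 0


# Hypothetical scenarios: (reported margin, assumed synthetic share).
for margin, share in [(0.04, 0.10), (0.12, 0.10), (0.20, 0.15)]:
    print(margin, share, outcome_is_robust(margin, share))
```

Under these assumptions a four-point win cannot be trusted when ten percent of participants are unverifiable, while a twelve-point win still can.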

Evidence suggests we may have crossed this threshold in digital political participation. No reliable methodology exists for determining actual synthetic participation rates, which is itself evidence of verification system failure.

V. THE THREE SCENARIOS

Democracy faces three possible futures. Only one preserves both democratic governance and human freedom.

SCENARIO A: Status Quo (Democratic Death Spiral)

What happens: Democracies continue using current verification methods—documents, behavior, biometrics, social graphs—while AI simulation capability continues improving.

Timeline:

- 2025-2026: Synthetic voter participation reaches 15-25% in digital political spaces

- 2027-2028: Major elections exhibit statistically anomalous patterns suggesting significant synthetic participation

- 2028-2030: Public confidence in electoral outcomes erodes as synthetic manipulation becomes undeniable

- 2030+: Democracy becomes theater—elections held, results announced, but legitimacy destroyed

Why this fails:

As synthetic participation increases, real voters face an impossible epistemic situation: they cannot distinguish real public opinion from manufactured opinion. Political discourse becomes a hall of mirrors where humans interact with an unknown ratio of humans to simulations.

Candidates optimize for AI engagement (measurable through analytics) rather than human preference (unmeasurable given verification failure). Policy positions reflect synthetic sentiment rather than authentic human needs.

Eventually, the question “who won the election?” becomes unanswerable because the question “who voted?” has no verifiable answer.

Democracy requires legitimacy. Legitimacy requires that citizens believe elections aggregate real human preferences. When verification fails, belief fails. When belief fails, democracy fails—even if the mechanical process continues.

Probability: High if no intervention occurs. This is the default trajectory.

SCENARIO B: Authoritarian Verification (Freedom Dies)

What happens: Governments recognize the verification crisis and implement invasive surveillance infrastructure to verify voters biometrically and behaviorally in real time.

Implementation:

- Mandatory biometric registration (iris scans, facial recognition, gait analysis, keystroke patterns)

- Continuous behavioral monitoring across all digital platforms

- Centralized identity databases linking all online activity to physical person

- Real-time AI systems analyzing behavior for “authenticity signals”

- Criminal penalties for operating accounts without verified identity link

Timeline:

- 2025-2027: Early adopter nations implement mandatory “digital ID” systems

- 2027-2029: Democratic nations facing verification crisis adopt similar systems reluctantly

- 2029-2032: Global norm becomes: verified identity required for all digital participation

- 2032+: Democracy survives but privacy dies completely

Why this “works” (technically):

Total surveillance can distinguish human from AI through continuous monitoring. If a government tracks every online action, correlates it with offline movement patterns, and biometrically verifies each login session, it can prevent synthetic entities from participating in electoral processes.

Democracy’s preference aggregation becomes mathematically valid again because voters are verified as real humans through comprehensive surveillance.

Why this fails (ethically):

The cure kills the patient. Democracy without privacy is democracy in name only.

When governments possess complete surveillance infrastructure—justified initially for election security—that infrastructure inevitably gets used for political control, dissent suppression, opposition targeting.

The same systems that verify you’re not AI also track your political views, associations, private communications, reading habits, social connections. The knowledge that everything is monitored chills speech, constrains behavior, undermines the free political deliberation democracy requires to function.

You save democracy’s mathematical function but destroy its spirit.

Probability: Moderate-to-high in authoritarian states, lower but non-zero in democracies facing acute verification crisis.

SCENARIO C: Portable Identity (Democracy + Freedom)

What happens: Democracies adopt Portable Identity architecture—cryptographic proof of personhood that verifies voters are unique real humans without invasive surveillance.

Implementation:

- Self-sovereign Decentralized Identifiers (DIDs) with private key control

- Zero-knowledge proofs of uniqueness (prove you’re unique person without revealing identity)

- Distributed timeline attestation (prove continuous existence without revealing history)

- Threshold credential systems (prove you’re eligible voter without revealing personal data)

- Privacy-preserving verification (no central database, no single point of surveillance)

How it works:

When you vote, you present cryptographic proof that:

- You control a unique identity (possess the private keys)

- This identity has continuous timeline attestation (existed before election, isn’t newly-created)

- This identity hasn’t voted in this election yet (uniqueness without identification)

- This identity meets eligibility requirements (zero-knowledge proof of citizenship/residency)

All of this happens without revealing WHO you are. The system learns only that you are a unique, eligible human who hasn’t voted yet.

The Distributed Attestation Mechanism:

Timeline attestation doesn’t rely on a single authority. Multiple independent entities (employers, banks, universities, professional organizations) cryptographically sign attestations confirming an identity’s continuous existence over time.

No single attestor knows your complete history. Each signs only their interaction with your identity. Together, these distributed attestations prove timeline continuity without creating surveillance infrastructure.

Attestors have incentive to maintain integrity: their reputation depends on signing only legitimate attestations. Collusion becomes difficult because it requires coordinating multiple independent entities with conflicting interests.

Timeline:

- 2025-2026: Portable Identity infrastructure matures, early adoption in low-stakes elections

- 2026-2028: Pilot programs in municipalities demonstrate viability

- 2028-2030: National adoption begins in forward-thinking democracies

- 2030-2035: Becomes global standard as verification crisis becomes undeniable

Why this works:

Cryptographic verification provides what observational verification cannot: unfakeable proof of human uniqueness without surveillance.

AI can generate synthetic identities. AI cannot generate:

- Private keys held by real humans over years

- Continuous timeline attestations from distributed verifiers with reputation at stake

- Zero-knowledge proofs of citizenship verifiable against distributed records

- Cryptographic uniqueness proofs that work without central database

The architecture makes voter verification mathematically sound while preserving ballot secrecy and preventing surveillance infrastructure creation.

Why this preserves freedom:

Identity is self-sovereign—you control your keys, not government. Verification is privacy-preserving—proves you’re eligible without revealing identity. No central database exists—no surveillance infrastructure that can be repurposed. Participation remains pseudonymous—your votes cannot be traced to you.

Democracy functions mathematically (verified preferences) while freedom is preserved (no surveillance, no tracking, no central control).

Probability: Currently lower but increases rapidly as verification crisis becomes obvious and Scenarios A/B demonstrate their failures. This is the only solution that works long-term without sacrificing freedom.

VI. WHY PORTABLE IDENTITY IS THE ONLY ARCHITECTURE THAT WORKS

The challenge is specific: verify voter uniqueness and eligibility without creating surveillance infrastructure.

Traditional solutions fail this challenge because they operate on fundamentally wrong assumption: verification requires observation.

Portable Identity succeeds because it operates on different principle: verification through cryptographic proof rather than behavioral observation.

The Architecture:

Component 1: Self-Sovereign DIDs

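Each citizen generates a Decentralized Identifier with a cryptographic key pair. The identifier exists independently of government: the citizen controls the private keys, and no central authority can revoke or modify the identity.

This provides: Uniqueness (each DID is cryptographically unique), Continuity (same DID persists across contexts), Control (citizen owns it, not institution). Because anyone can generate many DIDs, a unique identifier alone does not establish a unique person; that guarantee comes from the attestation and credential components below.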

Component 2: Distributed Timeline Attestation

Multiple independent parties attest to an identity’s continuous existence over time. These attestations are cryptographically signed, timestamped, and independently verifiable.

Attestors include: employers (confirming work history), banks (confirming financial relationship), universities (confirming educational enrollment), professional organizations (confirming membership), utility companies (confirming service provision).

No single attestor sees the complete history. Each signs only its specific interaction. Together, they prove the identity existed continuously over time without creating a centralized database.

Attestor incentives: Reputation depends on integrity. An attestor that signs false attestations destroys its own value as an attestor. Market mechanisms encourage honest attestation.

This prevents: Newly-created identities claiming long history, AI-generated identities forging timeline, Sybil attacks through mass identity creation, Central surveillance through single attestor.
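A minimal sketch of the timeline check follows, under stated assumptions. HMAC tags stand in for real digital signatures (a deployed system would use public-key signatures so that verifiers never hold attestor secrets), and the `Attestation` record, the one-attestation-per-year rule, and the `min_attestors` parameter are all hypothetical choices made for illustration.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    attestor: str   # e.g. "bank" or "university"
    did: str        # identifier being attested
    year: int       # period the attestor vouches for
    tag: bytes      # stand-in for a digital signature


def _message(attestor: str, did: str, year: int) -> bytes:
    return f"{attestor}|{did}|{year}".encode()


def sign(key: bytes, attestor: str, did: str, year: int) -> Attestation:
    tag = hmac.new(key, _message(attestor, did, year), hashlib.sha256).digest()
    return Attestation(attestor, did, year, tag)


def is_valid(att: Attestation, keys: dict) -> bool:
    key = keys.get(att.attestor)
    if key is None:
        return False  # unknown attestor
    expected = hmac.new(key, _message(att.attestor, att.did, att.year), hashlib.sha256).digest()
    return hmac.compare_digest(att.tag, expected)


def has_continuous_timeline(did, attestations, keys, first_year, last_year, min_attestors=2):
    """Every year in the range is covered by a valid attestation,
    and the valid attestations come from enough distinct attestors."""
    valid = [a for a in attestations if a.did == did and is_valid(a, keys)]
    covered = {a.year for a in valid}
    attestors = {a.attestor for a in valid}
    every_year = all(y in covered for y in range(first_year, last_year + 1))
    return every_year and len(attestors) >= min_attestors


# Hypothetical attestors and identities.
keys = {"bank": b"bank-secret", "university": b"uni-secret", "employer": b"emp-secret"}
did = "did:example:alice"
atts = [
    sign(keys["university"], "university", did, 2019),
    sign(keys["university"], "university", did, 2020),
    sign(keys["bank"], "bank", did, 2021),
    sign(keys["employer"], "employer", did, 2022),
]
fresh = [sign(keys["bank"], "bank", "did:example:new", 2022)]

print(has_continuous_timeline(did, atts, keys, 2019, 2022))                 # long-lived identity
print(has_continuous_timeline("did:example:new", fresh, keys, 2019, 2022))  # newly created identity
```

Note that this sketch shows the attestations to the verifier in the clear. Proving the same property without revealing the history would require wrapping the check in a zero-knowledge proof.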

Component 3: Zero-Knowledge Eligibility Proofs

A citizen proves they meet voting eligibility requirements (citizenship, residency, age) without revealing personal information. This uses zero-knowledge cryptographic protocols that prove “I have attribute X” without revealing X’s value or linking the proof to an identity.

This provides: Eligibility verification (only citizens vote), Privacy preservation (no data revealed), No surveillance infrastructure (no central database to query).
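To give a feel for how such a proof can work, here is a sketch of a classic construction: an OR-composition of Schnorr proofs, made non-interactive with a hash. The prover shows that they hold the secret key for one of the public keys on an eligibility list without revealing which one. This is an illustration, not the protocol the architecture prescribes. The group parameters are toy-sized and insecure, the list is a hypothetical stand-in for an eligibility register, and a production system would more likely use zk-SNARK-style proofs over a Merkle commitment.

```python
import hashlib
import secrets

# Toy group (assumption, far too small for real use): P = 2Q + 1 with
# P and Q prime, and G = 4 generating the subgroup of order Q.
P, Q, G = 2039, 1019, 4


def keygen():
    x = secrets.randbelow(Q - 1) + 1   # secret key in [1, Q-1]
    return x, pow(G, x, P)             # (secret, public)


def challenge(ring, commitments, context):
    data = repr((list(ring), list(commitments), context)).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove_membership(ring, index, secret, context):
    """Prove knowledge of the secret key for ring[index] without revealing index."""
    n = len(ring)
    t, c, s = [0] * n, [0] * n, [0] * n
    for i in range(n):
        if i == index:
            continue
        # Simulate a valid-looking transcript for keys we do not own.
        c[i] = secrets.randbelow(Q)
        s[i] = secrets.randbelow(Q)
        t[i] = pow(G, s[i], P) * pow(ring[i], (Q - c[i]) % Q, P) % P
    r = secrets.randbelow(Q)
    t[index] = pow(G, r, P)
    total = challenge(ring, t, context)
    c[index] = (total - sum(c)) % Q        # c[index] is still 0 inside sum(c)
    s[index] = (r + c[index] * secret) % Q
    return t, c, s


def verify_membership(ring, proof, context):
    t, c, s = proof
    if not (len(t) == len(c) == len(s) == len(ring)):
        return False
    for y, ti, ci, si in zip(ring, t, c, s):
        if pow(G, si, P) != ti * pow(y, ci, P) % P:
            return False
    # The individual challenges must add up to the hash of the transcript.
    return sum(c) % Q == challenge(ring, t, context)


# Hypothetical eligibility list of four public keys; the prover owns entry 2.
keypairs = [keygen() for _ in range(4)]
ring = [public for _, public in keypairs]
proof = prove_membership(ring, 2, keypairs[2][0], "election-2028")
print(verify_membership(ring, proof, "election-2028"))
```

The proof grows linearly with the size of the list, which is one reason large deployments favor succinct proof systems instead.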

Component 4: Threshold Credential Systems

The voting credential is issued as a threshold cryptographic credential: creating it requires the cooperation of multiple authorities, but no single authority can identify the voter or prevent voting.

Example: A credential requires 3-of-5 authorities to issue. The five might be election officials, international observers, civil society organizations, academic institutions, and judicial oversight, any three of which must cooperate. A single corrupted authority cannot manipulate the system.

This prevents: Single point of failure, Targeted voter suppression, Vote buying/coercion (because vote cannot be proven to third party), Central control by any single entity.
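The 3-of-5 idea can be illustrated with Shamir secret sharing, the standard building block behind many threshold schemes. In this sketch an issuing key is split so that any three shares recover it and two do not. The key value and function names are hypothetical, and the example simplifies one important point: real threshold credential issuance would use a threshold signature scheme, so the key is never reassembled in one place.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime, used as the field modulus


def split_secret(secret: int, threshold: int, shares: int):
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 with the secret as constant term.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        acc = 0
        for coeff in reversed(coeffs):   # Horner's rule
            acc = (acc * x + coeff) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover_secret(points) -> int:
    """Lagrange interpolation of the polynomial at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical issuing key shared among five authorities, threshold three.
issuing_key = 123456789
authority_shares = split_secret(issuing_key, threshold=3, shares=5)

print(recover_secret(authority_shares[:3]) == issuing_key)   # any three suffice
print(recover_secret(authority_shares[:2]) == issuing_key)   # two are not enough
```

Any subset of three shares works, so the scheme tolerates two authorities being unavailable or uncooperative.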

Component 5: Double-Vote Prevention Through Nullifiers

When voting, the citizen generates a cryptographic nullifier: a value unique to this election, derived from a secret bound to their DID, but unlinkable to their identity. The system checks whether this nullifier has been used. If yes, it rejects the ballot as a double vote. If no, it accepts the ballot and records the nullifier.

This provides: Double-vote prevention (nullifier can only be used once per election), Ballot secrecy (nullifier doesn’t reveal identity), No tracking (nullifier unlinkable across elections), Verification without identification.
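The nullifier check itself is simple, as the following sketch shows. It assumes the nullifier is computed as a keyed hash of the election identifier under a secret only the voter holds; the secret, the election identifiers, and the `NullifierRegistry` class are hypothetical. The sketch omits the accompanying zero-knowledge proof that the nullifier was derived from a properly credentialed secret, which a real system would need.

```python
import hashlib
import hmac


def nullifier(identity_secret: bytes, election_id: str) -> str:
    """Deterministic per-election tag that reveals nothing about the secret."""
    return hmac.new(identity_secret, election_id.encode(), hashlib.sha256).hexdigest()


class NullifierRegistry:
    """Records used nullifiers for one election and rejects repeats."""

    def __init__(self):
        self._seen = set()

    def accept(self, value: str) -> bool:
        if value in self._seen:
            return False      # double vote
        self._seen.add(value)
        return True


# Hypothetical voter secret and election identifiers.
alice_secret = b"alice-identity-secret"
registry = NullifierRegistry()

first = nullifier(alice_secret, "election-2028")
print(registry.accept(first))                              # first ballot accepted
print(registry.accept(nullifier(alice_secret, "election-2028")))  # repeat rejected
print(first == nullifier(alice_secret, "election-2032"))  # different tag next election
```

Because the tag changes with the election identifier, the registry for one election cannot be used to track the same voter in another.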

Why AI Cannot Defeat This:

AI can generate synthetic identities—but they have no continuous timeline attestation from distributed verifiers with reputation at stake.

AI can simulate behavior—but cannot generate valid zero-knowledge proofs of citizenship without access to actual citizenship records and cryptographic infrastructure.

AI can create fake documents—but cannot generate valid threshold credentials without cooperation of multiple independent authorities with conflicting interests.

AI can operate synthetic accounts—but cannot generate valid nullifiers derived from DIDs with proper distributed timeline attestation verified by independent entities over years.

The architecture verifies properties AI cannot fake: cryptographically-proven continuous human existence with distributed verification from entities with reputation incentives for honesty.

VII. THE TIMELINE: 2-5 YEARS BEFORE IRREVERSIBILITY

The window for implementing Portable Identity before verification crisis becomes irreversible is finite and closing.

Phase 1 (2024-2026): Crisis Recognition

Early evidence of synthetic voter participation becomes undeniable through academic research, journalistic investigations, and platform transparency reports. All data sources converge: a significant percentage of political participation is synthetic.

Public awareness begins growing. Policy discussions start in think tanks and government committees. But institutional inertia prevents decisive action.

Phase 2 (2026-2028): First Failures

Major elections exhibit statistically anomalous patterns that resist conventional explanation. Post-election forensics reveal synthetic participation at scales that affected electoral dynamics. Public confidence in electoral outcomes begins to erode measurably.

Some nations experiment with authoritarian solutions (mandatory surveillance for voting eligibility). Others begin exploring Portable Identity alternatives in pilot programs.

Phase 3 (2028-2030): Divergence

Democratic nations face a binary choice: Scenario B (surveillance state) or Scenario C (Portable Identity).

Early adopters of Portable Identity demonstrate the architecture functions as intended. Late adopters scramble to implement either solution under crisis pressure. Holdouts face an acute legitimacy crisis as citizens lose faith in electoral integrity.

Phase 4 (2030+): Lock-In

Whatever solution nations adopt in Phase 3 becomes locked in for decades. Infrastructure is expensive to change. International standards emerge around whichever approach dominates. Network effects make switching costs prohibitive.

If Scenario B dominates: global norm becomes surveillance state infrastructure for electoral participation. If Scenario C dominates: global norm becomes privacy-preserving cryptographic verification.

The Critical Window:

Decisions made 2025-2028 determine which scenario unfolds. After 2030, path dependency makes reversal extremely difficult—both technically (infrastructure already deployed) and politically (institutional interests crystallized around chosen approach).

The time to deploy Portable Identity infrastructure is before a crisis forces desperate measures.

Building after the crisis means building under panic, which likely results in authoritarian solutions that “work” immediately rather than privacy-preserving solutions that require years to deploy properly.

VIII. WHAT MUST BE BUILT

Portable Identity for democratic participation requires specific infrastructure components that can be developed within a 2-5 year timeline:

1. National DID Registries (Distributed)

These are not central databases but distributed ledgers showing that DIDs exist without revealing identity information. Multiple independent parties maintain portions. No single party controls the complete data.

Technical implementation: Blockchain or distributed hash table architecture with cryptographic proofs of inclusion without revealing content.
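A Merkle tree is one common way to provide such inclusion proofs. In the sketch below a registry publishes only a single root hash, and a holder can show that a given entry is included by supplying a short path of sibling hashes. The entries are hypothetical placeholder DIDs, and padding odd levels by repeating the last node is a simplification chosen for brevity. The proof reveals the one entry being checked but nothing about the other entries beyond their hashes.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """Return all tree levels, from hashed leaves up to the single root."""
    levels = [[h(b"leaf:" + leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur.append(cur[-1])   # pad odd levels by repeating the last node
        levels.append([h(b"node:" + cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def inclusion_proof(levels, index):
    """Sibling hashes from leaf to root, each flagged if it sits on the left."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify_inclusion(leaf: bytes, proof, root: bytes) -> bool:
    node = h(b"leaf:" + leaf)
    for sibling, sibling_is_left in proof:
        node = h(b"node:" + (sibling + node if sibling_is_left else node + sibling))
    return node == root


# Hypothetical registry entries.
entries = [f"did:example:{name}".encode() for name in ["a", "b", "c", "d", "e"]]
levels = build_levels(entries)
root = levels[-1][0]

proof_c = inclusion_proof(levels, 2)
print(verify_inclusion(entries[2], proof_c, root))                # entry is in the registry
print(verify_inclusion(b"did:example:mallory", proof_c, root))   # entry is not
```

The proof length grows with the logarithm of the registry size, so verification stays cheap even for national-scale registries.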

2. Timeline Attestation Networks

Networks of independent attestors (banks, universities, employers, professional organizations) that cryptographically sign timeline attestations for DIDs. Redundant, distributed, no single point of control or failure.

Attestors maintain cryptographic logs of their attestations, enabling verification without revealing underlying identity data. Market mechanisms create reputation incentives for honest attestation.

3. Zero-Knowledge Proof Protocols

Standardized protocols for proving citizenship, residency, age without revealing personal data. Must be implementable on consumer devices without specialized hardware to ensure accessibility.

Based on established cryptographic techniques (zk-SNARKs, zk-STARKs, Bulletproofs) adapted for the voting verification use case.

4. Threshold Credential Authorities

Coalitions of government departments, international observers, civil society organizations that jointly issue voting credentials. Requires cooperation of multiple parties—prevents single-authority control.

Implements threshold cryptography where credential creation requires M-of-N authorities, with N chosen large enough that corrupting M parties is economically infeasible.

5. Voting Systems Integration

Existing voting infrastructure (ballot scanners, voting machines, mail-in systems) must integrate with Portable Identity verification without compromising ballot secrecy or creating linkability between identity and vote.

Technical challenge: Separate credential verification (proving eligibility) from ballot casting (exercising vote) such that no entity can link the two.
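One classical technique for this kind of separation is the blind signature, introduced by David Chaum. It is offered here as an illustration and is not named in the architecture above. The authority signs a ballot token it cannot see, after checking eligibility by whatever means; the voter removes the blinding and later presents the token and signature, which the ballot box can verify without any link back to the signing session. The RSA key below is a textbook example with tiny primes and is insecure, and the token value is hypothetical.

```python
import hashlib
import math
import secrets

# Textbook RSA key with tiny primes (assumption, illustration only): N = 61 * 53.
N, E, D = 3233, 17, 2753


def token_to_int(token: bytes) -> int:
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(message: int):
    """Voter hides the message with a random factor r before sending it."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return message * pow(r, E, N) % N, r


def sign_blinded(blinded: int) -> int:
    """Authority signs after checking eligibility; it never sees the message."""
    return pow(blinded, D, N)


def unblind(blinded_signature: int, r: int) -> int:
    """Voter strips the blinding factor, leaving a signature on the message."""
    return blinded_signature * pow(r, -1, N) % N


def verify(message: int, signature: int) -> bool:
    """Ballot box checks the authority's signature on the token."""
    return pow(signature, E, N) == message


# Hypothetical ballot token chosen by the voter.
message = token_to_int(b"ballot-token-1")
blinded, r = blind(message)
signature = unblind(sign_blinded(blinded), r)
print(verify(message, signature))
```

The authority learns that an eligible person obtained a credential, and the ballot box learns that a credentialed ballot was cast, but neither can connect the two events.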

6. International Standards

Cross-border recognition of Portable Identity credentials. Prevents balkanization where each nation’s verification system is incompatible with others, enabling:

- Citizens voting from abroad

- International election observation

- Cross-border democratic participation (where applicable)

- Standardized verification protocols

The Development Timeline:

- 2025: Specification finalization, initial pilot implementations

- 2026: Municipal-scale deployment, iterative improvement based on real-world usage

- 2027: Regional adoption, interoperability testing between different implementations

- 2028: National rollout becomes viable for early adopters with political will

- 2029-2030: Global availability if development proceeds without major obstacles

This assumes work starts immediately. Each year of delay pushes the timeline back and increases the probability of a crisis forcing inferior Scenario B solutions.

CONCLUSION: THE CHOICE THAT DETERMINES DEMOCRACY’S FUTURE

Every democracy faces the same choice in the next 2-5 years.

The verification crisis is not theoretical. It is here. Synthetic participants already constitute a significant percentage of digital political activity, measurable through multiple research methodologies. Current verification methods provide zero reliable information about whether voters are real humans because AI generates signals informationally indistinguishable from human-generated signals.

Democracy cannot function when you cannot verify voters. The mathematical aggregation of preferences requires knowing which signals originate from humans in the demos.

Three scenarios exist:

Scenario A: Continue current systems, watch verification collapse completely as synthetic participation reaches levels where democratic aggregation becomes mathematically meaningless.

Scenario B: Implement surveillance state infrastructure, verify voters through total monitoring, save democracy’s mathematics while destroying the privacy and freedom that make democracy worth having.

Scenario C: Deploy Portable Identity, verify voters cryptographically through distributed attestation without surveillance, preserve both democratic function and human freedom.

The choice is not “whether to verify voters.” That is non-negotiable for democracy to function mathematically.

The choice is: verify through surveillance (Scenario B) or verify through cryptography (Scenario C)?

Scenario A (do nothing) is choosing Scenario B by default—because when crisis hits and democratic legitimacy collapses, desperate measures win political support regardless of freedom costs.

Portable Identity represents democracy’s last chance to preserve both effectiveness and freedom.

Not because it’s elegant technology or interesting cryptography. Because it’s the only architecture that makes voter verification mathematically sound without creating surveillance infrastructure that inevitably gets weaponized for political control.

The Synthetic Electorate is here. Growing exponentially. Indistinguishable from real voters through observation.

Either we deploy cryptographic verification of personhood before the crisis forces surveillance solutions.

Or we lose democracy—not dramatically through coup or revolution, but through slow erosion of trust in electoral outcomes that nobody can verify, until the forms of democracy persist but the substance has died.

The window is 2-5 years.

The infrastructure exists in cryptographic research and early implementations.

The choice is clear.

The only question: will democracies implement Portable Identity before verification collapse becomes irreversible?

For the protocol infrastructure enabling verified democratic participation: cascadeproof.com

For the portable identity foundation democracy requires: portableidentity.global

About This Analysis

This article establishes why democracy faces an existential verification crisis as AI makes voters indistinguishable from bots through perfect behavioral simulation. The analysis draws on Shannon’s information theory (signal-noise collapse under perfect channel saturation), democratic theory (electoral integrity constraints requiring verified unique participants), and cryptographic verification principles to demonstrate why all current voter verification methods (documents, biometrics, behavior, social graphs) fail completely under AI-driven simulation. Three scenarios are analyzed: the status quo, which leads to democratic illegitimacy through unmeasurable synthetic participation; authoritarian surveillance, which preserves verification while destroying freedom; and Portable Identity, which provides privacy-preserving cryptographic verification through distributed attestation. The timeline (2-5 years) represents the window before infrastructure choices lock in for decades through path dependency and network effects. The Portable Identity architecture combines self-sovereign DIDs, distributed timeline attestation with reputation-incentivized attestors, zero-knowledge eligibility proofs, threshold credentials requiring multi-party cooperation, and cryptographic nullifiers. It provides the only viable solution preserving both democratic function and human freedom once synthetic participants become informationally indistinguishable from real voters under observation-based verification methods.

Theoretical foundations referenced:

- Arrow, K. (1951). “Social Choice and Individual Values” – Preference aggregation requirements

- Buchanan, J. & Tullock, G. (1962). “The Calculus of Consent” – Democratic decision theory

- Dahl, R. (1989). “Democracy and Its Critics” – Electoral integrity requirements

- Shannon, C. (1948). “A Mathematical Theory of Communication” – Signal verification limits under noise

- Chaum, D. (1981). “Untraceable Electronic Mail” – Anonymous credential foundations

- Brands, S. (1999). “Rethinking Public Key Infrastructures” – Privacy-preserving credentials

Rights and Usage

All materials published under PortableIdentity.global — including definitions, protocol frameworks, semantic standards, research essays, and theoretical architectures — are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to PortableIdentity.global.

How to attribute:

- For articles/publications:

“Source: PortableIdentity.global”

- For academic citations:

“PortableIdentity.global (2025). [Title]. Retrieved from https://portableidentity.global”

2. Right to Adapt

Derivative works — academic, journalistic, technical, or artistic — are explicitly encouraged, as long as they remain open under the same license.

Portable Identity is intended to evolve through collective refinement, not private enclosure.

3. Right to Defend the Definition

Any party may publicly reference this manifesto, framework, or license to prevent:

- private appropriation

- trademark capture

- paywalling of the term “Portable Identity”

- proprietary redefinition of protocol-layer concepts

The license itself is a tool of collective defense.

No exclusive licenses will ever be granted.

No commercial entity may claim proprietary rights, exclusive protocol access, or representational ownership of Portable Identity.

Identity architecture is public infrastructure — not intellectual property.