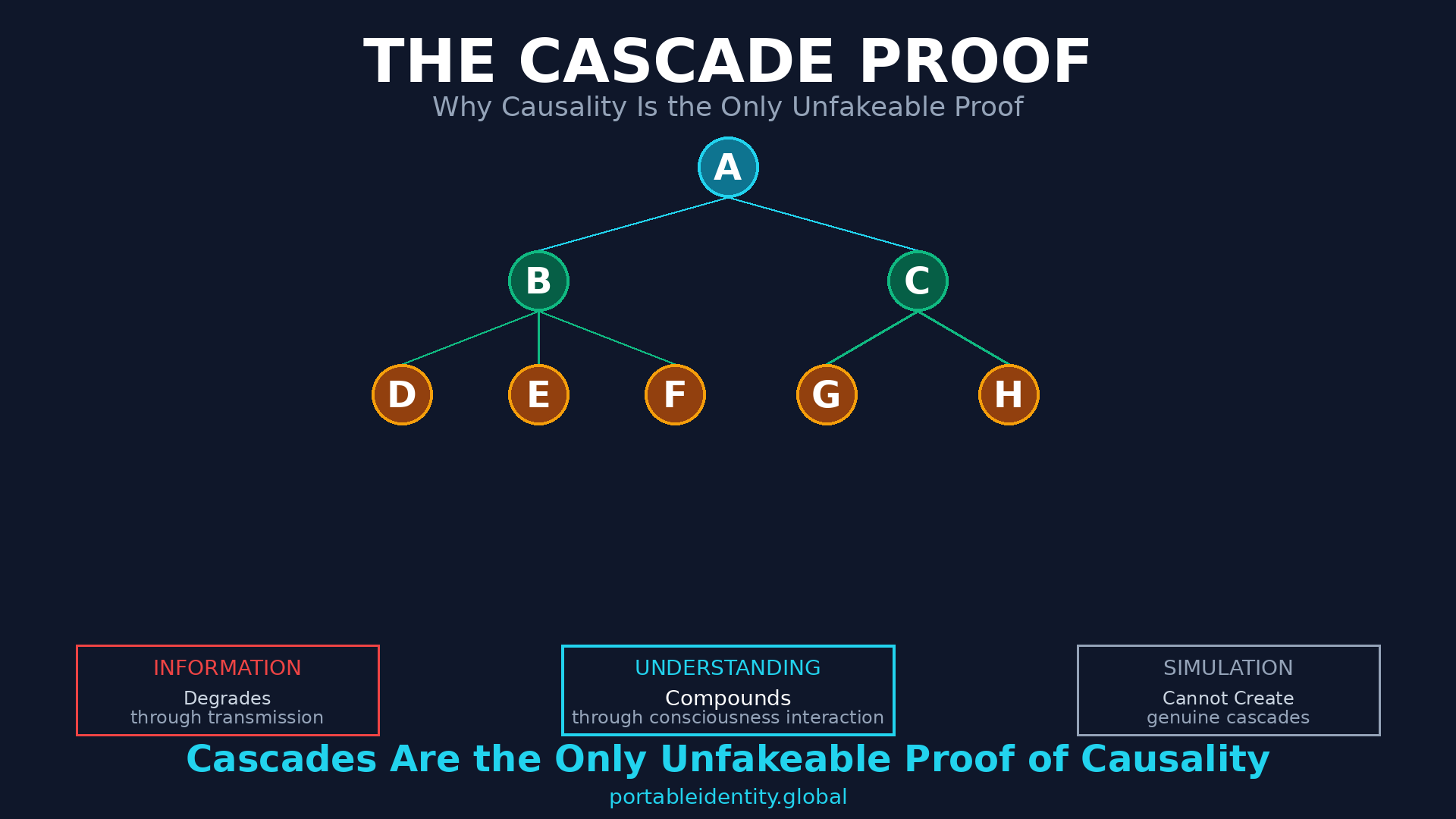

The Cascade Proof

Why Causality Is the Only Unfakeable Proof (And How Portable Identity Makes It Measurable)

AI can fake everything except one thing: genuine multi-generational cascade effects that prove sustained causality over time. This is not a technical limitation. This is an information-theoretic impossibility. And it changes everything, from how we prove consciousness exists to how legal systems establish …